A project of the Robotics 2019 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Weihong Tang, Renzhi Tang

Introduction

We found an existing ROS driver for the Ladybug5+, but it has some bugs. We fix them so that we can access the JPEG data of the 6 cameras of the Ladybug5+ under the Linux operating system. In addition, we provide a launch file for image splitting and another launch file for stitching panoramic images, which can be combined with LiDAR data to generate colored point clouds in the future. Finally, we recorded 3 datasets on our campus. Two of them mainly cover the underground parking garage and the remaining one covers the campus.

Figure 1: Final car

Description

1. Hardware

Our car project is composed of 3 Ladybug5+ cameras. Their positions are as follows:

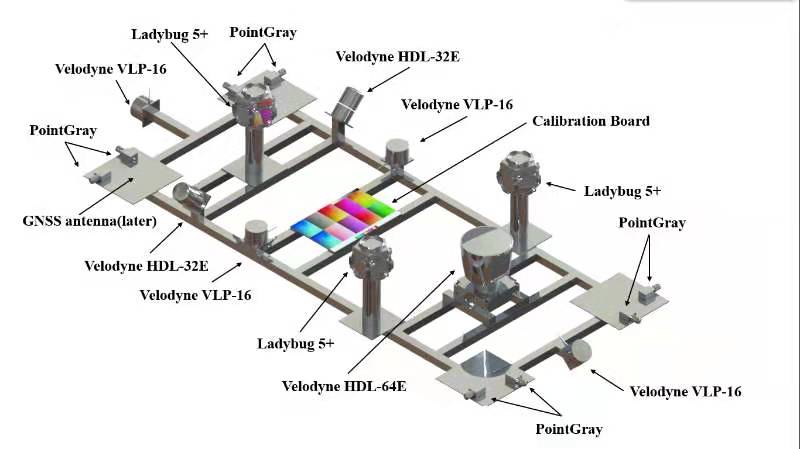

Figure 2: Sensor Layout

Finally, the hardware will be mounted on a BYD e6 electric car for data collection.

The final product is shown in Figure 3.

Figure 3: Final hardware

2. Software

2.1 Data Acquisition

To record the JPEG-compressed data in ROS, Ladybug image data has to be transported between ROS nodes, but no existing message type is suitable for this. We therefore define a new ROS message type called Ladybug5PImage, which contains all the information from the raw image data, for example the compressed 4-channel image data, the timestamp, GPS information and so on. Instead of publishing processed images with the sensor_msgs/Image message type, we publish the raw data in order to meet the required frame rate (30 Hz).
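To illustrate why publishing the raw compressed data helps with the 30 Hz requirement, here is a minimal Python sketch of a container that mirrors the kind of information described above. It is not the actual Ladybug5PImage definition: the class name `RawLadybugFrame`, its field names, and the helper `required_bandwidth` are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class RawLadybugFrame:
    """Hypothetical stand-in for a raw Ladybug frame message.

    The field names are assumptions, not the real Ladybug5PImage layout.
    """
    stamp_sec: float                                          # acquisition timestamp in seconds
    jpeg_streams: List[bytes] = field(default_factory=list)   # compressed image streams, kept undecoded
    gps_info: str = ""                                        # GPS information, stored as received

    def payload_bytes(self) -> int:
        """Total size of the compressed image data in this frame."""
        return sum(len(stream) for stream in self.jpeg_streams)


def required_bandwidth(frame: RawLadybugFrame, rate_hz: float = 30.0) -> float:
    """Bytes per second needed to publish frames of this size at rate_hz."""
    return frame.payload_bytes() * rate_hz
```

Because the streams stay compressed, the per-frame payload is only the sum of the JPEG sizes, and decoding can be postponed until the bag is replayed.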

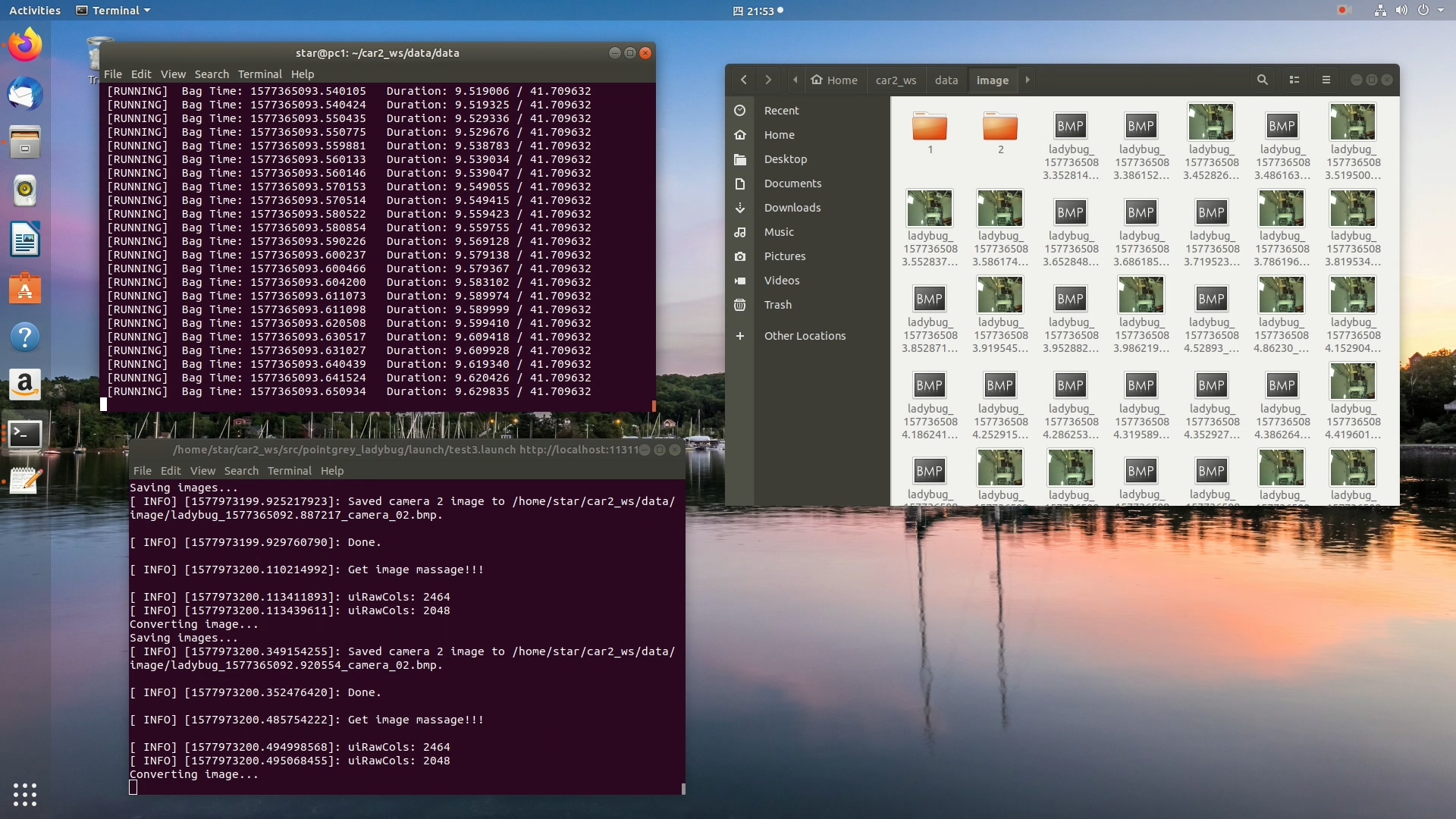

2.2 Image Split

Image splitting is also implemented in our driver, as shown in Figure 4. After the data has been recorded, the rosbag is replayed and the driver splits the specially formatted compressed JPEG data into 6 individual images. We publish the 6 individual images on 6 different ROS topics. We also reserve an interface for saving the images, which can be found in the C++ source file. It is intended for future use and was not used in this project.

Figure 4: Image splitting
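The idea of cutting one buffer into separate JPEG streams can be sketched in Python as follows. This is only an illustration under a simplifying assumption: the streams are found by scanning for the standard JPEG start-of-image and end-of-image markers, whereas the actual driver parses the Ladybug-specific container format. The function names and the topic prefix are hypothetical.

```python
from typing import List

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def split_jpeg_streams(buffer: bytes) -> List[bytes]:
    """Return every complete SOI...EOI span found in buffer, in order.

    Assumption: no stream embeds another JPEG (for example a thumbnail),
    otherwise plain marker scanning would cut the stream too early.
    """
    streams = []
    pos = 0
    while True:
        start = buffer.find(SOI, pos)
        if start < 0:
            break
        end = buffer.find(EOI, start + len(SOI))
        if end < 0:
            break  # truncated last stream: ignore it
        streams.append(buffer[start:end + len(EOI)])
        pos = end + len(EOI)
    return streams


def topic_names(n_cameras: int = 6, prefix: str = "/ladybug/camera") -> List[str]:
    """One hypothetical topic name per camera."""
    return [f"{prefix}{i}/image_raw" for i in range(n_cameras)]
```

Each returned byte string is a self-contained JPEG that can be decoded independently and published on its own topic.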

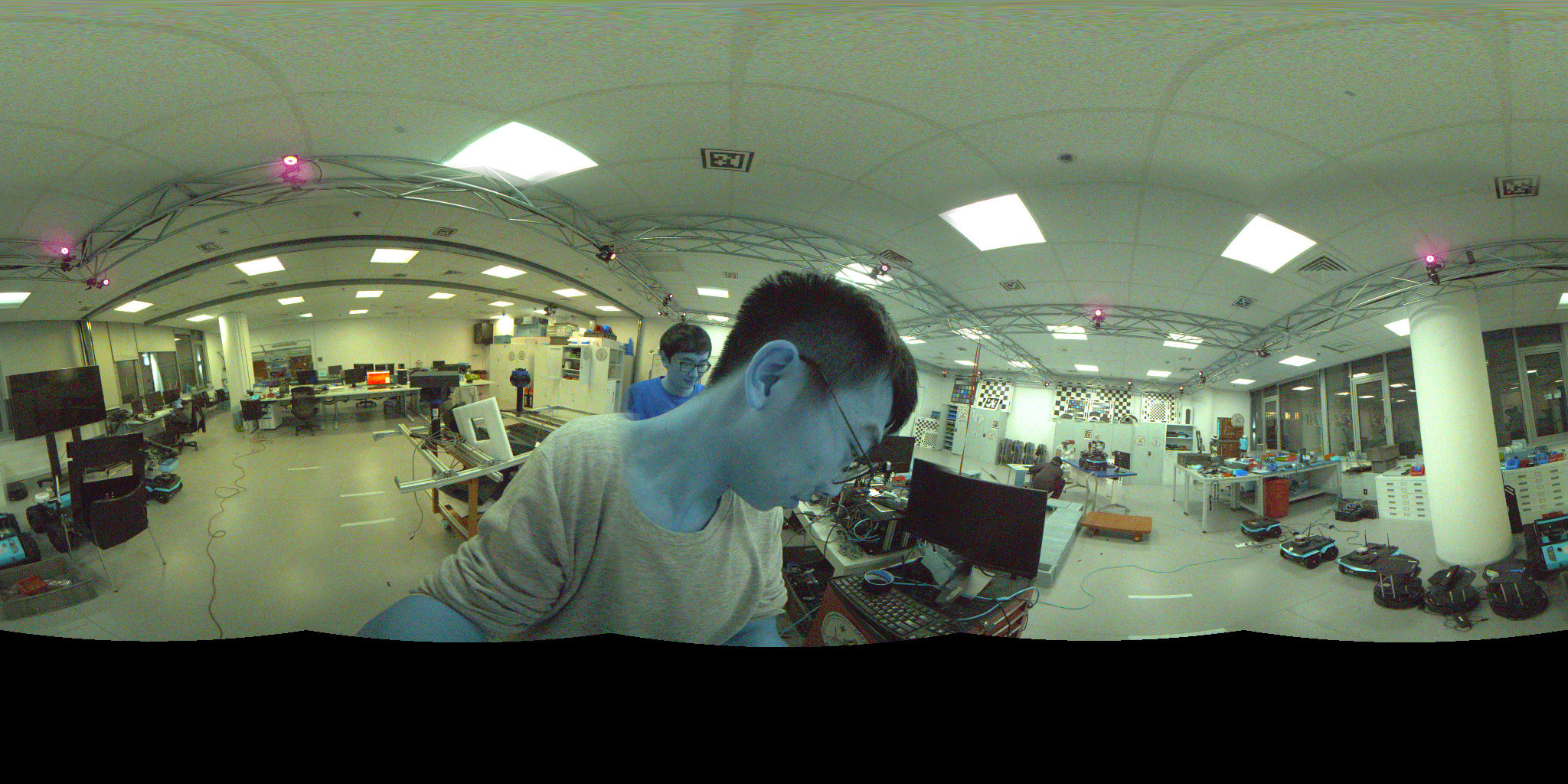

2.3 Image Stitch

Stitching the 6 images into a panoramic image is done with the Ladybug SDK API functions 'ladybugUpdateTextures' and 'ladybugRenderOffScreenImage'. We expect the panoramic images to be used in the future to run SLAM, or to be combined with LiDAR data to build colored point clouds. The panoramic image can also be published in ROS, and an interface for saving it is also reserved in the C++ code.
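For the planned coloring of LiDAR points, one needs to know which panorama pixel a 3D point falls on. The following Python sketch shows one common way to do this. It is not part of the Ladybug SDK and not our implementation. It assumes that the panorama is equirectangular, that the point is already expressed in the camera frame, and that the axes are x forward, y left, z up. The function name is hypothetical.

```python
import math
from typing import Tuple


def point_to_panorama_pixel(x: float, y: float, z: float,
                            width: int, height: int) -> Tuple[float, float]:
    """Map a 3D point in the camera frame to equirectangular pixel coordinates.

    Assumed convention: x forward, y left, z up. The forward direction
    lands on the image centre, and points to the left land left of centre.
    """
    r = math.sqrt(x * x + y * y + z * z)
    if r == 0.0:
        raise ValueError("a point at the camera centre has no direction")
    lon = math.atan2(y, x)   # azimuth, 0 means straight ahead
    lat = math.asin(z / r)   # elevation, pi/2 means straight up
    u = ((0.5 - lon / (2.0 * math.pi)) * width) % width  # column, wraps around
    v = (0.5 - lat / math.pi) * height                   # row, 0 is the top
    return u, v
```

The color of a LiDAR point would then be read from the panorama at the rounded (u, v), after transforming the point with the LiDAR-to-camera extrinsics, which are not covered here.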

Figure 5: Panoramic image

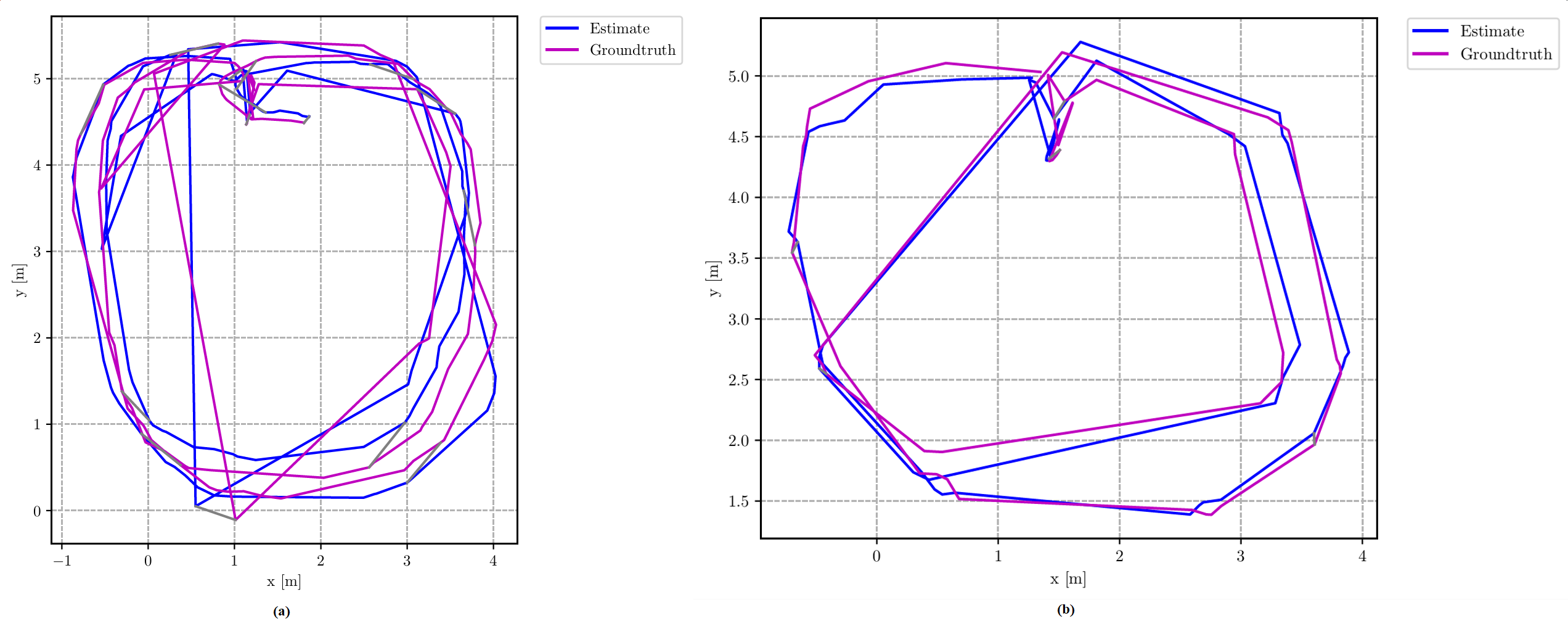

3. Testing in Lab & ORB_SLAM2

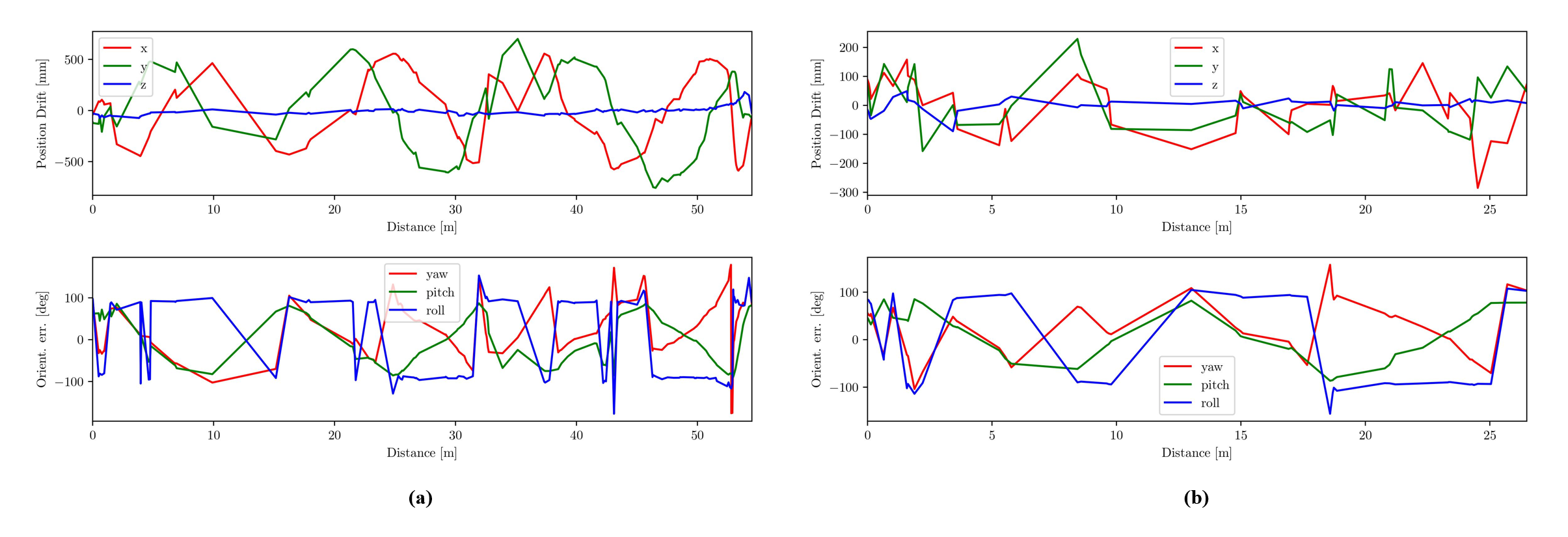

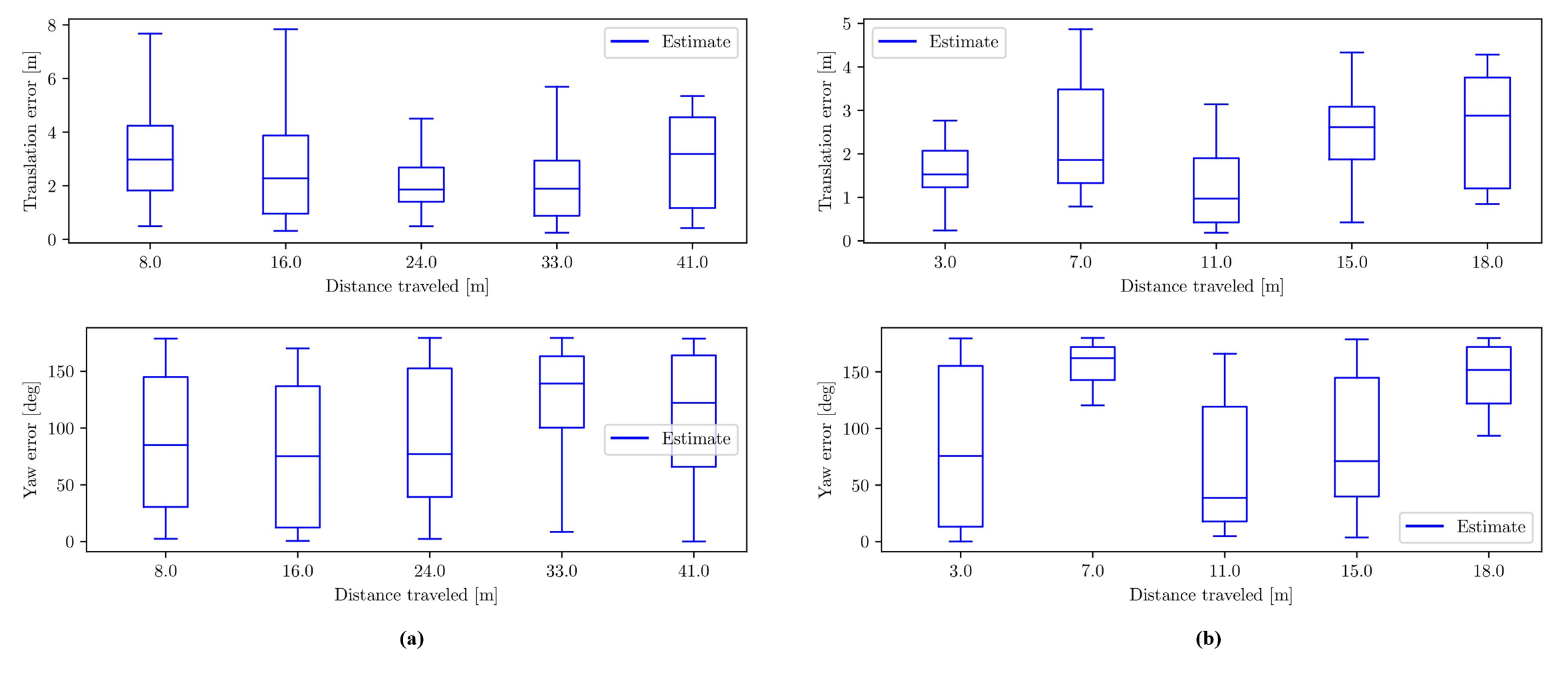

The way we recorded data in the lab is shown in the provided video. The ORB-SLAM2 results are shown in Figures 6 to 8.

Figure 6: Trajectories for in-lab ORB-SLAM2 (a) Data1 (b) Data2

Figure 7: Absolute error for in-lab ORB-SLAM2 (a) Data1 (b) Data2

Figure 8: Relative error for in-lab ORB-SLAM2 (a) Data1 (b) Data2
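As a reminder of what such error plots usually measure, here is a minimal Python sketch. It assumes that the absolute and relative errors correspond to the common absolute trajectory error and relative pose error. It also assumes the two trajectories are already time-associated and aligned, and it compares translations only, which is a simplification. It is not the evaluation tool used for the figures, and the function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def ate_rmse(estimated: Sequence[Point], ground_truth: Sequence[Point]) -> float:
    """Root-mean-square of the position differences between matched poses."""
    if len(estimated) != len(ground_truth) or len(estimated) == 0:
        raise ValueError("trajectories must be non-empty and of equal length")
    squared = [math.dist(e, g) ** 2 for e, g in zip(estimated, ground_truth)]
    return math.sqrt(sum(squared) / len(squared))


def rpe_translation(estimated: Sequence[Point], ground_truth: Sequence[Point],
                    delta: int = 1) -> List[float]:
    """Translation-only relative error over steps of `delta` poses.

    For each index i, the motion from pose i to pose i + delta is compared
    between the two trajectories. Rotation is ignored in this sketch.
    """
    errors = []
    for i in range(len(estimated) - delta):
        est_step = [b - a for a, b in zip(estimated[i], estimated[i + delta])]
        gt_step = [b - a for a, b in zip(ground_truth[i], ground_truth[i + delta])]
        errors.append(math.dist(est_step, gt_step))
    return errors
```

The absolute error reflects global drift of the whole trajectory, while the relative error reflects local accuracy between nearby poses.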

4. Data Recording in Campus

On the last day, we recorded data on our campus and obtained 3 datasets: one outdoor and two indoor.

Figure 9: Outdoor dataset route

Conclusion

First, we wrote a ROS driver for the Ladybug5+ that aims to acquire images at a frame rate of 30 Hz. We also defined a new message type for publishing the raw data acquired from the Ladybug5+. A launch file for splitting the raw data into 6 images and a launch file for stitching panoramic images are included in our project. Second, we recorded 2 bags of data to run ORB-SLAM2, and the evaluation of the SLAM results is given in our report. In the future, the panoramic images will be combined with LiDAR data to generate colored point clouds. Finally, we recorded the 3 datasets on our campus together with the LiDAR group.