Learning to Walk: Using Reinforcement Learning for Humanoid Robot Mobility

Authors: Zijie Zhu, Xi Zhang

Date: November 2024

Introduction

Imagine a robot that can walk and stand just like a human. This project teaches a humanoid robot to walk autonomously, move across uneven terrain, and switch between standing and walking. Our ultimate goal is a robot that performs these tasks in real-world environments without external support or guidance. We approach this with reinforcement learning, physics simulation, and real-time control.

What Is Reinforcement Learning?

Reinforcement learning (RL) is a type of machine learning where an agent (like our robot) learns to perform tasks by receiving rewards or penalties based on its actions. In this project, the robot learns to walk, maintain its balance, and switch between different actions by interacting with a virtual environment. The more successful the robot is, the more rewards it gets, encouraging it to continue improving.
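To make the reward idea concrete, here is a minimal sketch of what a per-step reward for walking could look like. It is an illustration only, not Dora2's actual reward: the function name, the terms, and every weight below are assumptions chosen for readability.

```python
import math


def walking_reward(forward_velocity, target_velocity, torso_tilt_rad, has_fallen):
    """Toy per-step reward (hypothetical terms and weights, not the project's)."""
    if has_fallen:
        # A fall gets a large fixed penalty so risky motions are discouraged.
        return -10.0
    # Tracking term: 1.0 when the robot moves at the target speed,
    # decaying smoothly as the speed error grows.
    tracking = math.exp(-4.0 * (forward_velocity - target_velocity) ** 2)
    # Posture term: penalize torso tilt away from vertical.
    upright_penalty = 0.5 * torso_tilt_rad ** 2
    return tracking - upright_penalty


# Walking at the commanded speed while upright earns the full reward.
on_target = walking_reward(0.5, 0.5, 0.0, False)
# Standing can be expressed with the same function by commanding zero speed.
standing = walking_reward(0.0, 0.0, 0.0, False)
```

With a target velocity of zero, the same kind of reward favors standing still, which is one simple way a single policy could be asked to handle both standing and walking.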

The Walking and Standing Robot

Our robot, named Dora2, has been trained to walk, balance, and stand independently. Dora2 can move forward and backward and turn on its own, much as a human does while walking. It can also stand still without any external help or support. It’s a versatile system that transitions seamlessly between standing and walking and adapts to different environments.

Key Features:

- Walking and Turning: The robot can walk in different directions, even turning while maintaining its balance.

- Autonomous Standing: After learning to walk, Dora2 was also trained to stand without falling, even in challenging situations.

- Real-World Readiness: We test our robot in both virtual environments and real-world settings to ensure it performs well outside of simulations.

How We Train the Robot

The robot learns its movements in simulation. We use a technique called "domain randomization," in which we vary the simulated conditions during training to prepare the robot for real-world uncertainties. Training runs on powerful computers in Isaac Gym, which can run thousands of simulation environments in parallel. We then test the policy in simulators with more realistic physics to check that the robot can balance and move without falling.
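As a rough illustration of domain randomization, the sketch below draws a fresh set of physics parameters for each training episode. The parameter names and ranges are hypothetical and are not taken from this project's configuration, and the code does not call any simulator API; it only shows the sampling idea.

```python
import random
from dataclasses import dataclass


@dataclass
class PhysicsParams:
    friction: float              # ground friction coefficient
    mass_scale: float            # multiplier on the nominal link masses
    motor_strength_scale: float  # multiplier on the available motor torque
    push_velocity: float         # size of a random push, in m/s


# Hypothetical ranges, chosen only for illustration.
RANGES = {
    "friction": (0.4, 1.2),
    "mass_scale": (0.9, 1.1),
    "motor_strength_scale": (0.8, 1.2),
    "push_velocity": (0.0, 0.5),
}


def sample_physics(rng):
    """Draw one set of parameters uniformly from each range."""
    return PhysicsParams(
        **{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()}
    )


# Each episode (or each parallel environment) gets its own draw.
rng = random.Random(0)
episode_params = [sample_physics(rng) for _ in range(4)]
```

A policy that performs well across many such draws is less likely to depend on one exact set of simulated conditions, which is the point of the technique.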

Once the robot learns how to walk and stand in the simulation, we test it in real environments. This step is crucial to ensure the robot works well in the real world, even with the challenges of unmodeled dynamics and environmental factors.

Simulations:

- Isaac Gym: We use a fast simulator to train the robot's movements quickly.

- MuJoCo: A more accurate simulator that helps us test the robot in a more realistic physics environment.

- Gazebo: A virtual world where we simulate real-world conditions, such as uneven terrain, to check how well the robot performs.

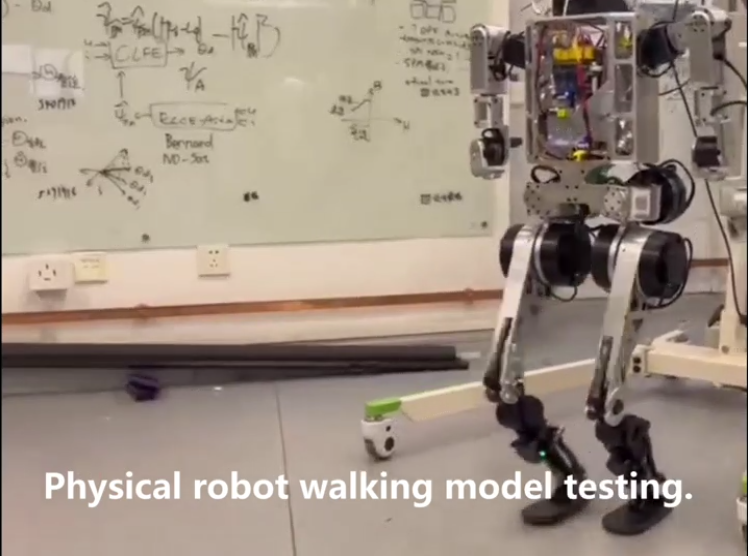

Real-World Testing

After training the robot in simulations, we move to real-world testing. This is where things get challenging. During real-world testing, we found a few issues, such as the robot falling during some maneuvers. But through iterative adjustments and retraining, we made the robot more robust, ensuring it can handle real-world challenges without damaging itself.

Achievements and Future Work

The robot has made remarkable progress:

- It can walk in the real world.

- It can stand stably.

- It is now ready for more advanced tasks and real-world applications, such as assisting people in difficult environments or performing industrial tasks.

Future Plans:

We plan to keep improving the robot’s walking and standing abilities, focusing on making it more stable, faster, and more efficient in real-world conditions. We also aim to integrate sensors that provide feedback on the robot’s surroundings, making it smarter and more adaptable.

Video and Pictures

Watch the robot in action as it walks and stands: see the Video folder and demo.mp4.

Conclusion

By teaching a robot to walk and stand autonomously, we are moving closer to developing machines that can interact seamlessly with the world around them. With continued improvements, robots like Dora2 could one day assist people in daily tasks, enhance automation, and even explore places that are difficult or dangerous for humans to reach.