Dexgrasp

This study presents a novel multi-modal approach to precise object manipulation with robotic arms, integrated with advanced computer vision techniques.

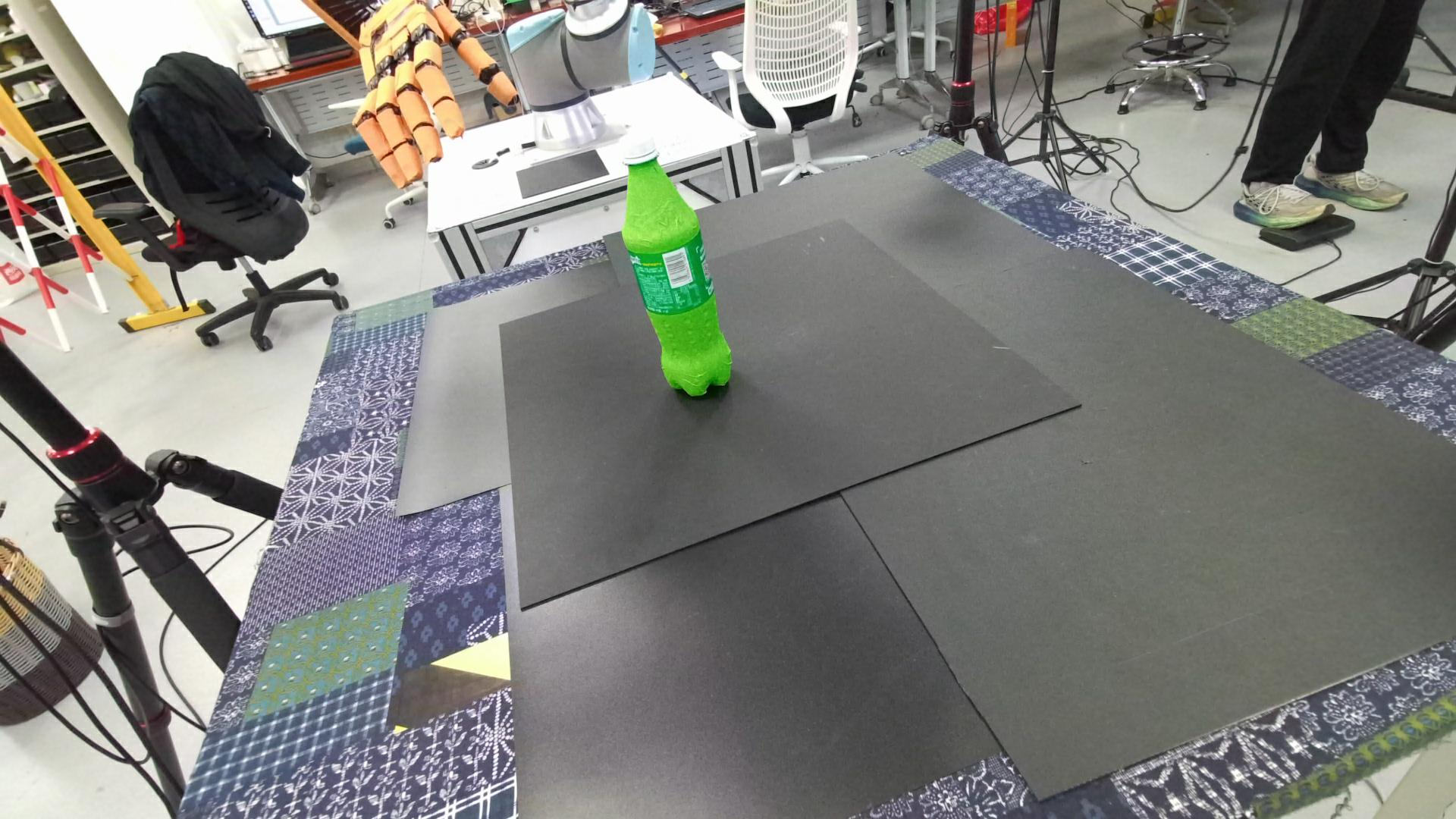

1. System Setting

The system uses four Kinect sensors placed around a desktop area. This setup captures both RGB and depth information from multiple angles, giving comprehensive coverage of the tabletop environment. The Kinect sensors are calibrated and their feeds are synchronized, so the scene is captured simultaneously from different viewpoints.
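Once the sensors are calibrated, each camera's points can be moved into a shared frame and merged. The following is a minimal sketch of that idea, assuming each calibration is available as a 4x4 camera-to-world pose; the function names and the example poses are hypothetical and not taken from our code.

```python
import numpy as np

def to_world(points_cam, T_world_cam):
    """Map (N, 3) camera-frame points into the world frame with a 4x4 pose."""
    R, t = T_world_cam[:3, :3], T_world_cam[:3, 3]
    return points_cam @ R.T + t

def fuse_clouds(clouds, extrinsics):
    """Concatenate per-camera clouds after moving each into the world frame."""
    return np.vstack([to_world(p, T) for p, T in zip(clouds, extrinsics)])

# Hypothetical example: two cameras, the second shifted by 1 m along x.
T0 = np.eye(4)
T1 = np.eye(4)
T1[:3, 3] = [1.0, 0.0, 0.0]
cloud0 = np.array([[0.0, 0.0, 1.0]])   # a point seen by camera 0
cloud1 = np.array([[-1.0, 0.0, 1.0]])  # the same point seen by camera 1
fused = fuse_clouds([cloud0, cloud1], [T0, T1])
```

In this toy case both cameras observe the same physical point, so the two rows of `fused` coincide after the transform.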

2. Experiments

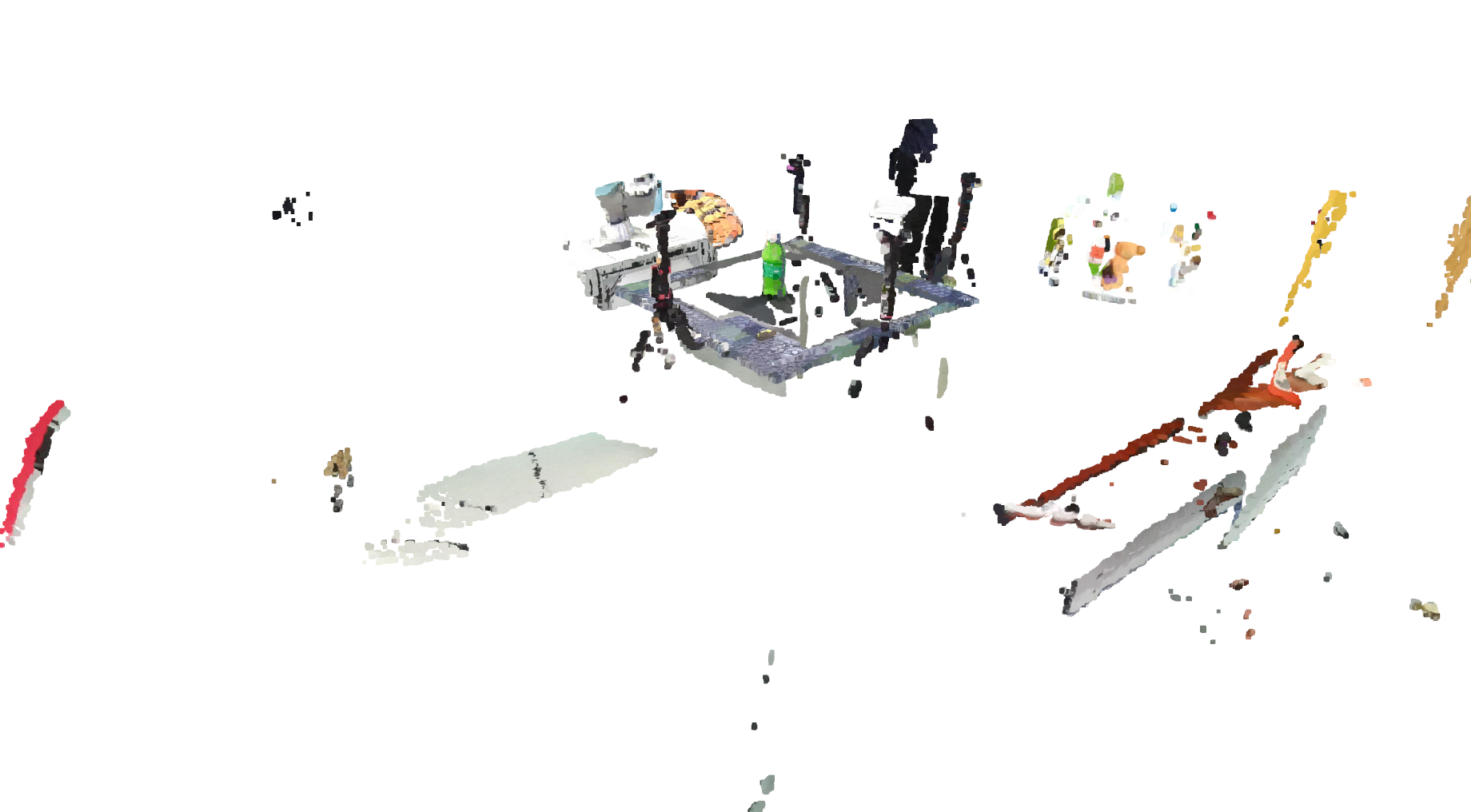

First, we use the Kinect sensors to obtain a point cloud with color information. Each capture consists of an RGB image and a depth image.
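An RGB image and a depth image can be combined into a colored point cloud by back-projecting every valid depth pixel through a pinhole camera model. The sketch below assumes the depth image is in meters and already aligned to the RGB image; the intrinsics `fx`, `fy`, `cx`, `cy` and the tiny example images are placeholder values, not the Kinect's real parameters.

```python
import numpy as np

def depth_to_colored_cloud(depth_m, rgb, fx, fy, cx, cy):
    """Back-project a depth image (meters) into (N, 3) points and (N, 3) colors."""
    h, w = depth_m.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))  # pixel column and row indices
    valid = depth_m > 0                             # zero depth means no measurement
    z = depth_m[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    points = np.stack([x, y, z], axis=1)
    colors = rgb[valid].astype(np.float64) / 255.0  # scale colors to [0, 1]
    return points, colors

# Placeholder 2x2 images; one pixel has no depth reading.
depth = np.array([[1.0, 0.0],
                  [2.0, 2.0]])
rgb = np.full((2, 2, 3), 255, dtype=np.uint8)
points, colors = depth_to_colored_cloud(depth, rgb, fx=1.0, fy=1.0, cx=0.0, cy=0.0)
```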

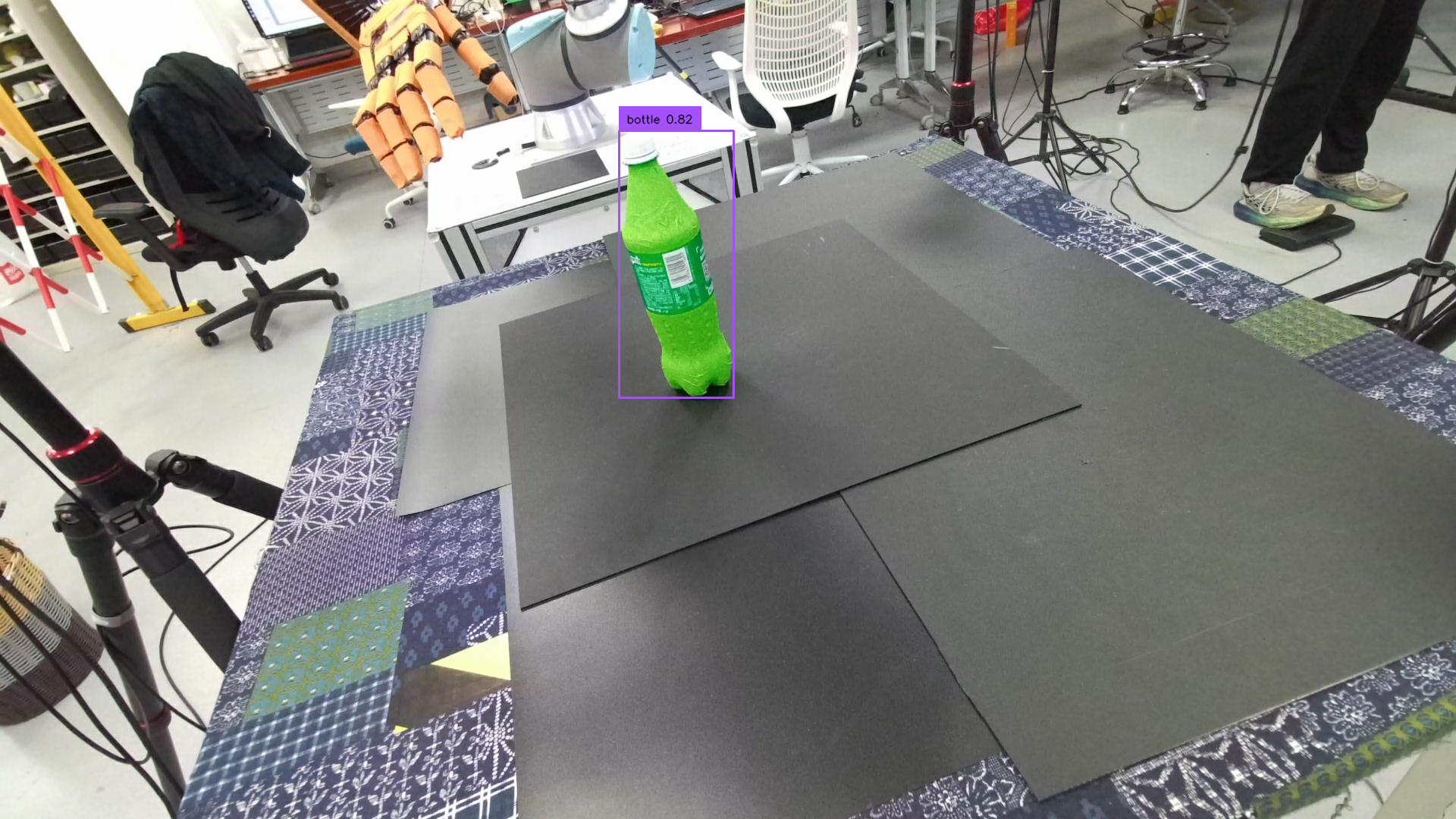

We input the possible semantic information about the objects and the captured RGB image into the LLaVA large vision-language model. The model analyzes the semantic content of the image and tells us what object it shows.

Next, we input the image and the object name into the large Dino prediction model, which segments the image and gives us the mask of the corresponding object.

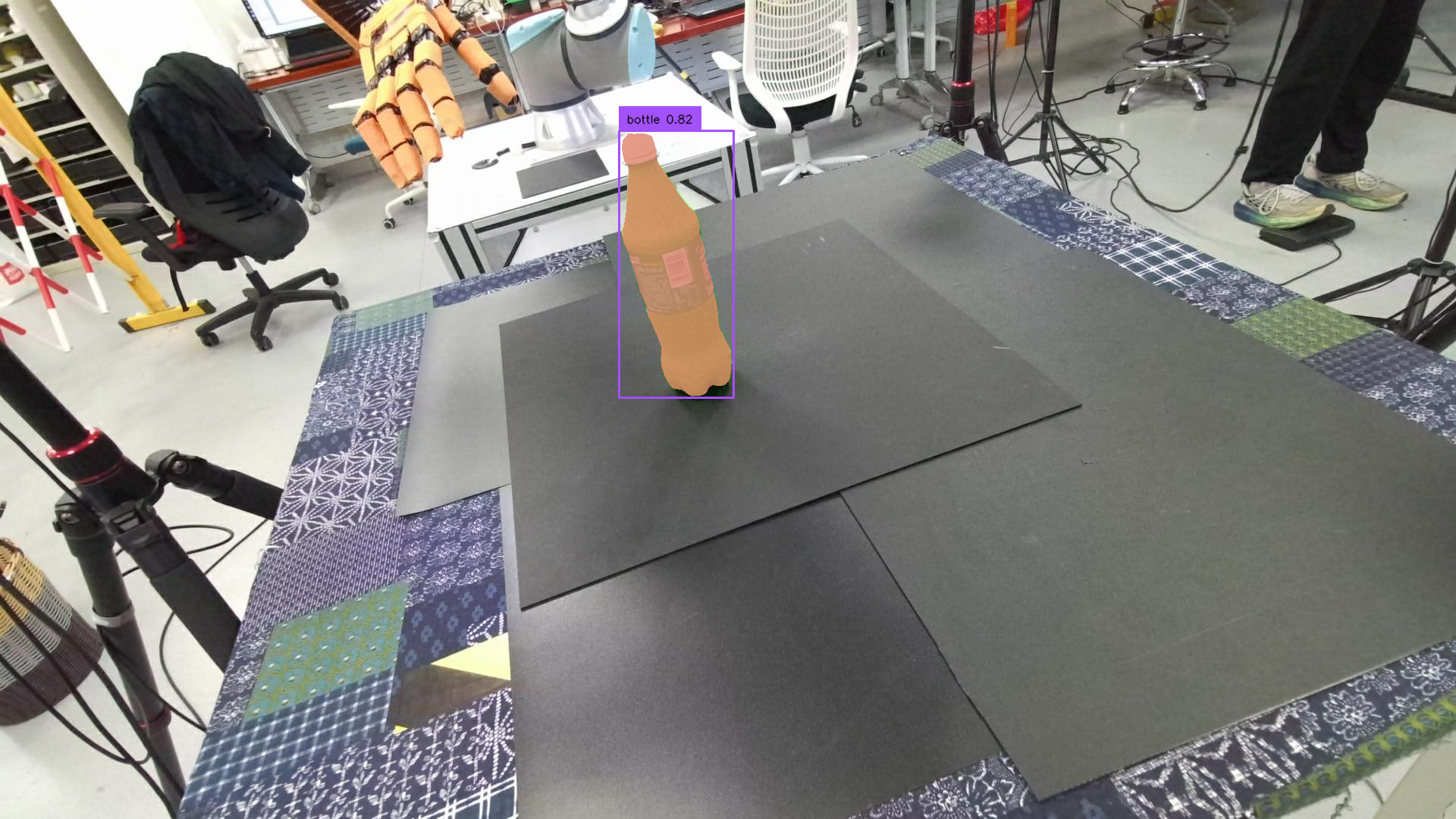

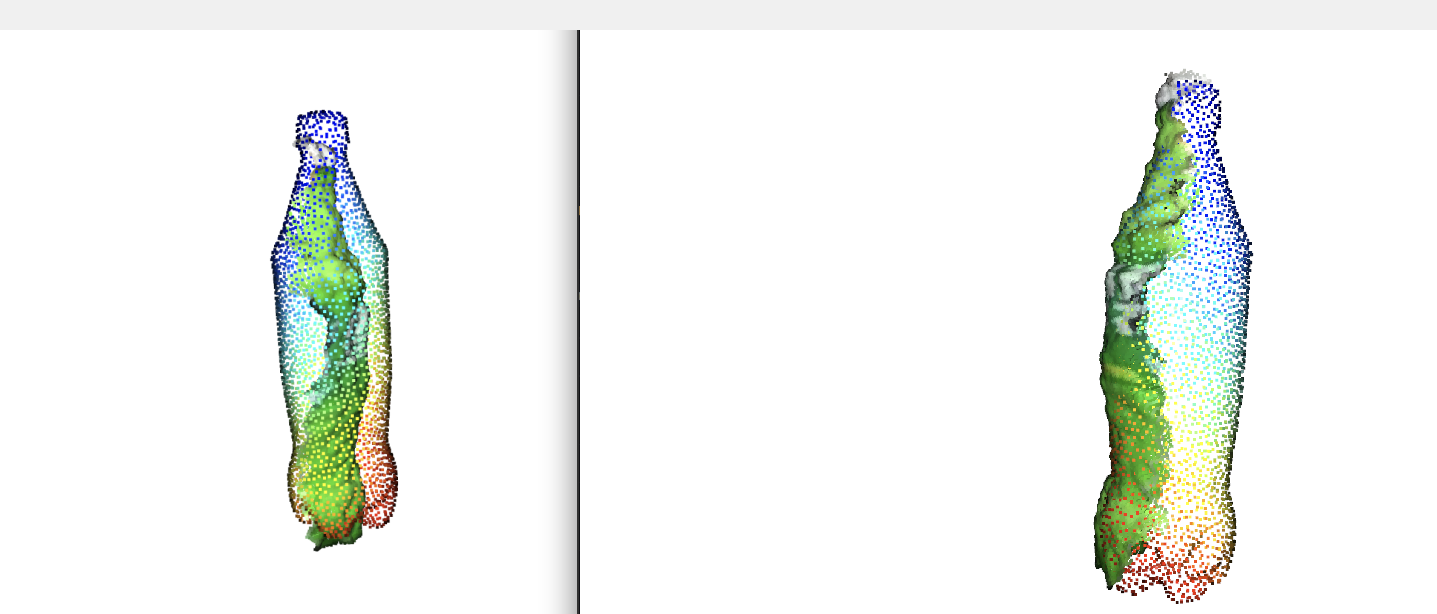

Using the mask, we segment the object's points out of the original point cloud.
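Applying a 2D mask to a point cloud is simple when the cloud is organized, meaning it keeps one 3D point per image pixel. The sketch below makes that assumption (an `(H, W, 3)` array aligned with the mask); `segment_cloud` is a hypothetical helper name.

```python
import numpy as np

def segment_cloud(organized_points, mask):
    """Keep the points of an organized (H, W, 3) cloud that lie inside the mask."""
    finite = np.isfinite(organized_points).all(axis=2)
    has_depth = organized_points[..., 2] > 0        # drop pixels without a depth reading
    keep = mask.astype(bool) & finite & has_depth
    return organized_points[keep]

# Placeholder 2x2 organized cloud and object mask.
cloud = np.zeros((2, 2, 3))
cloud[0, 0] = [0.0, 0.0, 1.0]
cloud[0, 1] = [1.0, 0.0, 1.0]   # outside the mask
cloud[1, 0] = [0.0, 1.0, 0.0]   # inside the mask but without valid depth
cloud[1, 1] = [1.0, 1.0, 2.0]
mask = np.array([[True, False],
                 [True, True]])
object_points = segment_cloud(cloud, mask)
```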

We then register the previously scanned mesh of the object to this point cloud to obtain the object's accurate volume and position in space. The registration consists of a coarse global registration followed by ICP refinement.
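To illustrate the refinement stage only, here is a minimal point-to-point ICP sketch in NumPy. It is not our implementation: it uses brute-force nearest neighbours, omits the global registration stage, and assumes the initial pose is already roughly correct. Because the observed cloud is partial, the example aligns the observed points to the mesh samples and then inverts the result. All names and the synthetic data are hypothetical.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src_i + t approximates dst_i (Kabsch)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    d = 1.0 if np.linalg.det(Vt.T @ U.T) >= 0 else -1.0  # avoid reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    return R, dst_c - R @ src_c

def icp(src, dst, T_init=None, iters=30):
    """Point-to-point ICP; returns a 4x4 transform mapping src onto dst."""
    T = np.eye(4) if T_init is None else T_init.copy()
    cur = src @ T[:3, :3].T + T[:3, 3]
    for _ in range(iters):
        # Brute-force nearest neighbour in dst for every current source point.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_rigid_transform(cur, matched)
        cur = cur @ R.T + t
        step = np.eye(4)
        step[:3, :3], step[:3, 3] = R, t
        T = step @ T                                     # accumulate the update
    return T

# Synthetic stand-in data: mesh samples and a partial, slightly moved observation.
rng = np.random.default_rng(0)
mesh_pts = rng.uniform(-0.5, 0.5, size=(200, 3))
angle = np.deg2rad(3.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([0.01, -0.02, 0.015])
observed = (mesh_pts @ R_true.T + t_true)[:120]          # partial view of the object
T_obs_to_mesh = icp(observed, mesh_pts)
T_mesh_to_obs = np.linalg.inv(T_obs_to_mesh)             # pose of the mesh in the scene
```

In practice a library implementation with a spatial index (for example a k-d tree) would replace the brute-force neighbour search, which scales quadratically with the number of points.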

The object point cloud captured by the cameras is always incomplete. With the matched mesh, however, we can supplement the captured points to obtain a complete point cloud of the object.
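One way to supplement the captured points is to sample the mesh surface, move the samples into the scene with the registered pose, and append them to the observed cloud. The sketch below shows this under stated assumptions: the mesh is given as vertex and triangle arrays, `T_scene_mesh` is the 4x4 pose produced by registration, and the function names, sample count, and toy mesh are hypothetical.

```python
import numpy as np

def sample_mesh_surface(vertices, triangles, n, rng):
    """Draw n points uniformly from the surface of a triangle mesh."""
    a, b, c = (vertices[triangles[:, i]] for i in range(3))
    areas = 0.5 * np.linalg.norm(np.cross(b - a, c - a), axis=1)
    idx = rng.choice(len(triangles), size=n, p=areas / areas.sum())
    # The square root makes the barycentric samples uniform inside each triangle.
    r1, r2 = np.sqrt(rng.random(n)), rng.random(n)
    w = np.stack([1 - r1, r1 * (1 - r2), r1 * r2], axis=1)
    return w[:, [0]] * a[idx] + w[:, [1]] * b[idx] + w[:, [2]] * c[idx]

def complete_cloud(observed, vertices, triangles, T_scene_mesh, n=2000, seed=0):
    """Append mesh samples, placed with the registered pose, to the observed cloud."""
    rng = np.random.default_rng(seed)
    model = sample_mesh_surface(vertices, triangles, n, rng)
    model_in_scene = model @ T_scene_mesh[:3, :3].T + T_scene_mesh[:3, 3]
    return np.vstack([observed, model_in_scene])

# Toy mesh: a unit square in the z = 0 plane, registered 1 m up in the scene.
vertices = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0],
                     [1.0, 1.0, 0.0], [0.0, 1.0, 0.0]])
triangles = np.array([[0, 1, 2], [0, 2, 3]])
T_scene_mesh = np.eye(4)
T_scene_mesh[2, 3] = 1.0
observed = np.array([[0.5, 0.5, 1.0]])
completed = complete_cloud(observed, vertices, triangles, T_scene_mesh, n=500)
```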

3. Visualization

We use the point cloud obtained by this method as the input to the algorithm from our paper "Towards Human-like Grasping for Robotic Dexterous Hand" to plan the robot's motion, as shown in the video.