Grounding Large Language Models for Long-horizon Robot Mission Planning in osmAG

Zhang Jitian, Ren Wanqing, Ma Xu

Abstract

Large Language Models (LLMs) have demonstrated impressive language understanding abilities and have been applied to a variety of domains beyond traditional language tasks, including dialogue systems, visual understanding, code generation, embodied reasoning, and robot control. Building on their ability to comprehend complex natural language instructions, this project investigates the use of LLMs for robot mission planning and autonomous navigation, specifically within the information-rich and structured environment of a university campus. The approach combines the text-based map representation osmAG with the high-level understanding capabilities of LLMs. We add descriptive tags to osmAG and let the LLM interpret these descriptions when choosing navigation goals. This enables robots to handle complex, description-based queries and to plan paths over the map's topological structure.

Introduction

“Make me a coffee and place it on my desk.” The successful execution of such a seemingly straightforward command remains a daunting task for today’s robots. The associated challenges permeate every aspect of robotics, encompassing navigation, perception, manipulation, and high-level task planning. However, recent advances in Large Language Models (LLMs) have led to significant progress in incorporating common sense knowledge into robotics. This enables robots to plan complex strategies for a diverse range of tasks that require substantial background knowledge and semantic comprehension.

This project focuses on integrating LLMs into robotic systems, specifically for mission planning and autonomous navigation in complex and structured environments like university campuses. We employ a text-based map representation, osmAG, which contains both spatial structural information and descriptive information that can be interpreted by LLMs. By introducing a "description" tag in osmAG and leveraging the advanced understanding and reasoning capabilities of LLMs, our system can execute complex navigational tasks based on users' natural language instructions.
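To make the role of the "description" tag concrete, the following is a minimal sketch of how tagged areas might be read from an OSM-style XML map and turned into a prompt for an LLM. It is an illustration under stated assumptions, not the project's implementation: the XML fragment, the room names, the tag layout, and the helper names `extract_described_areas` and `build_prompt` are all hypothetical, and the call to an actual LLM is left out.

```python
import xml.etree.ElementTree as ET

# Hypothetical osmAG-style fragment. Room names, ids and the exact tag
# layout are illustrative assumptions, not taken from a real map.
OSMAG_XML = """
<osm>
  <way id="101">
    <tag k="name" v="Room A"/>
    <tag k="description" v="Laboratory for mobile robot mapping and navigation"/>
  </way>
  <way id="102">
    <tag k="name" v="Room B"/>
    <tag k="description" v="Office of a professor working on point cloud registration"/>
  </way>
  <way id="103">
    <tag k="name" v="Corridor C"/>
  </way>
</osm>
"""


def extract_described_areas(xml_text):
    """Return {area name: description} for every way carrying both tags."""
    root = ET.fromstring(xml_text.strip())
    areas = {}
    for way in root.iter("way"):
        tags = {t.get("k"): t.get("v") for t in way.findall("tag")}
        if "name" in tags and "description" in tags:
            areas[tags["name"]] = tags["description"]
    return areas


def build_prompt(areas, instruction):
    """Assemble a plain-text prompt listing the areas and the user request."""
    lines = [f"- {name}: {desc}" for name, desc in sorted(areas.items())]
    return (
        "You are a robot mission planner. Known areas:\n"
        + "\n".join(lines)
        + f"\nUser instruction: {instruction}\n"
        + "Answer with the name of the single best destination area."
    )


areas = extract_described_areas(OSMAG_XML)
prompt = build_prompt(
    areas, "I am interested in mobile robots. Take me to a related laboratory."
)
print(prompt)
```

Areas without a description (the corridor here) are simply left out of the prompt, which keeps the context short; whether that is the right choice depends on how the map is tagged.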

Specifically, this research explores two application scenarios: description-based task planning and topology-based path planning. In the first scenario, the LLM uses the descriptive tags to determine the best destination for a request. In the second, the LLM uses the topological structure in osmAG to plan a path that visits multiple target locations. Both scenarios test how well LLMs can interpret spatial descriptions and plan tasks based on them.
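In the second scenario the visiting order is produced by the LLM. For comparison, the sketch below shows a classical baseline over the same kind of topology: breadth-first search for hop-count shortest paths between areas, combined with a greedy nearest-target ordering. This is a swapped-in heuristic for illustration, not the method used in the project, and the graph, the area names, and the functions `shortest_path` and `plan_tour` are hypothetical.

```python
from collections import deque

# Hypothetical area graph: nodes are areas, edges are passages between them.
AREA_GRAPH = {
    "Lobby": ["Corridor"],
    "Corridor": ["Lobby", "Office 1", "Office 2", "Office 3"],
    "Office 1": ["Corridor"],
    "Office 2": ["Corridor", "Office 3"],
    "Office 3": ["Corridor", "Office 2"],
}


def shortest_path(graph, start, goal):
    """Breadth-first search; returns the list of areas from start to goal."""
    parents = {start: None}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for nxt in graph[node]:
            if nxt not in parents:
                parents[nxt] = node
                queue.append(nxt)
    raise ValueError(f"no route from {start} to {goal}")


def plan_tour(graph, start, targets):
    """Visit all targets, always going to the target with the fewest hops next."""
    route, current, remaining = [start], start, set(targets)
    while remaining:
        # Sorting first makes ties deterministic (alphabetical order).
        best = min(
            sorted(remaining),
            key=lambda t: len(shortest_path(graph, current, t)),
        )
        route += shortest_path(graph, current, best)[1:]
        remaining.discard(best)
        current = best
    return route


route = plan_tour(AREA_GRAPH, "Lobby", ["Office 3", "Office 1", "Office 2"])
print(" -> ".join(route))
```

The greedy ordering is not guaranteed to be optimal, but it is cheap to compute and gives a reference route against which an LLM-generated plan can be checked for missing or unreachable targets.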

Through this integration, we aim to develop a new type of robotic system capable of understanding complex task requirements, autonomously planning routes, and interacting with human users in a more natural and intuitive manner. This will not only advance the development of robotic technology in autonomous navigation but also open new possibilities for human-robot interaction, enabling robots to play a more significant role in education, research, and daily life.

Robotics is becoming increasingly prevalent across many sectors, bringing new conveniences and possibilities to daily life and work. Motivated by our interest in Large Language Models and by our robotics coursework, this project applies what we have learned about robotics principles to task planning for robots.

Experiment

- Please take me to Shen Yongxia's office.

- Please bring this book to Xinzhe Liu.

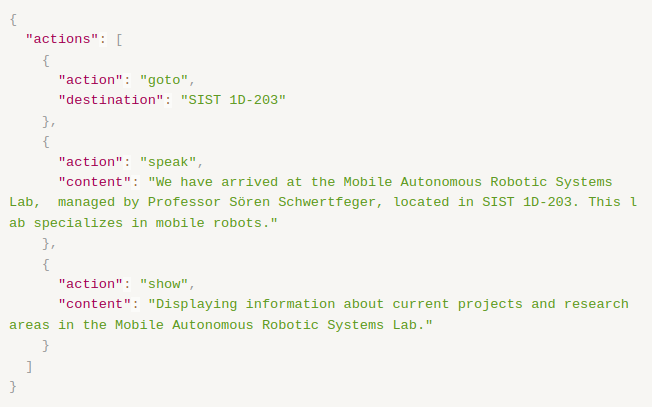

- I am very interested in mobile robots. Please take me to visit a related laboratory.

- I don't know much about ICP. Please take me to a professor's office to ask for advice.

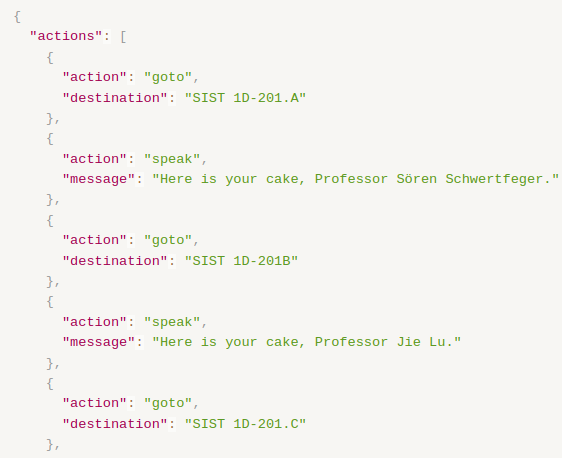

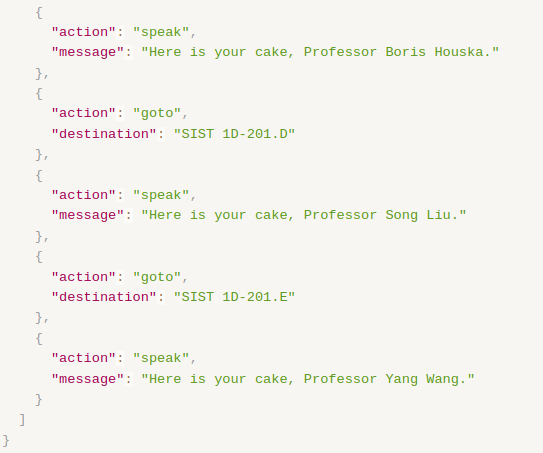

- Please send cakes to all professors' offices on the second floor in area D.

Conclusion

In this project, we used a large language model for robot mission planning and tested it as the planner for a delivery robot in a simulated environment. By assigning the robot the task of delivering ten cakes to professors' offices on the second floor of the Star Center, we validated the large language model's ability to interpret instructions and make decisions in a complex environment.