Kiwifruit Harvest Project Report

Group: Kiwi Group

- Member 1: Yanming Shao, shaoym2023@shanghaitech.edu.cn

- Member 2: Xiyan Huang, huangxy20232@shanghaitech.edu.cn

Date: January 28, 2024

1. Introduction

Conventional fruit harvesting is labor-intensive. Kiwifruit cultivation, for example, relies heavily on seasonal manual labor, which creates an economic case for robotic harvesting. Field harvesting robots are in demand, but they still face open challenges in computer vision, mobility, control, and manipulation.

Developing a fruit-harvesting robot for a course project presents several challenges:

- Environment Uncertainty: Robots operate in unstructured environments requiring robust algorithms.

- Vision-Based Decision Making: Requires synchronizing vision and motion for path planning and obstacle avoidance.

- Software and Hardware Integration: Demands integration of image processing, control algorithms, sensors, grippers, and robotic arms.

This project tests technical skills and the ability to integrate diverse systems.

1.1 Our Approach

We built a kiwi harvesting robot from a Bunker mobile platform and a Dobot CR5 arm. A NUC running Ubuntu 20.04 serves as the computation host, and a RealSense RGB-D camera captures images. The Computer Vision module is a lightweight Grasp Pose Detection (GPD) pipeline that uses a CNN to generate and score grasp poses from point cloud data. The arm's motion is planned with RRT via MoveIt!, and a separate script controls the gripper. Components communicate over ROS, integrating rospy and C++ scripts.

1.2 Related Works

Some recent works transport harvested fruit through a "tube" mechanism, while others compare different end-effector designs. Our approach uses a gripper, which requires precise motion planning. Whereas some methods rely on computationally heavy reconstruction algorithms, we opted for a lightweight, end-to-end pipeline that requires no intermediate image segmentation.

2. System Description

Our mobile robotic system (see Figure 1) consists of:

- NUC: The compact computational host.

- Bunker: A tracked mobile platform for navigation.

- Robot Arm: A six-DoF arm for precise positioning.

- Two-Finger Gripper: Parallel gripper with controllable speed, force, and position.

- LIDAR and Depth Camera: For mapping, navigation, and real-time point cloud retrieval.

Figure 1: Our Robotic System

3. Method

Our method involves four main steps: 1) point cloud retrieval and analysis, 2) grasp pose generation, 3) coordinate transformations, and 4) motion planning.

3.1 Computer Vision and Grasp Detection

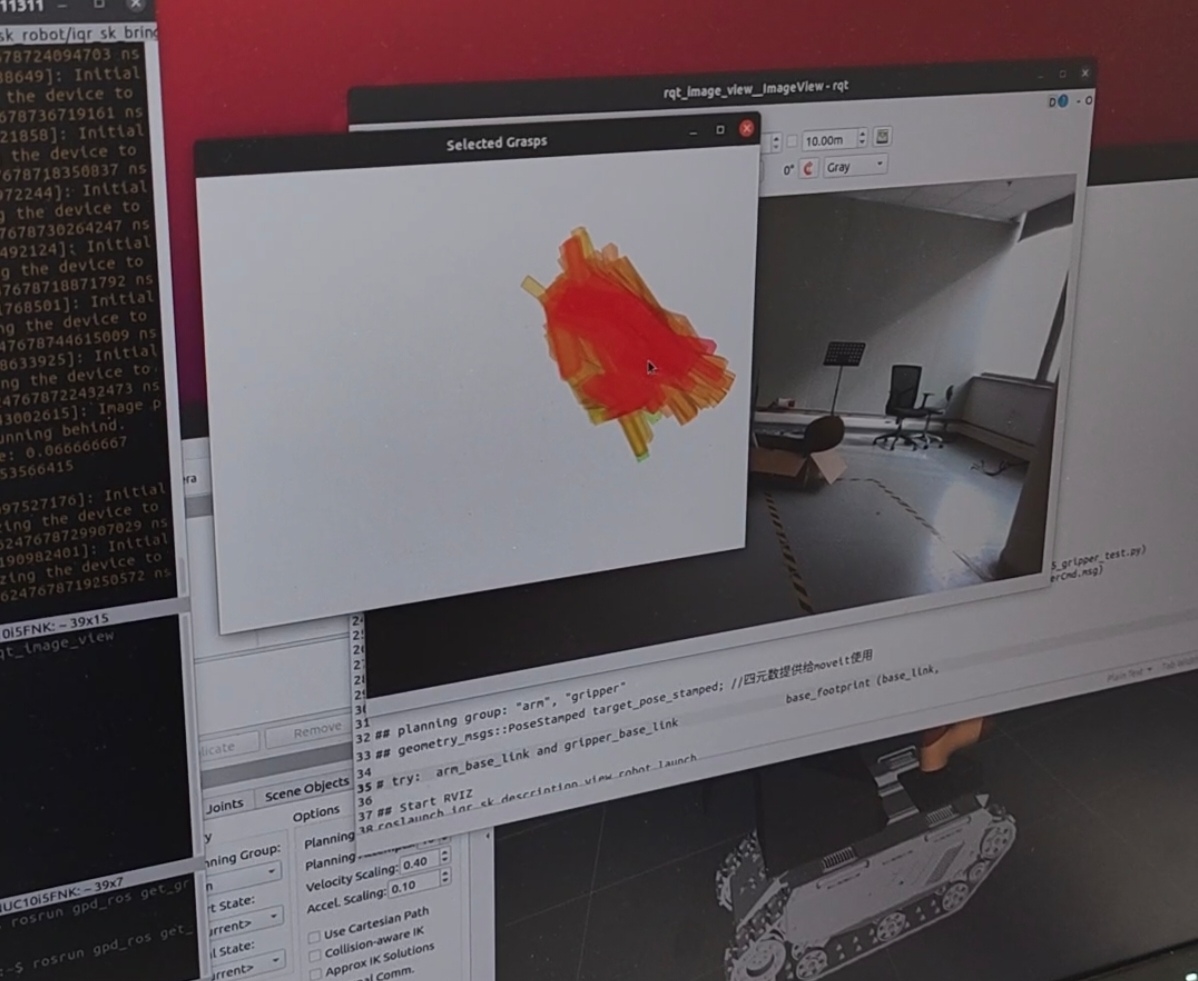

Determining the correct grasp pose is crucial for adapting to different fruit sizes and orientations. We use a Convolutional Neural Network (CNN) within the Grasp Pose Detection (GPD) framework to process 3D point cloud data and generate reliable grasp proposals. Figure 2 visualizes grasp candidates, with deeper colors indicating higher scores.
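To make "adapting to different fruit sizes" concrete, the sketch below checks a single grasp candidate in a simplified way loosely modeled on GPD's candidate test: a hand pose is kept only if some points lie between the fingers and none lie inside the finger volumes. It is not GPD's implementation. The hand dimensions, the column order of the rotation matrix, and the helper names (`check_candidate`, `sample_sphere`) are assumptions for illustration, and the palm is not collision-checked.

```python
import numpy as np

# Hypothetical hand geometry in metres: placeholders, not the real gripper's dimensions.
HAND_DEPTH = 0.05     # finger length along the approach axis
HAND_HEIGHT = 0.02    # finger extent along the hand axis
FINGER_WIDTH = 0.01   # finger thickness along the closing direction
APERTURE = 0.085      # distance between the inner faces of the open fingers


def to_hand_frame(points, position, rotation):
    """Express an (N, 3) point array in the hand frame.

    `rotation` is assumed to hold the hand axes as columns:
    [approach, binormal (closing direction), axis].
    """
    return (points - position) @ rotation


def check_candidate(points, position, rotation):
    """Return (valid, width) for one candidate hand pose.

    A candidate is valid only if some points lie between the fingers and
    no points lie inside the finger volumes (i.e. the fruit fits).
    """
    local = to_hand_frame(points, position, rotation)
    # Slab swept by the fingers: along the approach axis and within the hand height.
    slab = (
        (local[:, 0] >= 0.0) & (local[:, 0] <= HAND_DEPTH)
        & (np.abs(local[:, 2]) <= HAND_HEIGHT / 2.0)
    )
    lateral = np.abs(local[:, 1])
    between = slab & (lateral <= APERTURE / 2.0)
    in_fingers = slab & (lateral > APERTURE / 2.0) & (lateral <= APERTURE / 2.0 + FINGER_WIDTH)
    if not between.any() or in_fingers.any():
        return False, 0.0
    # Opening width actually needed to enclose the points in the closing region.
    width = float(local[between, 1].max() - local[between, 1].min())
    return True, width


def sample_sphere(center, radius, n_theta=61, n_phi=120):
    """Synthetic stand-in for a fruit surface: points on a sphere."""
    theta, phi = np.meshgrid(np.linspace(0.0, np.pi, n_theta),
                             np.linspace(0.0, 2.0 * np.pi, n_phi), indexing="ij")
    unit = np.stack([np.sin(theta) * np.cos(phi),
                     np.sin(theta) * np.sin(phi),
                     np.cos(theta)], axis=-1).reshape(-1, 3)
    return np.asarray(center) + radius * unit
```

For example, with the identity hand pose at the origin, a sphere of radius 3 cm centered 3 cm along the approach axis passes the check with a required width of about 6 cm, whereas a sphere of radius 5 cm collides with the fingers and is rejected.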

Figure 2: Grasp Proposal Visualization

The process includes:

- Preprocessing: The raw point cloud is cleaned and downsampled (noise removal, voxelization, filtering).

- Candidate Generation: Thousands of potential grasp poses are sampled from the point cloud.

- Feature Extraction: Geometric features are extracted for each candidate.

- Classification: A pretrained classifier scores each candidate's viability.

- Post-processing: Top-ranked poses are refined and checked for collisions and reachability, yielding the final grasp pose.
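The preprocessing and post-processing steps above can be sketched with NumPy as follows. This is a minimal illustration, not the project's code: noise removal and the CNN scoring step are omitted (the scores are taken as given), and `within_reach` is a hypothetical spherical reachability test with a placeholder radius rather than a real inverse-kinematics check.

```python
import numpy as np


def crop_workspace(points, lower, upper):
    """Keep only points inside an axis-aligned box (e.g. the region in front of the camera)."""
    mask = np.all((points >= lower) & (points <= upper), axis=1)
    return points[mask]


def voxel_downsample(points, voxel_size):
    """Replace all points that fall in the same voxel by their centroid."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # some NumPy versions return a non-flat inverse
    counts = np.bincount(inverse)
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate the points of each voxel
    return sums / counts[:, None]


def within_reach(position, base=(0.0, 0.0, 0.0), radius=0.8):
    """Hypothetical reachability test: a sphere around the arm base (placeholder radius)."""
    return float(np.linalg.norm(np.asarray(position) - np.asarray(base))) <= radius


def select_grasp(scores, positions, is_reachable, top_k=10, min_score=0.0):
    """Return the index of the best-scoring reachable candidate among the top k, or None."""
    scores = np.asarray(scores)
    for idx in np.argsort(scores)[::-1][:top_k]:
        if scores[idx] < min_score:
            break  # all remaining candidates score even lower
        if is_reachable(positions[idx]):
            return int(idx)
    return None
```

Scanning only the top k candidates keeps the reachability checks cheap; on the robot, a full inverse-kinematics or MoveIt! feasibility check would take the place of `within_reach`.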

3.2 Grasp Motion Planning and Gripper Control

3.2.1 Coordinate Transformation

A rospy script expresses the gripper pose in the camera's coordinate frame as a position vector and a rotation quaternion. ROS's tf library handles the spatial transformations between frames.
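Converting a detected grasp into a position and quaternion, and re-expressing it in another frame, amounts to building and composing 4×4 homogeneous transforms. The sketch assumes the grasp orientation is given as three orthonormal axis vectors in the camera frame (GPD's grasp messages describe orientation with approach, binormal, and axis vectors) and that the camera-to-target transform is already known; on the robot it would come from tf rather than being hard-coded. Quaternions use the (x, y, z, w) order of ROS messages. The function names are illustrative.

```python
import numpy as np


def make_transform(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def quaternion_from_matrix(R):
    """Convert a 3x3 rotation matrix to a unit quaternion in (x, y, z, w) order."""
    trace = np.trace(R)
    if trace > 0.0:
        s = 2.0 * np.sqrt(trace + 1.0)  # s = 4w
        w, x = 0.25 * s, (R[2, 1] - R[1, 2]) / s
        y, z = (R[0, 2] - R[2, 0]) / s, (R[1, 0] - R[0, 1]) / s
    elif R[0, 0] > R[1, 1] and R[0, 0] > R[2, 2]:
        s = 2.0 * np.sqrt(1.0 + R[0, 0] - R[1, 1] - R[2, 2])  # s = 4x
        w, x = (R[2, 1] - R[1, 2]) / s, 0.25 * s
        y, z = (R[0, 1] + R[1, 0]) / s, (R[0, 2] + R[2, 0]) / s
    elif R[1, 1] > R[2, 2]:
        s = 2.0 * np.sqrt(1.0 + R[1, 1] - R[0, 0] - R[2, 2])  # s = 4y
        w, x = (R[0, 2] - R[2, 0]) / s, (R[0, 1] + R[1, 0]) / s
        y, z = 0.25 * s, (R[1, 2] + R[2, 1]) / s
    else:
        s = 2.0 * np.sqrt(1.0 + R[2, 2] - R[0, 0] - R[1, 1])  # s = 4z
        w, x = (R[1, 0] - R[0, 1]) / s, (R[0, 2] + R[2, 0]) / s
        y, z = (R[1, 2] + R[2, 1]) / s, 0.25 * s
    q = np.array([x, y, z, w])
    return q / np.linalg.norm(q)


def grasp_pose_in_frame(T_target_cam, position, approach, binormal, axis):
    """Express a camera-frame grasp in a target frame as (position, quaternion)."""
    # Columns of the rotation are the hand axes expressed in the camera frame.
    R_cam_grasp = np.column_stack([approach, binormal, axis])
    T_cam_grasp = make_transform(R_cam_grasp, position)
    # Chain the transforms: target <- camera <- grasp.
    T_target_grasp = T_target_cam @ T_cam_grasp
    return T_target_grasp[:3, 3], quaternion_from_matrix(T_target_grasp[:3, :3])
```

In the running system, the camera-to-target transform would be looked up through tf (for example with a `tf2_ros.Buffer`), and the resulting position and quaternion would be packed into a pose message for MoveIt!.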

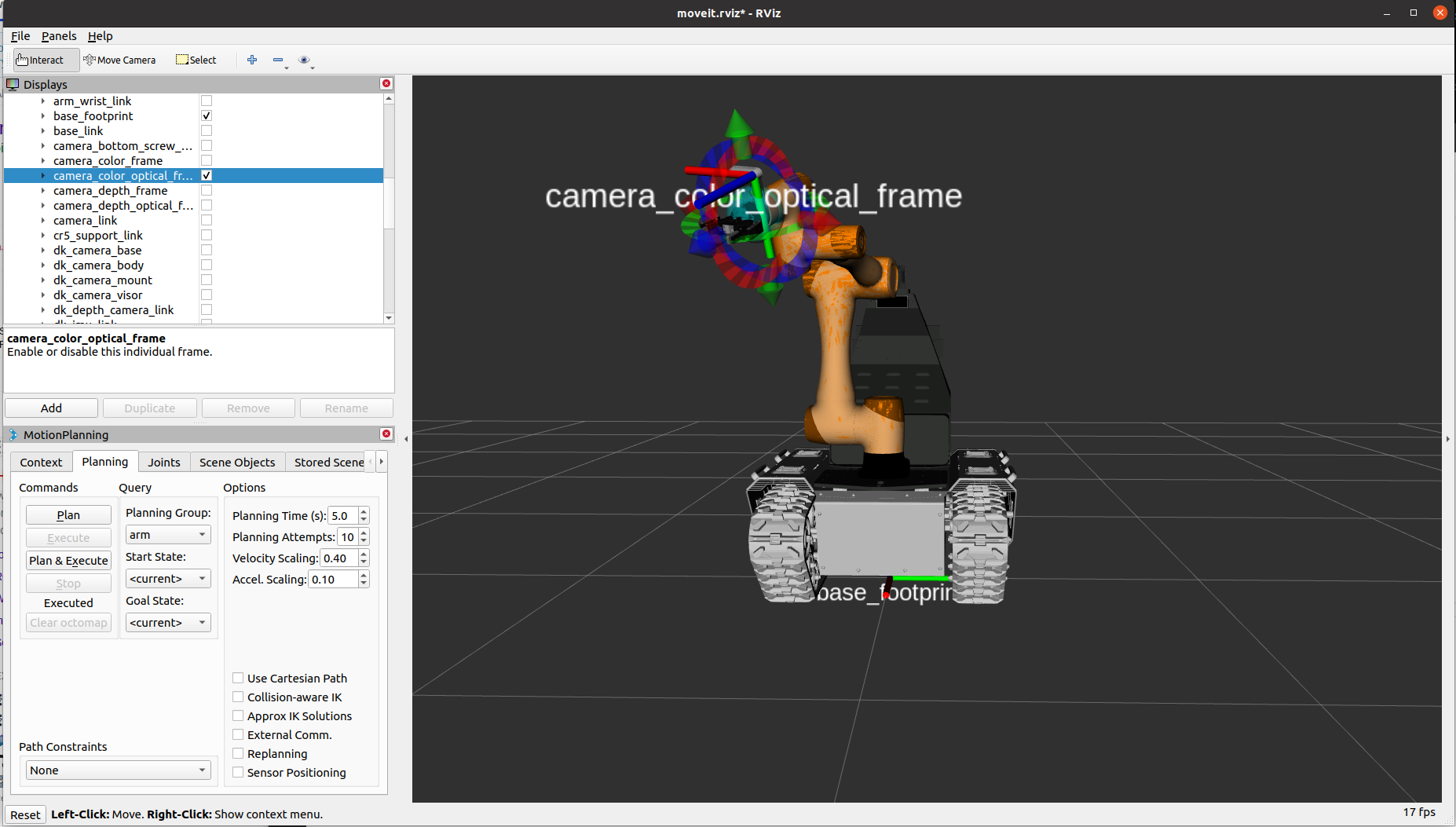

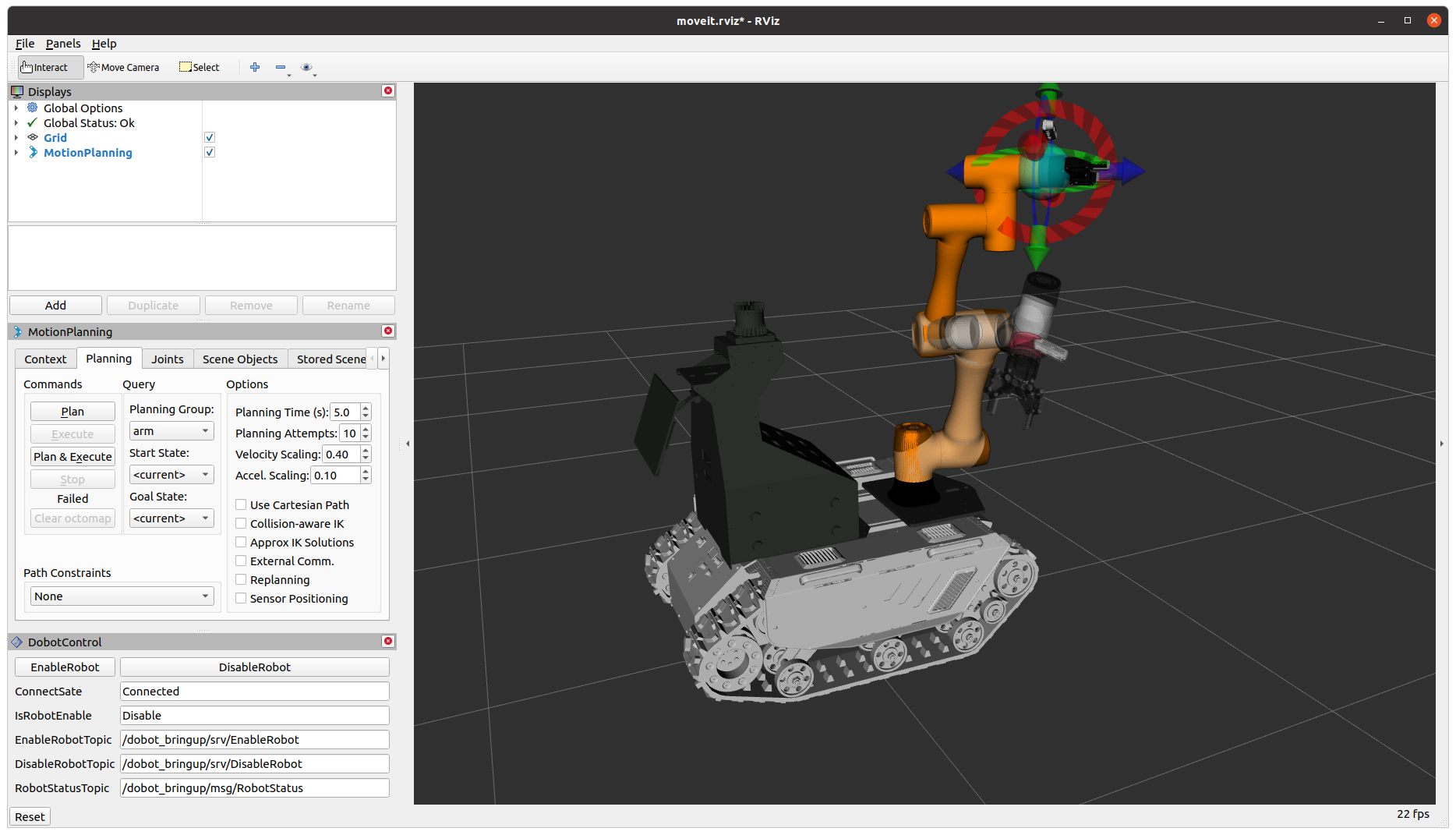

3.2.2 Integration with MoveIt! and Motion Planning

MoveIt! controls the position of the Dobot CR5 end effector (see Figure 3). Grasp coordinates are first transformed from the camera frame to the robot's "base footprint" frame, and the Rapidly-exploring Random Tree (RRT) algorithm then plans a collision-free motion path to the target. A separate rospy script controls the gripper, closing it with a force of 0.1 N to grasp the fruit.
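The core RRT loop (sample, find the nearest tree node, steer a bounded step toward the sample, keep the edge if it is collision-free) is shown below on a toy 2-D problem. It illustrates the algorithm only: MoveIt! delegates planning to planner libraries such as OMPL, and for an arm the search typically runs in the 6-D joint space with collision checks against the robot and scene models. The step size, goal bias, and tolerance values are arbitrary placeholders.

```python
import math
import random


def segment_is_free(a, b, is_free, resolution=0.01):
    """Check a straight segment by testing points spaced at most `resolution` apart."""
    n = max(1, math.ceil(math.dist(a, b) / resolution))
    return all(
        is_free(tuple(p + (q - p) * i / n for p, q in zip(a, b)))
        for i in range(n + 1)
    )


def rrt(start, goal, is_free, bounds, step=0.1, goal_tol=0.1,
        goal_bias=0.05, max_iters=5000, seed=0):
    """Plan a path from start to goal with a basic RRT; return a list of points or None."""
    rng = random.Random(seed)
    goal = tuple(goal)
    nodes = [tuple(start)]
    parents = [None]
    for _ in range(max_iters):
        # 1. Sample a random configuration, occasionally the goal itself (goal bias).
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
        # 2. Find the nearest tree node (linear scan, fine for a toy example).
        near_idx = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        near = nodes[near_idx]
        dist = math.dist(near, sample)
        if dist == 0.0:
            continue
        # 3. Steer: move at most `step` from the nearest node toward the sample.
        t = min(1.0, step / dist)
        new = tuple(a + t * (b - a) for a, b in zip(near, sample))
        # 4. Keep the new node only if the connecting edge is collision-free.
        if not segment_is_free(near, new, is_free):
            continue
        nodes.append(new)
        parents.append(near_idx)
        # 5. Stop once the goal can be reached directly from the new node.
        if math.dist(new, goal) <= goal_tol and segment_is_free(new, goal, is_free):
            nodes.append(goal)
            parents.append(len(nodes) - 2)
            path, idx = [], len(nodes) - 1
            while idx is not None:  # walk back to the root
                path.append(nodes[idx])
                idx = parents[idx]
            return path[::-1]
    return None
```

For example, planning from (0.1, 0.1) to (0.9, 0.9) in the unit square around a disc obstacle of radius 0.2 centered at (0.5, 0.5) should return a collision-free list of waypoints. RRT paths are feasible but not optimal, which is why planners often shortcut or smooth them afterwards.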

Figure 3: MoveIt! and rViz

4. Results and Demo Videos

4.1 GPD Visualization

The following video demonstrates our Grasp Pose Detection (GPD) system in action, showing how the algorithm identifies potential grasp points on kiwi fruits.

4.2 Gripper Operation

This video shows the gripper operation during the fruit harvesting process.

4.3 Complete Demo

This video shows a complete run of our kiwi fruit harvesting robot.

4.4 Failure Case Analysis

This video shows a failure case and our analysis of what went wrong.

5. Conclusion

Our kiwi fruit harvesting robot demonstrates the integration of computer vision, motion planning, and robotic control: the system generates grasp poses on kiwi fruits from point cloud data, plans arm motions to reach them, and executes the harvesting action. Challenges remain, as the failure case in Section 4.4 illustrates, but this project provides a solid foundation for future development of agricultural robotics.