A project of the Robotics 2023 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Bowen Xu, Tao Liu

Abstract

Cameras and LiDARs provide complementary information about the environment. Cameras capture color, texture and appearance, while LiDARs supply 3D structure. They are therefore often used jointly for robot perception. We present a general sensor calibration method that can be used out of the box. The method uses AprilTags to distinguish the calibration targets. Together with a multi-target calibration system, it jointly calibrates the target poses in the world frame and the extrinsics of multiple sensors, regardless of whether their FoVs overlap. Each calibration target consists of two reflective tapes and a 6x6 grid of AprilTags, which provide the pose estimates for the LiDARs and the cameras, respectively.

Introduction

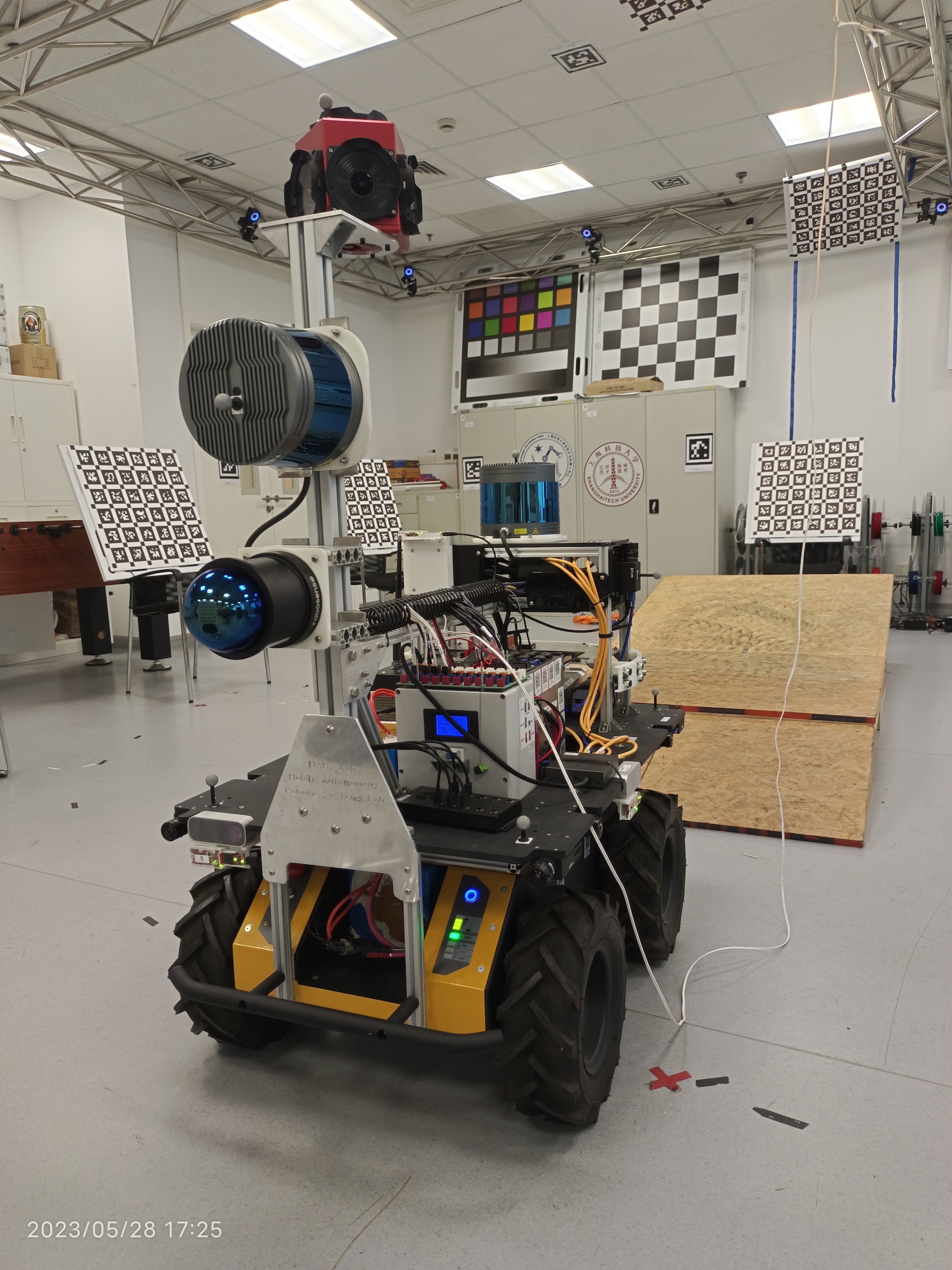

The ShanghaiTech MARS Mapping Robot II is equipped with multiple sensors of various types, covering almost all surrounding directions as well as looking up and down. To use such a robot for localization or mapping, it is essential to calibrate all the sensors, obtaining both their intrinsics and their extrinsics. While intrinsic calibration can be done sensor by sensor, extrinsic calibration involves measuring the transformations between sensors. Pairwise extrinsic calibration approaches hardly fit the Mapping Robot II, since it carries 19 cameras, 5 LiDARs, 5 RGBD sensors, 2 event cameras as well as other sensors. To achieve high accuracy while saving time and labor, we want to calibrate the extrinsics of all sensors at once. Our project aims to build an out-of-the-box toolkit for this kind of extrinsic calibration.

System Description

Hardware preparation on Mapping Robot II

Mapping Robot II has 4 LiDARs in total. Two of them are of model RS-Bpearl, while the other two are of model RS-Ruby. All the LiDARs are connected by Ethernet cables to the main computing node through a dedicated network switch. Each LiDAR is configured with its own IP address and sends point cloud data to the main computing node through a dedicated port. The LiDARs receive GPS timestamps and a Pulse-Per-Second signal through cables from the synchronization board. The main computing node receives the point cloud data and stores it on its local SSD.

To get a precise pose of the robot, we also use the tracking system. With markers attached to the robot, the system provides a high-precision pose of the robot as a rigid body.

Calibration environment and Dataset recording

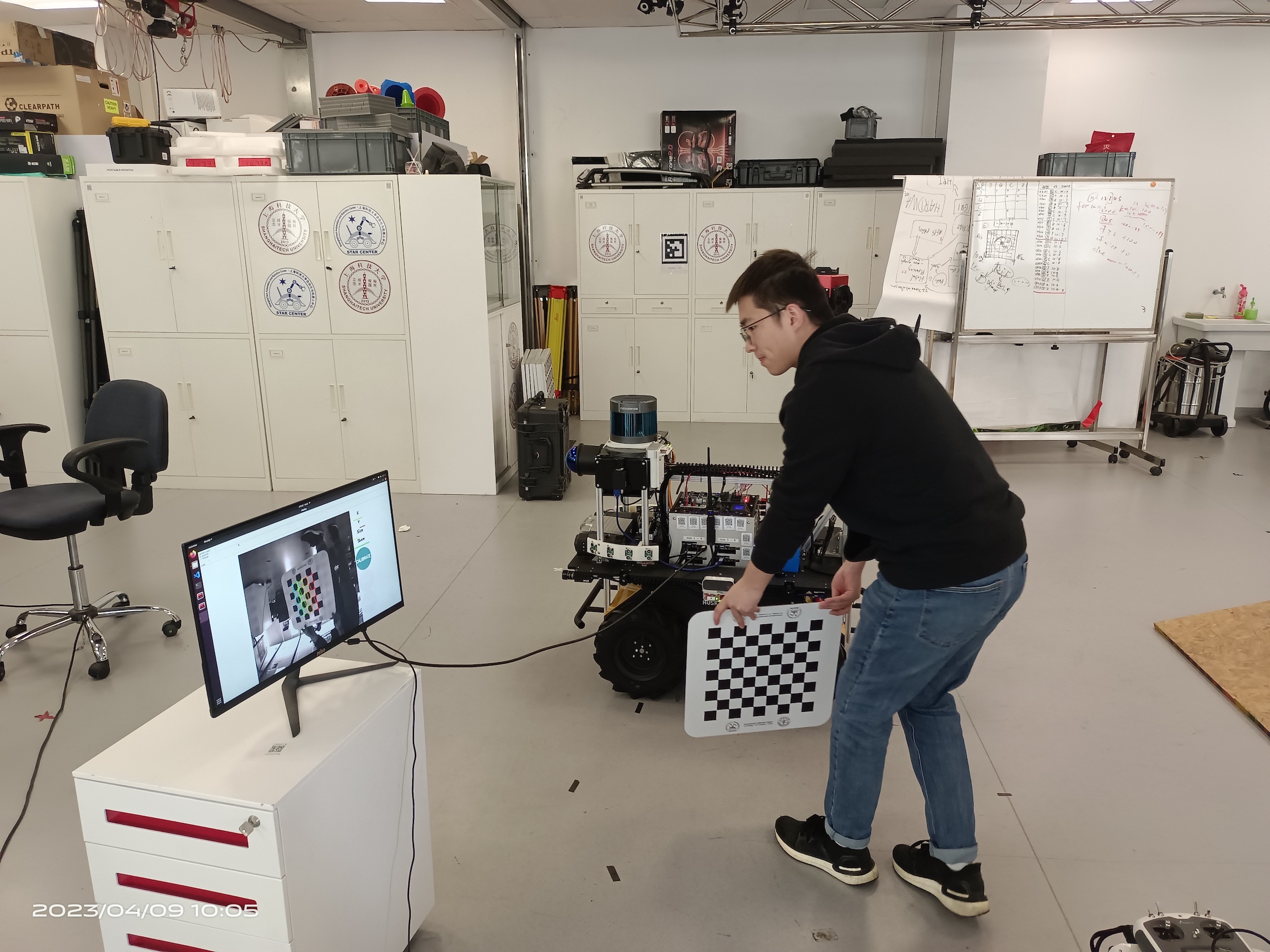

Our toolkit uses AprilGrid target boards, which consist of AprilTags arranged in a chessboard pattern with reflective tape along the edges of the board. The target boards should be distributed evenly in the scene so that sensors with different orientations can observe them. Since there are cameras facing upward and LiDARs mounted vertically, target boards hung overhead are also needed.
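To make the board geometry concrete, the following is a minimal sketch of how the 3D corner coordinates of a 6x6 AprilGrid could be generated in the board frame. The function name, the tag size (0.088 m), the spacing ratio (0.3) and the id and corner ordering are illustrative assumptions, not values taken from our boards.

```python
def aprilgrid_corners(rows=6, cols=6, tag_size=0.088, spacing_ratio=0.3):
    """Return {tag_id: [4 corners as (x, y, z)]} in the board frame, in metres.

    Assumed layout (hypothetical): tag 0 sits at the bottom-left, ids increase
    along each row, and corners are listed counter-clockwise starting at the
    bottom-left corner of each tag. The board plane is z = 0.
    """
    pitch = tag_size * (1.0 + spacing_ratio)  # distance between tag origins
    corners = {}
    for r in range(rows):
        for c in range(cols):
            x0, y0 = c * pitch, r * pitch
            corners[r * cols + c] = [
                (x0, y0, 0.0),
                (x0 + tag_size, y0, 0.0),
                (x0 + tag_size, y0 + tag_size, 0.0),
                (x0, y0 + tag_size, 0.0),
            ]
    return corners


board = aprilgrid_corners()
print(len(board), "tags,", 4 * len(board), "corner points")
# prints: 36 tags, 144 corner points
```

Because every detected tag carries its own id, a camera that sees only part of a board can still match the detected corners to these board-frame coordinates.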

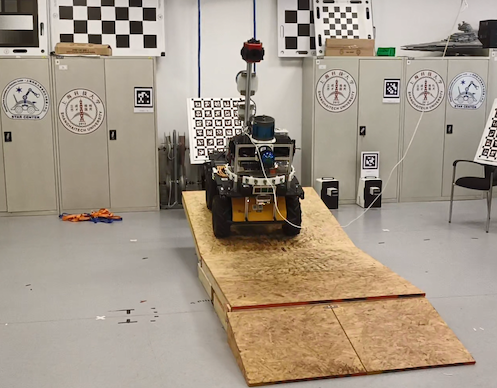

The robot should move around, and also rotate and tilt, in the scene so that the dataset covers a variety of translations and rotations. Guidance on how to move the robot to meet the requirement.

Calibration Program

Before calibrating the extrinsic parameters, we need to calibrate the intrinsics of all cameras. For this we use Zhang's method.
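As a brief reminder of the idea behind Zhang's method, the relations below sketch its core constraints. The symbols are notation introduced here for illustration: $K$ is the intrinsic matrix, $r_1, r_2$ are the first two columns of the rotation, $t$ is the translation of one view, and $H$ is the homography between the planar target ($Z = 0$) and the image.

$$
s \begin{bmatrix} u \\ v \\ 1 \end{bmatrix} = K \begin{bmatrix} r_1 & r_2 & t \end{bmatrix} \begin{bmatrix} X \\ Y \\ 1 \end{bmatrix},
\qquad
H = \begin{bmatrix} h_1 & h_2 & h_3 \end{bmatrix} = \lambda K \begin{bmatrix} r_1 & r_2 & t \end{bmatrix}
$$

$$
h_1^\top K^{-\top} K^{-1} h_2 = 0,
\qquad
h_1^\top K^{-\top} K^{-1} h_1 = h_2^\top K^{-\top} K^{-1} h_2
$$

The two constraints follow from $r_1$ and $r_2$ being orthonormal. Each view of the planar target contributes two linear equations in the entries of the symmetric matrix $K^{-\top} K^{-1}$, so three or more views in general position are enough to solve for a five-parameter $K$. The closed-form result is then refined, together with the lens distortion, by minimizing the reprojection error.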

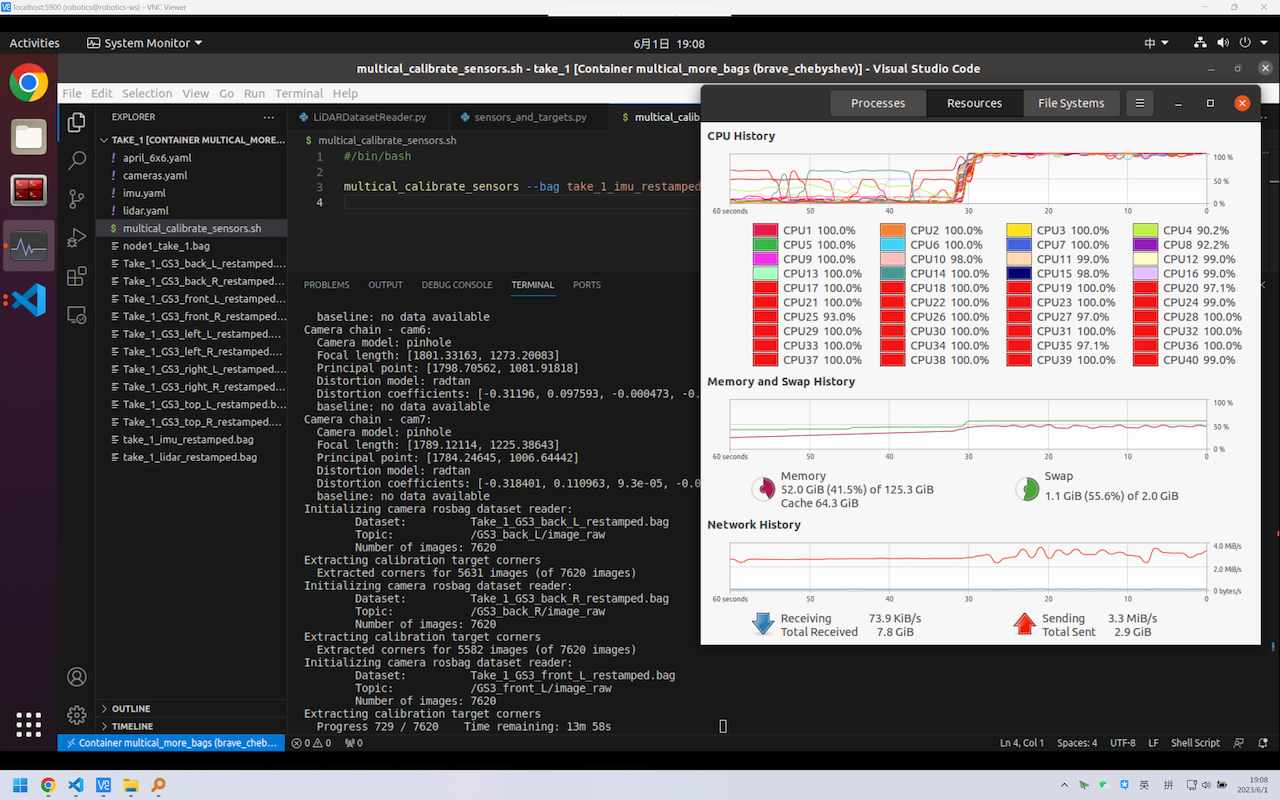

The calibration program uses the collected sensor data together with the sensor intrinsics to compute the extrinsics, following the method introduced in Multical: Spatiotemporal Calibration for Multiple IMUs, Cameras and LiDARs. An initial calibration using graph optimization is carried out first, followed by a further non-linear optimization.
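The sketch below illustrates, in a much simplified form, how an initial extrinsic guess can be obtained by chaining poses through a shared calibration target. It is not the Multical implementation: the helper names and all pose values are hypothetical, and a real pipeline would combine many such observations in a graph before the non-linear refinement.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 homogeneous transforms given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def invert_rigid(t):
    """Invert a rigid transform [R | p] as [R^T | -R^T p]."""
    r_t = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [-sum(r_t[i][k] * t[k][3] for k in range(3)) for i in range(3)]
    return [r_t[i] + [p[i]] for i in range(3)] + [[0.0, 0.0, 0.0, 1.0]]


def make_transform(yaw, x, y, z):
    """Build a transform from a rotation about z (radians) and a translation."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x], [s, c, 0.0, y],
            [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]


# Hypothetical observations of the same board at the same time instant.
T_cam_board = make_transform(0.3, 0.5, 0.0, 2.0)     # board pose in camera frame
T_lidar_board = make_transform(-0.2, 1.0, 0.4, 1.5)  # board pose in LiDAR frame

# Chain camera <- board <- LiDAR to get the LiDAR pose in the camera frame.
T_cam_lidar = matmul(T_cam_board, invert_rigid(T_lidar_board))
```

For sensors whose FoVs do not overlap, the same kind of chain can run through different boards, using the board poses in the world frame as the intermediate links.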

System Evaluation

The calibration toolkit will be evaluated in terms of accuracy and ease of use.

The calculated extrinsics will be compared against ground truth, and their accuracy should be comparable to that of state-of-the-art approaches.
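One common way to quantify such accuracy is to report a rotation error and a translation error between an estimated transform and its ground truth. The sketch below shows these two metrics under that assumption; the function name and the example values are hypothetical, and the metrics finally used in the evaluation may differ.

```python
import math


def pose_error(T_est, T_gt):
    """Return (rotation error in degrees, translation error in input units).

    Both arguments are 4x4 homogeneous transforms given as nested lists.
    """
    # trace(R_gt^T * R_est) = 1 + 2 * cos(angle of the relative rotation)
    trace = sum(T_gt[k][i] * T_est[k][i] for i in range(3) for k in range(3))
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))  # guard against rounding
    rot_err_deg = math.degrees(math.acos(cos_angle))
    # Euclidean distance between the two translation vectors
    trans_err = math.sqrt(sum((T_est[i][3] - T_gt[i][3]) ** 2 for i in range(3)))
    return rot_err_deg, trans_err


# Hypothetical example: identity ground truth, estimate off by 0.01 rad and 5 mm.
yaw = 0.01
T_gt = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0],
        [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]
T_est = [[math.cos(yaw), -math.sin(yaw), 0.0, 0.003],
         [math.sin(yaw), math.cos(yaw), 0.0, 0.004],
         [0.0, 0.0, 1.0, 0.0], [0.0, 0.0, 0.0, 1.0]]

rot_err, trans_err = pose_error(T_est, T_gt)
print(f"rotation error: {rot_err:.3f} deg, translation error: {trans_err * 1000:.1f} mm")
# prints: rotation error: 0.573 deg, translation error: 5.0 mm
```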

The toolkit should have a user-friendly GUI. After the user specifies the dataset and the intrinsics, it should carry out the calibration automatically, with no human intervention required.

Video