A project of the Robotics 2020 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Heng Zhang, Yuqi Pan, Chen Yu

Abstract

Collecting sample material for analysis is an important way to study an unknown area or even another planet, and it typically relies on hand-tuned control of a robotic manipulator. However, hand-tuning is not enough for exploring an unknown planet: the environment is usually unpredictable, so the optimal controller cannot be known in advance. In this work, our robot instead learns to scoop up sample material by trial and error. We propose a reinforcement learning method that takes its state representation directly from camera images and its reward signal from the amount of sample collected. Our robotic system can eventually execute a policy that efficiently collects samples from a sand dune with a shovel in an arbitrary environment.

Introduction

Sample material collection is one of the most important tasks for robots in space exploration; NASA's Curiosity Mars rover, for example, is adding some sample-processing moves not previously used on Mars. More generally, manipulation such as pushing and grasping has always been a crucial topic in the robotics community. However, up to now, manipulator controllers have primarily been designed and tuned by human engineers. This usually leads to two problems. First, programming robots is a tiresome task that requires extensive experience and a high degree of expertise. Second, hand-tuned controllers generalize poorly, so they can only complete the task in the specific environment they were tuned for. For example, it is difficult for a controller hard-coded for shoveling sand to pick up rocks. The motivation of this project is to use learning-based methods such as reinforcement learning to help a robot adapt better to a new environment. In this project, we successfully set up all the software and hardware needed for this task. First, the robot maps the environment with its LiDAR sensor. Then it obtains an image with depth information from the stereo camera on its back. Finally, it runs a scooping routine to take a portion of the dune and collects the sample into a container with the manipulator.

System Description

Hardware

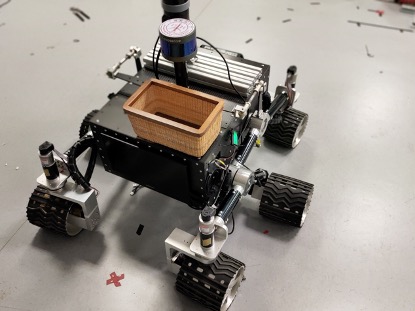

The physical structure of our project consists of two main parts: a mobile robot (Fig. 1) and a manipulator (Fig. 2) mounted on it. The mobile robot has 6 wheels and 10 motors. Six motors are velocity-controlled and drive the wheels so that the robot moves at a constant speed; the other four are position-controlled and steer the wheels so that the robot turns to a target angle. An Intel NUC Mini PC and a Raspberry Pi single-board computer are installed in the body of the robot. In addition, a shovel is attached to the tip of the manipulator, and a box is mounted on the mobile platform for sand collection. With all of these installed, our tasks can be performed as shown in Fig. 3.

Figure 1: A photo of our 6-wheel mobile platform.

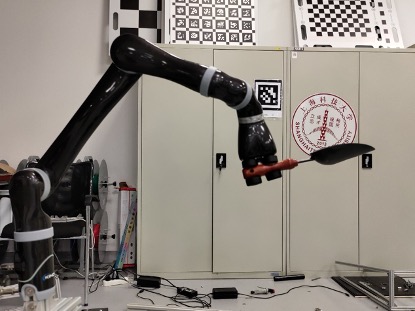

Figure 2: A photo of the manipulator mounted on the mobile platform.

Figure 3: A photo of our robot performing the shoveling task.
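
As an illustration of the motor layout described above (six velocity-controlled drive motors and four position-controlled steering motors), the following is a minimal sketch of how a single motion command might be split into the ten motor setpoints. It is not the project's actual controller: the names `DriveCommand` and `make_drive_command`, the steering limit `MAX_STEER_DEG`, the motor ordering, and the choice to steer the rear wheels opposite to the front wheels are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import List

NUM_DRIVE_MOTORS = 6    # velocity-controlled, one per wheel (from the text)
NUM_STEER_MOTORS = 4    # position-controlled steering motors (from the text)
MAX_STEER_DEG = 45.0    # hypothetical mechanical steering limit


@dataclass
class DriveCommand:
    wheel_velocities: List[float]   # one velocity setpoint per drive motor
    steer_angles_deg: List[float]   # one angle setpoint per steering motor


def make_drive_command(speed: float, turn_deg: float) -> DriveCommand:
    """Split a (speed, turn angle) request into the ten motor setpoints."""
    # Clamp the requested angle to the assumed steering range.
    turn = max(-MAX_STEER_DEG, min(MAX_STEER_DEG, turn_deg))
    # All six wheels receive the same constant velocity setpoint.
    velocities = [speed] * NUM_DRIVE_MOTORS
    # Assumed order: front-left, front-right, rear-left, rear-right.
    # Rear wheels steer opposite to the front wheels (an assumption).
    steer = [turn, turn, -turn, -turn]
    return DriveCommand(velocities, steer)


if __name__ == "__main__":
    cmd = make_drive_command(speed=0.5, turn_deg=20.0)
    print(cmd.wheel_velocities)
    print(cmd.steer_angles_deg)
```

A real controller would likely also account for the different turning radii of the individual wheels; the sketch keeps a single shared velocity because the text only states that the robot moves at a constant speed.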

Software

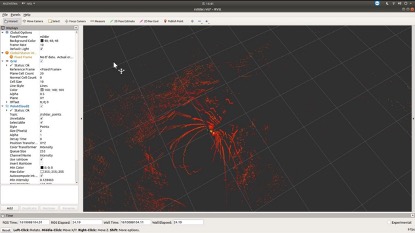

We visualise the point cloud generated by the LiDAR sensor and an image with depth information taken by the Xtion stereo camera in Fig. 4 and Fig. 5. With the physical and software environments we have set up, more complex algorithms can be implemented easily and more difficult tasks can be solved by this Rover robot.

Figure 4: A screenshot of the point cloud generated by the LiDAR sensor.

Figure 5: A screenshot of the image and depth information captured by the stereo camera.

Experiment

The test is divided into two experiments:

• Experiment 1: the Rover collects information about obstacles along the path with the Robosense LiDAR and builds a map. It then drives smoothly from the starting point and carries the robotic manipulator mounted on its back to the designated location for operation.

• Experiment 2: the robotic manipulator holds the shovel, but the robot does not know the height of the sand, so a depth camera is needed to measure it. Finally, the sand in the shovel is poured into the installed collection container.

In this project, an Xtion camera is used to measure the height of the sand. ROS packages then drive the robotic manipulator to scoop up the sand. Once this is done, the robot smoothly transports the sand to the specified place.
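
The height measurement in Experiment 2 can be sketched as follows. This is a minimal illustration and not the project's implementation: it assumes the depth image is already available as rows of depths in metres, that the camera looks straight down from a known height above the ground, and that invalid pixels are reported as 0 or NaN. The function name `estimate_sand_height` and the region-of-interest format are hypothetical.

```python
import math
from statistics import median
from typing import Optional, Sequence, Tuple


def estimate_sand_height(
    depth_m: Sequence[Sequence[float]],
    camera_height_m: float,
    roi: Tuple[int, int, int, int],
) -> Optional[float]:
    """Estimate the sand height above the ground inside a region of interest.

    depth_m         -- depth image as rows of distances in metres
    camera_height_m -- assumed height of the downward-looking camera
    roi             -- (top, bottom, left, right) pixel bounds, end-exclusive
    """
    top, bottom, left, right = roi
    # Keep only valid depth readings (drop zeros, NaN, and infinities).
    samples = [
        d
        for row in depth_m[top:bottom]
        for d in row[left:right]
        if d > 0.0 and math.isfinite(d)
    ]
    if not samples:
        return None  # no usable depth data in this region
    # The median is less sensitive to isolated outlier pixels than the mean.
    return camera_height_m - median(samples)


if __name__ == "__main__":
    depth = [
        [0.0, 0.8, 0.8],
        [0.9, 0.8, float("nan")],
        [0.8, 0.7, 0.8],
    ]
    print(estimate_sand_height(depth, camera_height_m=1.0, roi=(0, 3, 0, 3)))
```

If the camera is tilted rather than pointing straight down, the depth values would first have to be transformed into the robot's base frame before the subtraction is meaningful.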

Conclusion

Overall, our project can be divided into three parts. The first uses SLAM algorithms based on the 3D laser to generate a map, together with planning algorithms to drive autonomously outdoors and avoid obstacles. The second uses an RGB-D camera to detect objects such as shovels or other tools. The third is about scooping up samples from the sand dune with those tools. For the last two parts, we plan to deploy both non-learning-based and learning-based methods.

The work we have done in this direction includes setting up the physical platform and software environments and implementing some basic algorithms to complete the task. We installed a Kinova manipulator on a six-wheel mobile platform together with all the necessary devices, including a LiDAR sensor, a Raspberry Pi, an Intel NUC Mini PC, an ASUS Xtion PRO stereo camera, and two displays. We visualised the point cloud scanned by the LiDAR sensor, accessed the image with depth information from the stereo camera, and controlled the manipulator to execute a pre-programmed policy that completes the shoveling task. With the physical and software environments we have set up, more complex algorithms can be implemented easily and more difficult tasks can be solved by this Rover robot.

Video