A project of the Robotics 2020 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Rui Li, Lin Li, Jianzhong Xiao

A robust 3D cotton point cloud Construction for Localization and Collision Detection Using RealSense camera

Abstract

Cotton is one of the most important economic crops in the world. Usually the cotton-picking robot would carry an RGB-D camera. The cotton robot can take many depth images from different point views. Basing on these depth images, we need to find the relative pose between views with respect to the global coordinate systems such that the over-lapping parts between point clouds match as well as possible. Finally, the registration procedure would generate a segmented point cotton which would annotate where the cotton is in the global coordinate system. Moreover, these points cloud would be processed to feed to the Moveit! using as the collision detection part.

Instruction

The cotton-picking robot would carry an RGB-D camera which is a 3D sensor simultaneously acquires color and depth images to forma 3D point cloud. The cotton robot can take many depth images from different point views. Based on these depth images, we need to find the relative pose between views with respect to the global coordinate systems such that the overlapping parts between point clouds matches well as possible. Finally, the registration procedure would generate a segmented point cotton which would annotate where the cotton is in the global coordinate system. Moreover, these points cloud would be processed to feed to the Moveit! using as the collision detection part.

System Description

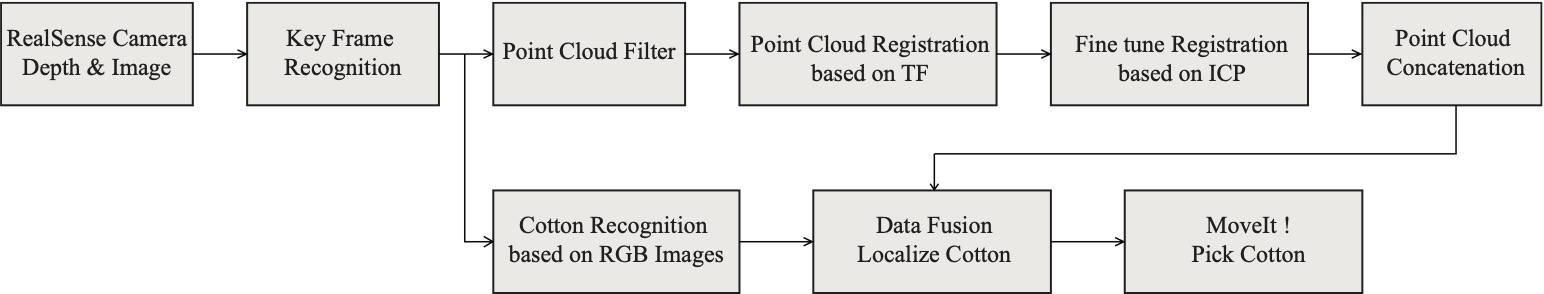

Figure 1: System & algorithm

Figure 1 shows the overall system-algorithm design of our cotton localization framework. The depth and RGB images are captured by RealSense Camera at a fixed rate (1 img/s in our design). Subsequently, the Key Frame Recognition module would analyze the key images/point-clouds and only perform transformation and stitching operations on these point clouds so as to make the final generated global point cloud map clear and accurate. Then, RGB images and point clouds are fed to the Cotton Recognition module and Point Cloud Filter module, respectively. The Point Cloud Filter module would remove the noise that existed in point clouds (e.g., outliers). On the other hand, the Cot-ton Recognition module finds the image coordinates of cotton. The point clouds are registered firstly by purely TF package (from camera_depth_optical_frame to Odom), subsequently fine-tuned by ICP algorithm. All transformed point clouds are concatenated to a final global point cloud map. This map combining with 2D image coordinates of cotton, is fed to the Data Fusion module to generate the real3D coordinates of cotton. Finally, the system calls MoveIt to pick cotton and move to the next position for cotton harvesting.

Experimental Result

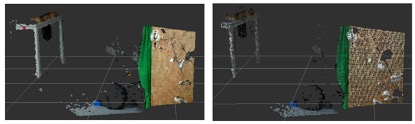

Figure 2: Point Cloud Filtering: Downsampling+Outlier removal

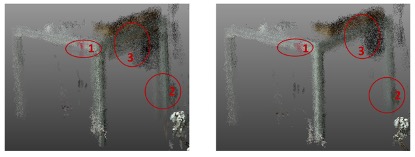

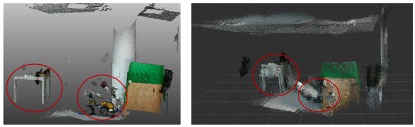

Figure 3: Fine tune the global point cloud with the ICP method; (a) shows the result of purely TF-based registration method; (b) shows the result of TF+ICP based registration method where point cloud are further aligned by the ICP method after the TF-based registration module.

Figure 4: Cotton Recognition; (a) RGB image from real world;(b) Result from cotton recognition

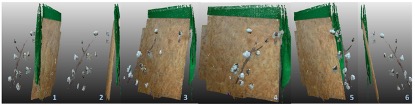

Figure 5: Global point cloud example: cotton model

Figure 6: (a) Optimized method (b) direct method

Video:

Conclusion

In this project, we make a cotton localization system. The cotton robot can take many depth images from different point views. Based on these depth images, the system needs to find the relative pose between views with respect to the global coordinate systems such that the overlapping parts between point clouds match as well as possible. Finally, the registration procedure would generate a segmented point cotton which would annotate where the cotton is in the global coordinate system. We implement a filter to reduce outliers and compress the point cloud and then we make use of ICP algorithm to fine tune the transformation matrix derives by the robot. After those modules, we can reconstruct a3D scene nicely and with less noise.