A project of the Robotics 2020 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Chenyang Zhang, Derun Li, Tiansu Chen

Abstract

This project is aiming at operating colorization on point cloud obtained by LiDAR device given images information along with the scanning. The whole process is divided into three stages and output a clear colored point cloud with less noise. The first stage is to filter points based on neighborhood range centered at camera position using Octree and Voxel. The second part is to perfectly assign RGB value to a 3D point by projecting it to a 2D image plane based on the fisheye projection model and find the correlation with pixels, where depth checking of OpenGL and projection in OpenCV are used. Finally, a fusion of multi-sourced coloring results of a single point is considered., where three strategies are introduced, and averaging weighted on distances and angles provides the best result.

Introduction

Simultaneous localization and mapping (SLAM) is a popular 3D reconstruction method and enables efficient production of high-density 3D point clouds of real-world environments. However, the colorization of point cloud built by SLAM often leads to an unsatisfactory result. This project seeks an efficient, powerful, and convenient algorithm to color the point cloud based on the video shot by the attached camera. The process of colorization will make the point cloud more recognizable and nice-looking. Meanwhile, it is more convenient and easy to apply reconstruction or recognition algorithms on a well-colored point cloud.

System Description

Algorithm Overview

The coloring process can be divided into _ parts:

-Point Filter: Selecting a proper scale of points for coloring.

-Colorization Based on Single Image: Coloring a point by a single image.

-The fusion of Multi-sourced Colorization: Mixing color data from multiple images for one point.

Point Filter

To colorize the points, the first step is filtering the point cloud and select the points to be colorized with respect to each camera frame.

We first build the OctoMap of the point cloud to divide the point cloud into small voxels. Then we find the voxels whose center is within a certain distance to the coordinate of the lidar for each frame. We record the center of each voxel and build an octree so that we can find several neighbor voxels in each iteration.

In this method, the points are organized and colored in a voxel and the colored point cloud can be composed of all these voxels without repetition. Moreover, the pre-divided voxels provide the probability of the parallelization of colorization.

Colorization Based on Single Image

We use Insta 360 ONE R as our RGB camera hardware and we use the fisheye camera model of OpenCV for simulation.

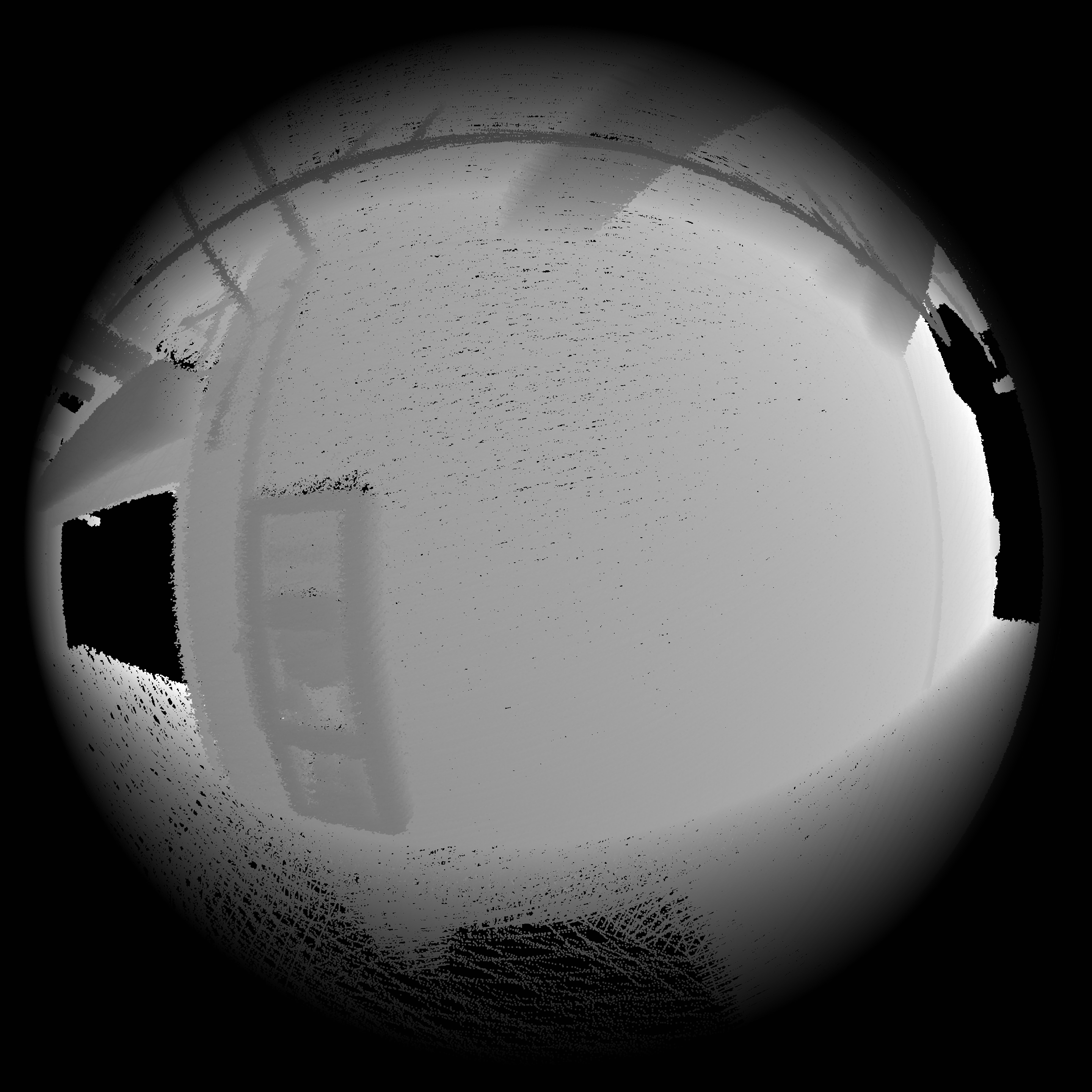

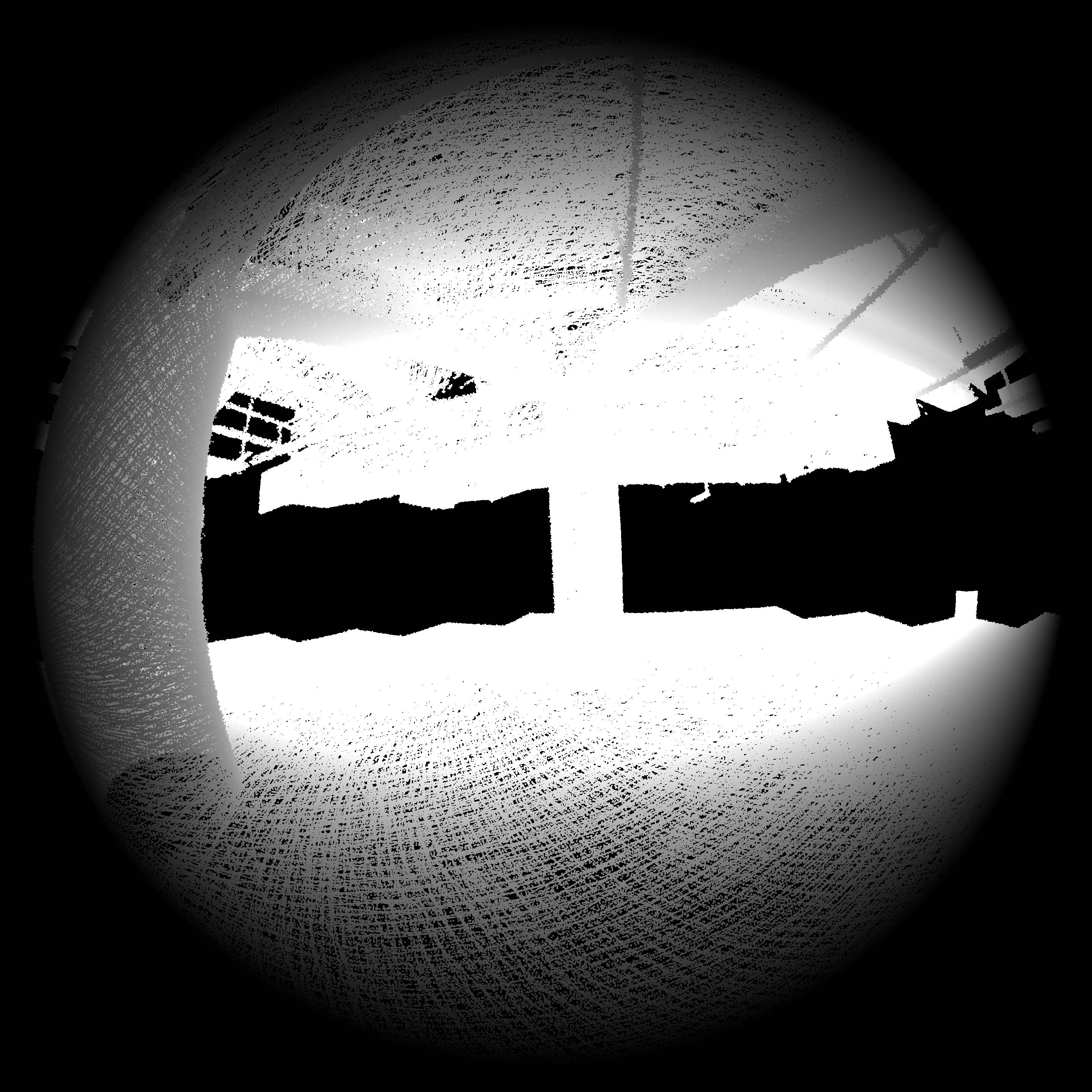

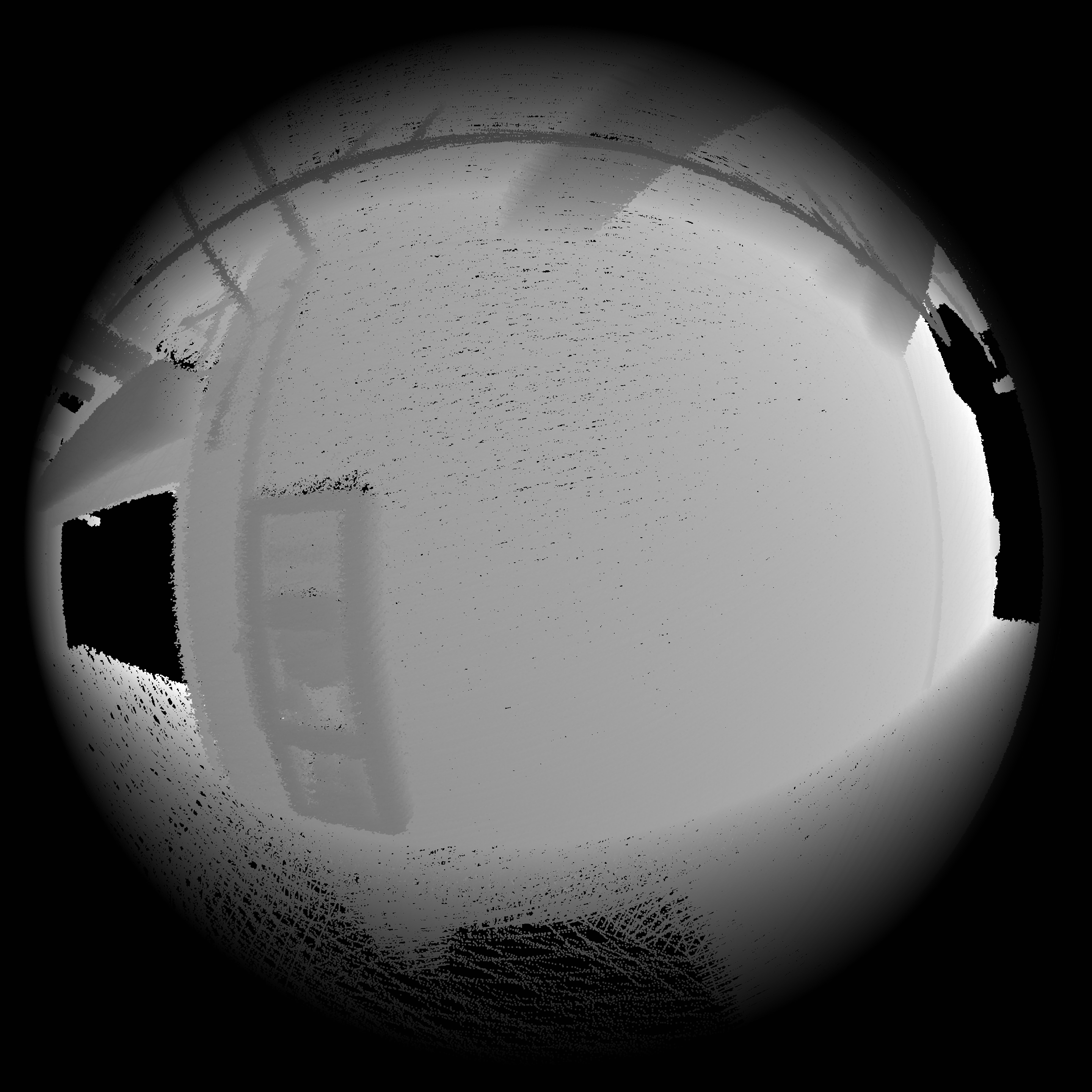

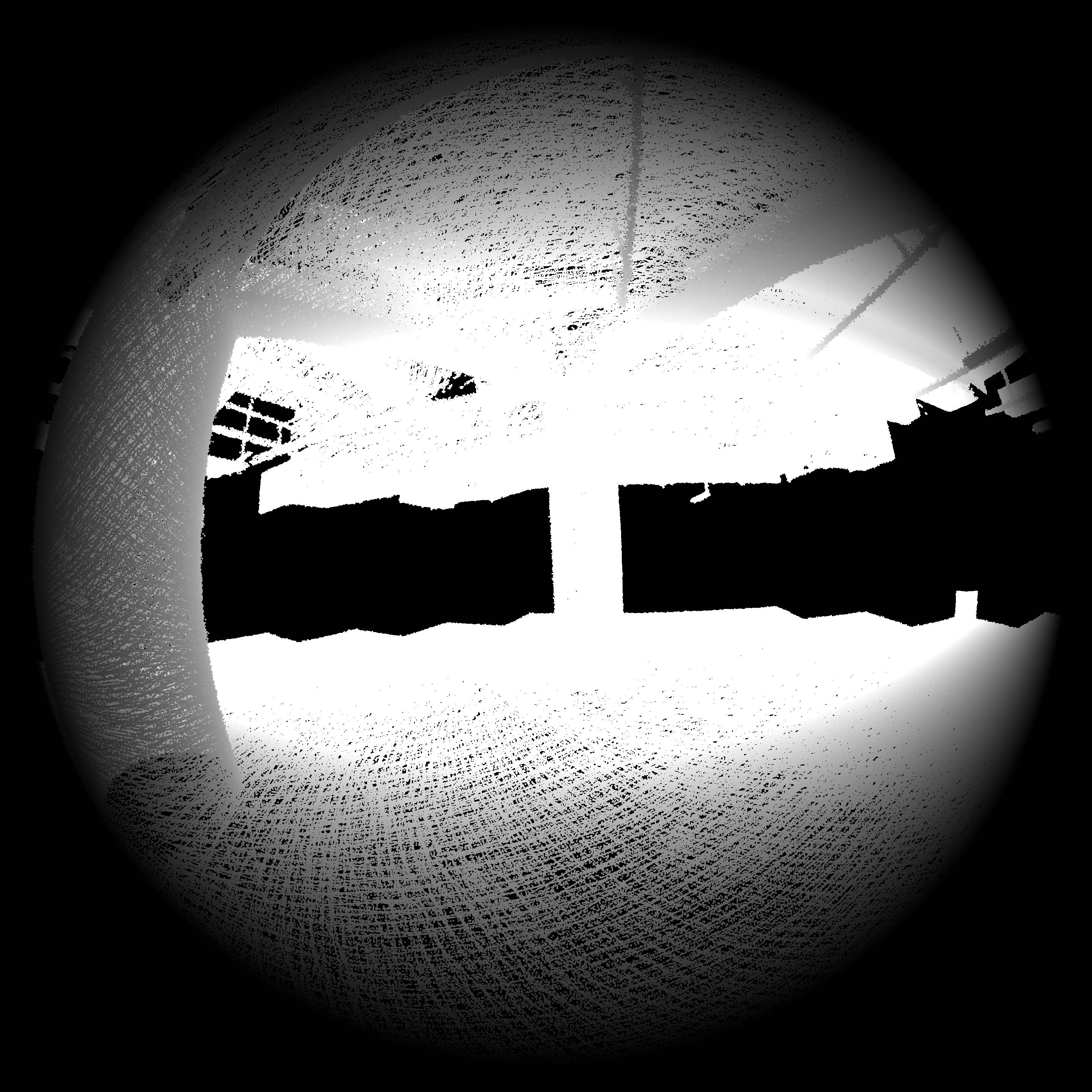

OpenGL projection provides an accurate depth map which indicates the nearest corresponding 3D point depth for each pixel, shown in Fig 1. This depth map generates a perfect depth checking function and successfully offers a solution for the ray-tracing problem. Then OpenCV gives out a convenient projection method without down-sampling. This pipeline results in a clear, dense, and not penetrated colorization.

Fig 1 Depth Map. Several depth image mapping of chosen frame in underground dataset introduced to reasonably merge the results in order to get a vivid colorization.

The fusion of Multi-sourced Colorization

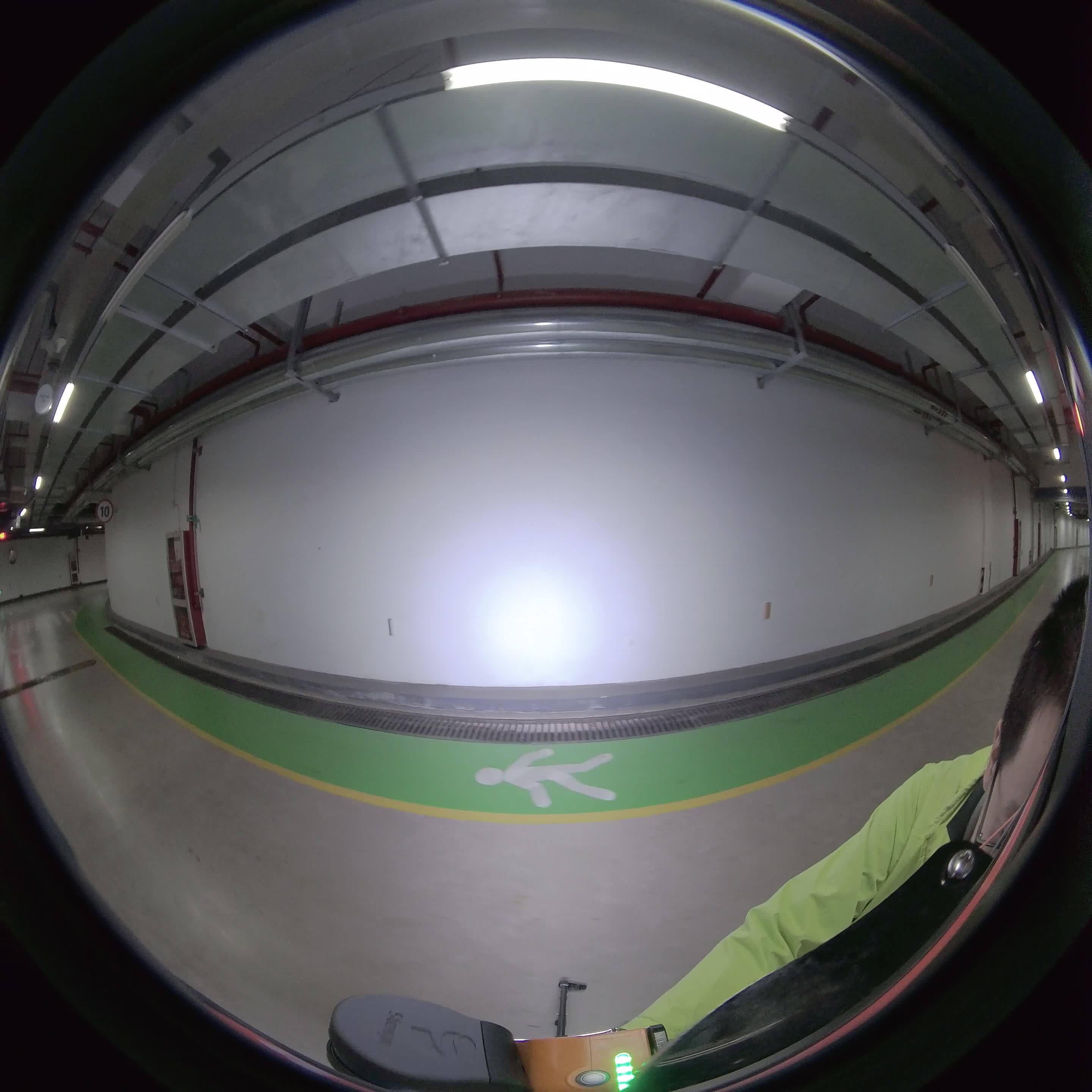

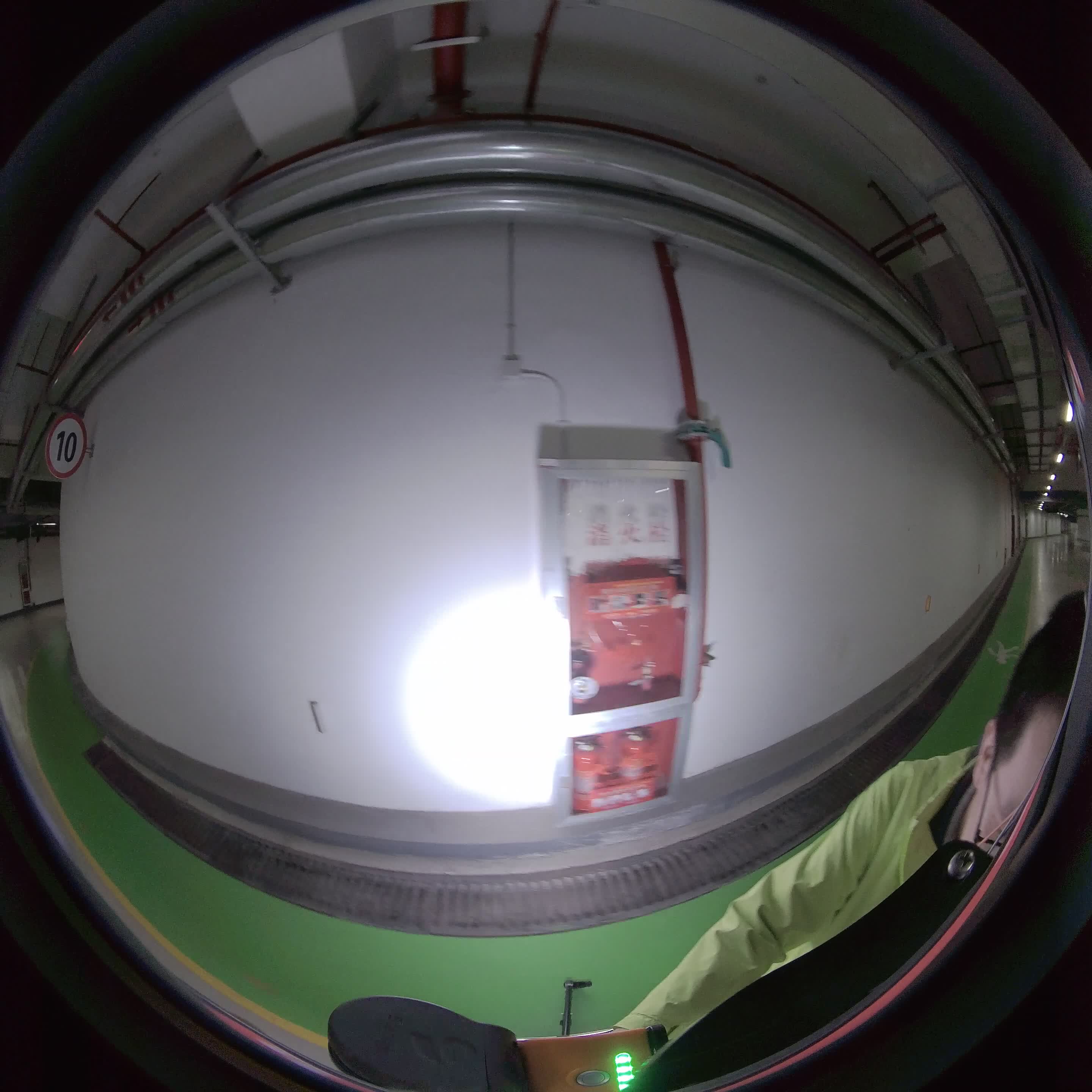

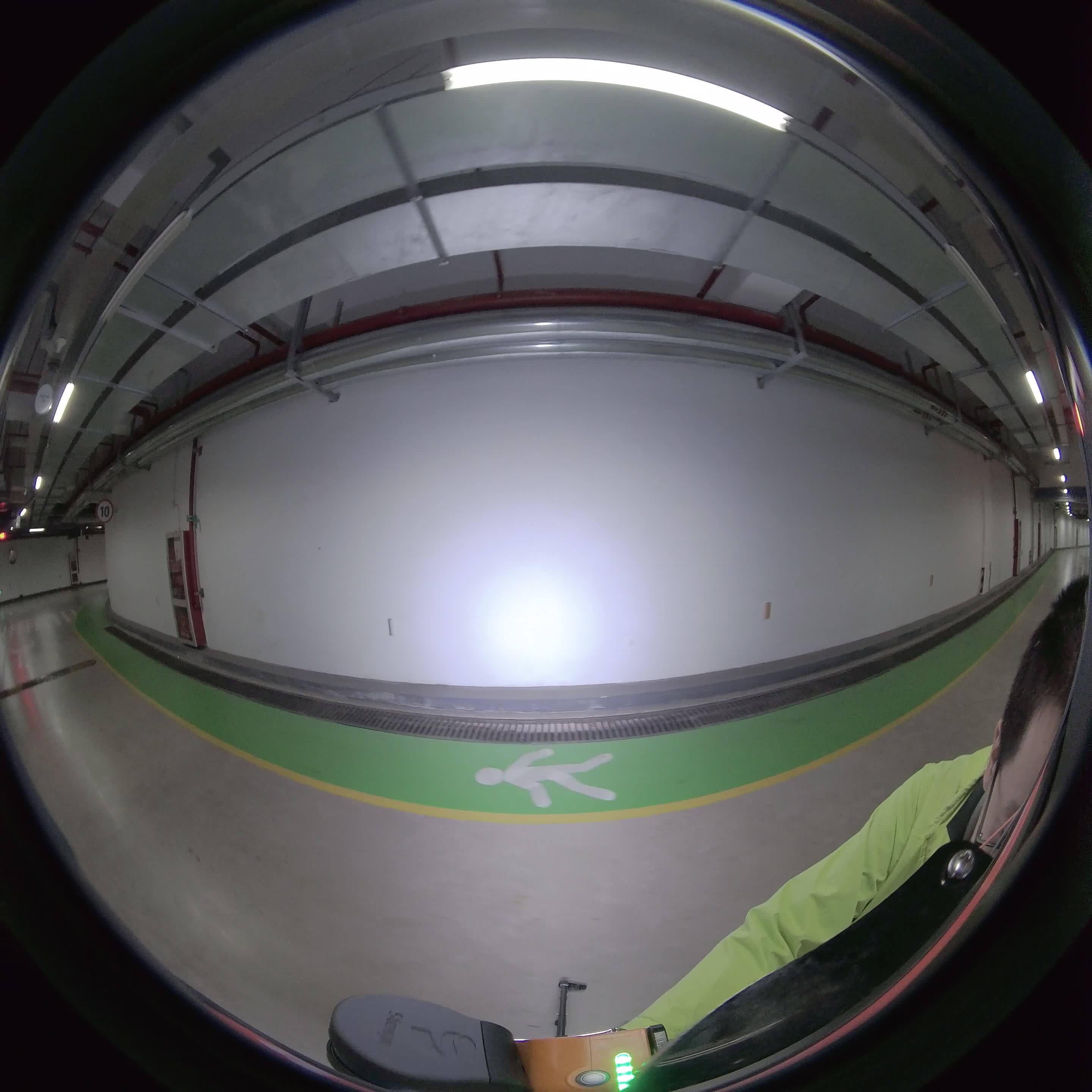

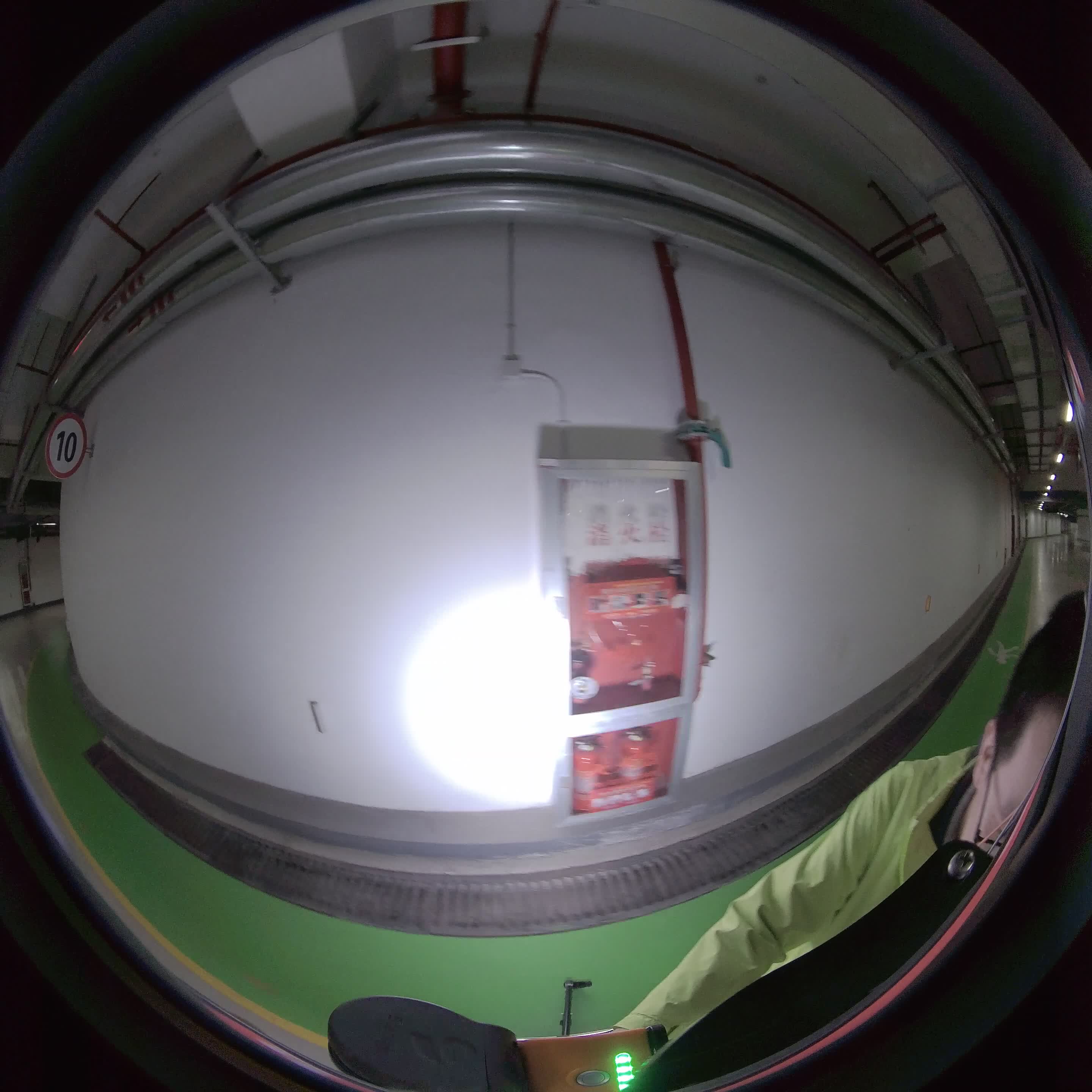

Fig 2 Multi-view Frames. The color of fire equipment on the wall changes

Due to the divide and conquer method used in the previous process, one single point will receive inferences of RGB property from multi-view images. Because of the field of view bounding, the angle between viewing ray and optical axis and environment (such as light, time) varieties, the color grasped in one frame may definitely differ from another view direction. For example, in Fig 2, due to the strong light from the spotlight attached to the device, the forward wall is white but the fringe is gray. Such that, a functional approach need to be introduced to reasonably merge the results in order to get a vivid colorization.

Hardware

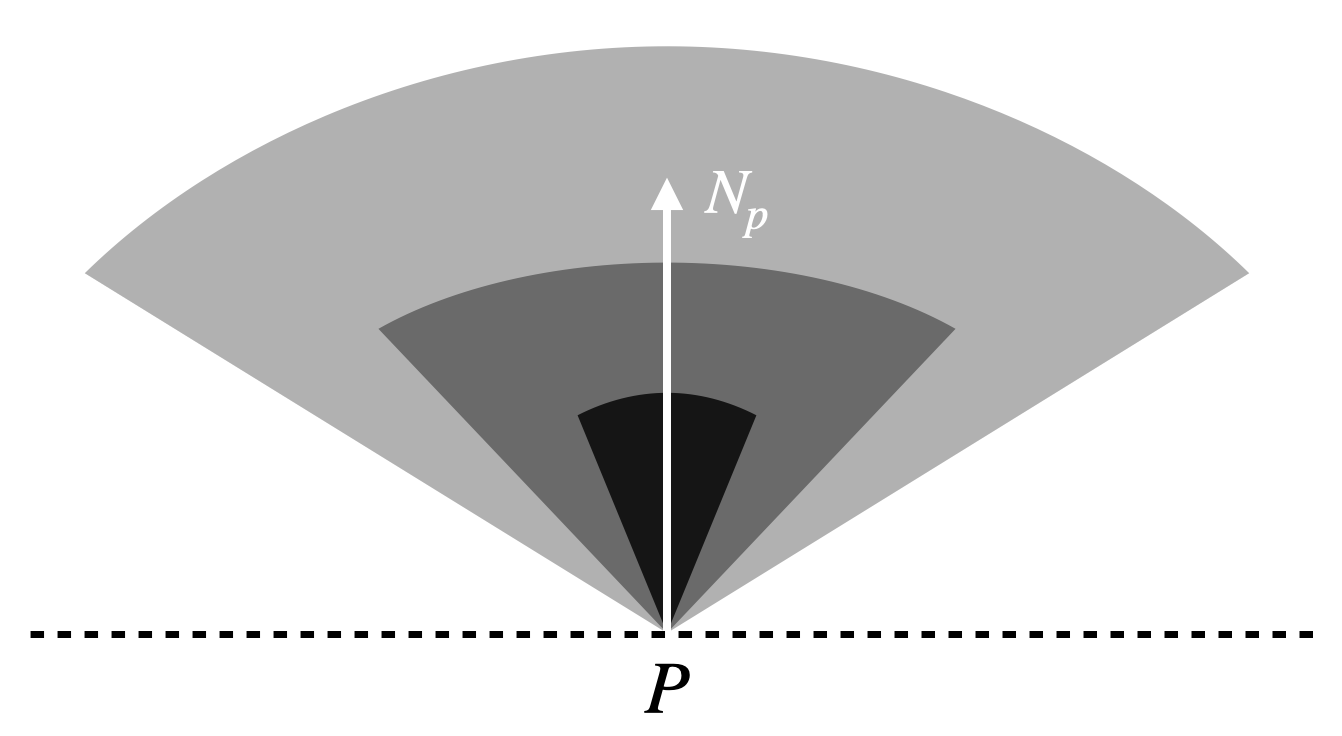

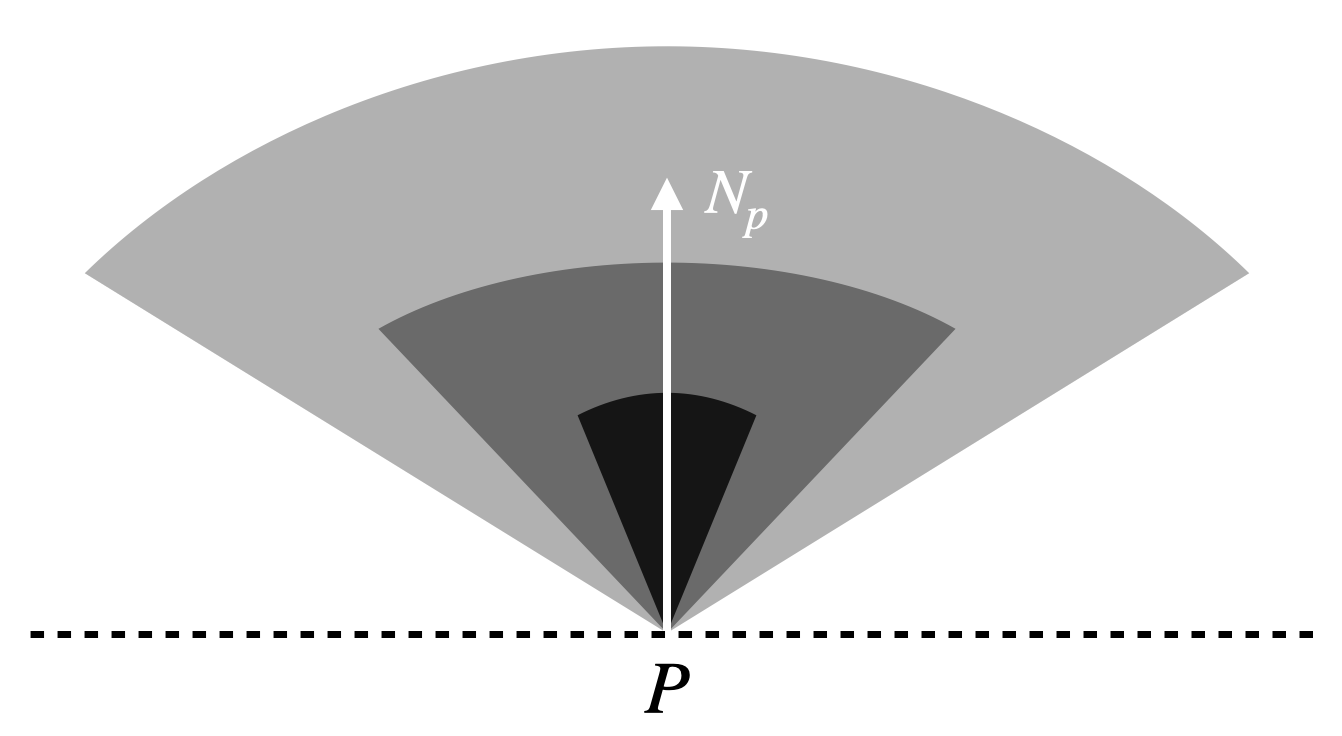

Fig 3 Weight Field. The inner sector is given the larger weigh.

Fig 4 Hardware. The sensor pod consists of two fish eye cameras and one LiDAR sensor, collect 3D points and RGB images simultaneously.

Our data are collected by a sensor pod (as shown in Fig 4) which has two fisheye cameras and one LiDAR sensor. The calibration work has been done so proper intrinsic and extrinsic are provided.

Result

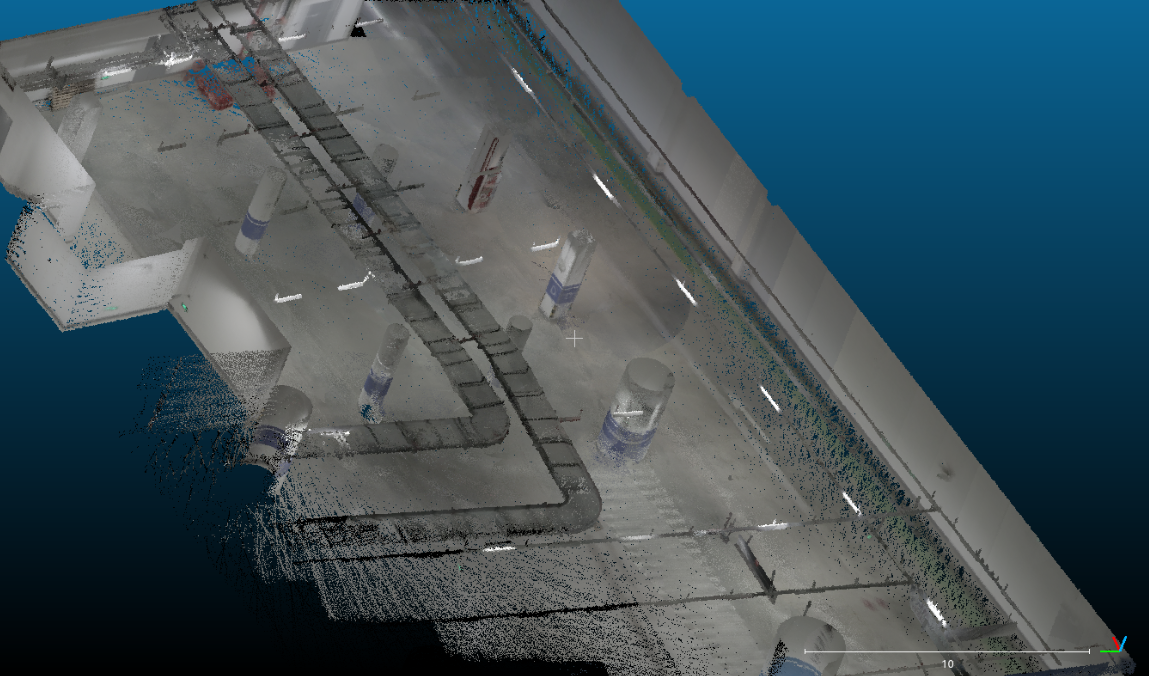

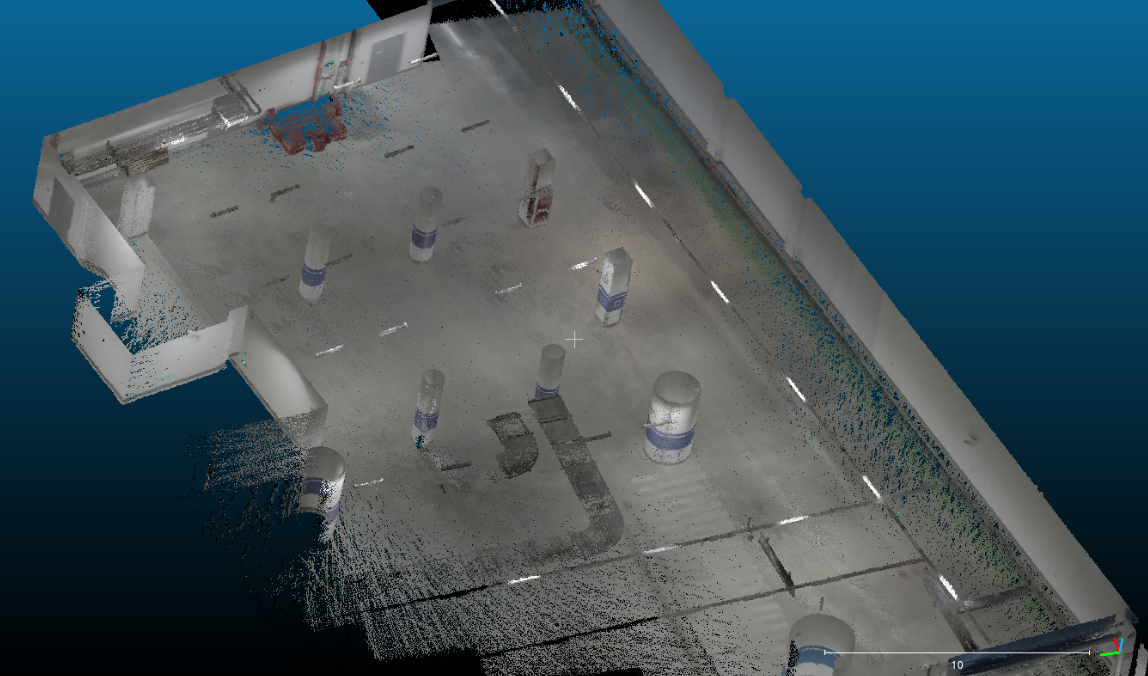

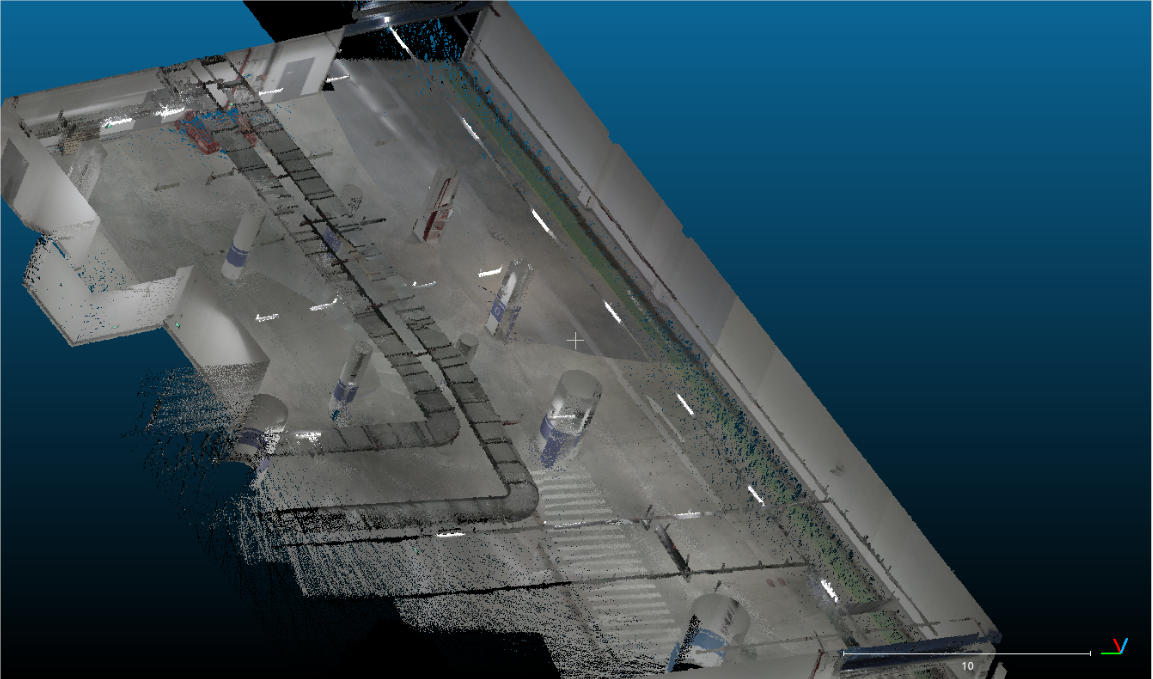

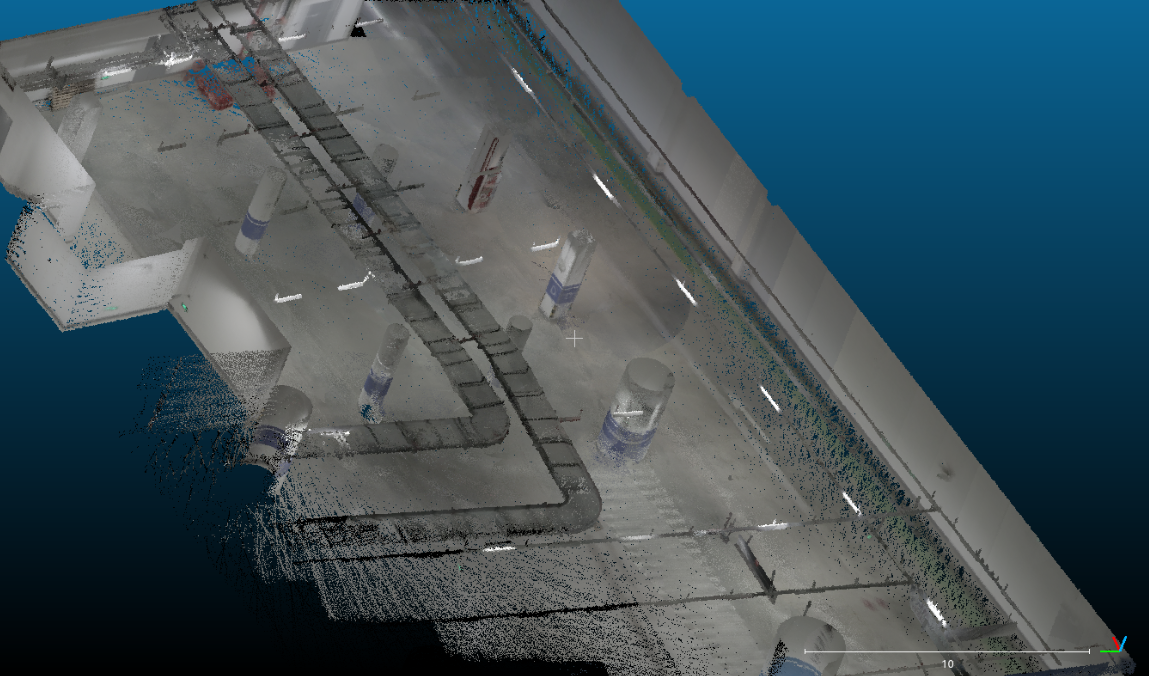

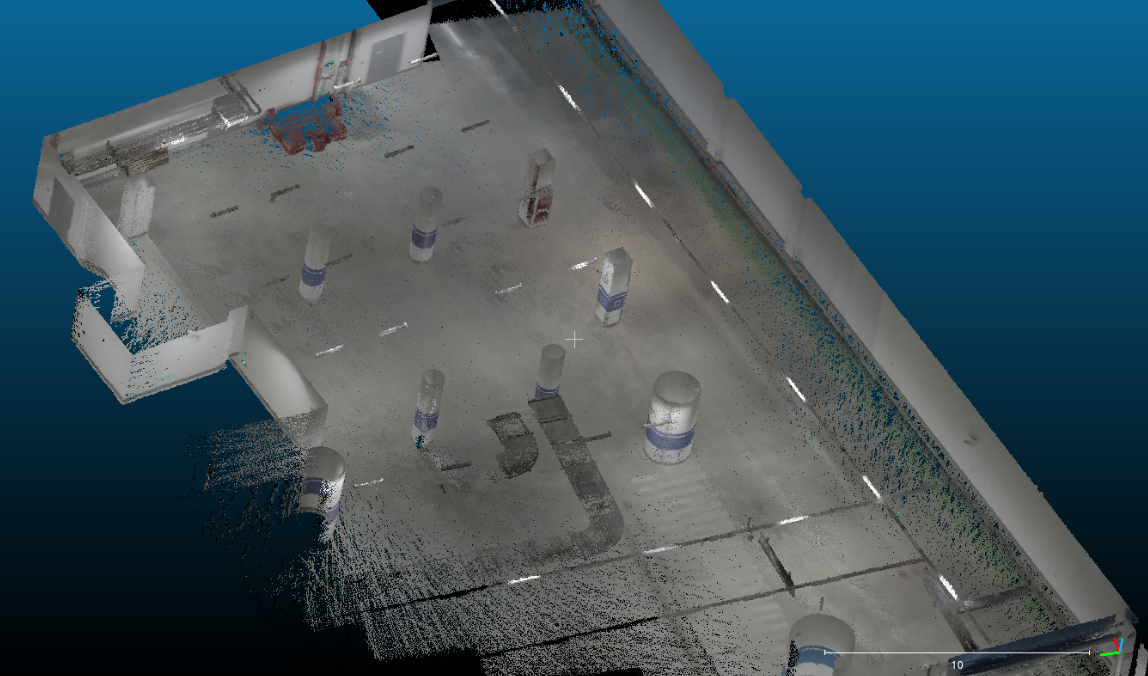

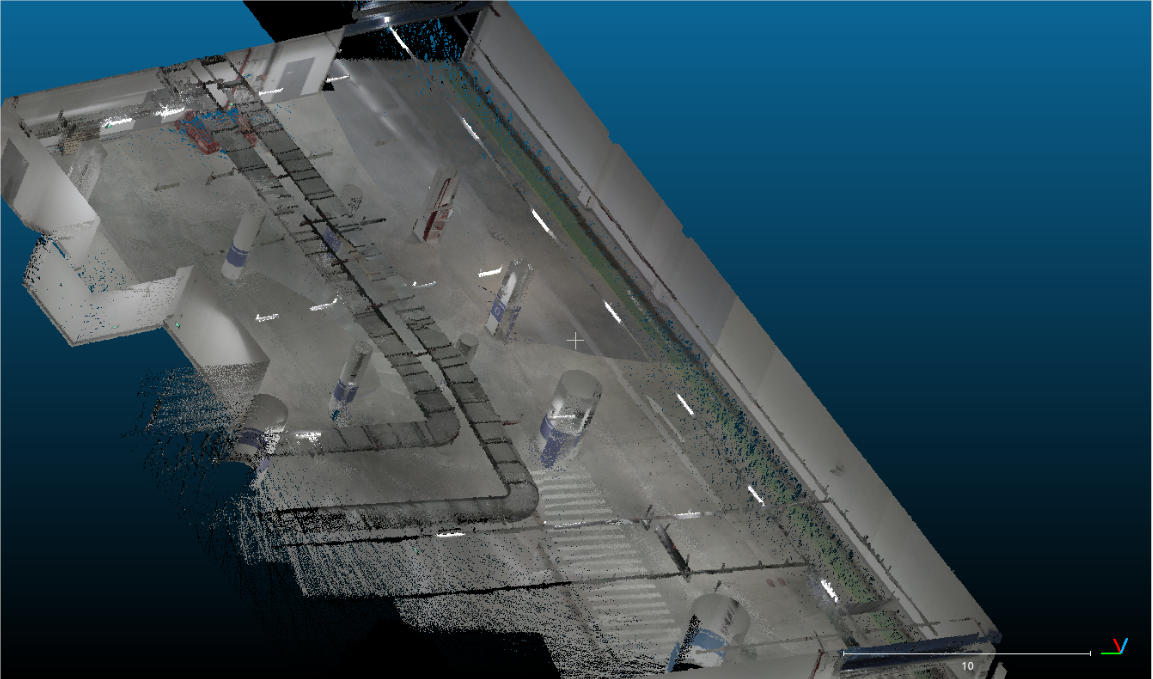

The result is shown in Fig 5 It can be seen from the figures that the colorization is good enough to easily recognize the objects and doing plane detection, while the slight difference is shown in details such as edge clearness and plane integrity among the

three fusion strategies.

Fig 5 Final Results. Colorization results by different method

System Evaluation

Statistically, we introduce two evaluation systems:

1.The re-project results in a 2D image and aligns with the original one to check correlations.

2.Select a purely colorized range of objects and check for wrongly colored points to comput

A project of the Robotics 2020 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Chenyang Zhang, Derun Li, Tiansu Chen

Abstract

This project is aiming at operating colorization on point cloud obtained by LiDAR device given images information along with the scanning. The whole process is divided into three stages and output a clear colored point cloud with less noise. The first stage is to filter points based on neighborhood range centered at camera position using Octree and Voxel. The second part is to perfectly assign RGB value to a 3D point by projecting it to a 2D image plane based on the fisheye projection model and find the correlation with pixels, where depth checking of OpenGL and projection in OpenCV are used. Finally, a fusion of multi-sourced coloring results of a single point is considered., where three strategies are introduced, and averaging weighted on distances and angles provides the best result.

Introduction

Simultaneous localization and mapping (SLAM) is a popular 3D reconstruction method and enables efficient production of high-density 3D point clouds of real-world environments. However, the colorization of point cloud built by SLAM often leads to an unsatisfactory result. This project seeks an efficient, powerful, and convenient algorithm to color the point cloud based on the video shot by the attached camera. The process of colorization will make the point cloud more recognizable and nice-looking. Meanwhile, it is more convenient and easy to apply reconstruction or recognition algorithms on a well-colored point cloud.

System Description

Algorithm Overview

The coloring process can be divided into _ parts:

-Point Filter: Selecting a proper scale of points for coloring.

-Colorization Based on Single Image: Coloring a point by a single image.

-The fusion of Multi-sourced Colorization: Mixing color data from multiple images for one point.

Point Filter

To colorize the points, the first step is filtering the point cloud and select the points to be colorized with respect to each camera frame.

We first build the OctoMap of the point cloud to divide the point cloud into small voxels. Then we find the voxels whose center is within a certain distance to the coordinate of the lidar for each frame. We record the center of each voxel and build an octree so that we can find several neighbor voxels in each iteration.

In this method, the points are organized and colored in a voxel and the colored point cloud can be composed of all these voxels without repetition. Moreover, the pre-divided voxels provide the probability of the parallelization of colorization.

Colorization Based on Single Image

We use Insta 360 ONE R as our RGB camera hardware and we use the fisheye camera model of OpenCV for simulation.

OpenGL projection provides an accurate depth map which indicates the nearest corresponding 3D point depth for each pixel, shown in Fig 1. This depth map generates a perfect depth checking function and successfully offers a solution for the ray-tracing problem. Then OpenCV gives out a convenient projection method without down-sampling. This pipeline results in a clear, dense, and not penetrated colorization.

Fig 1 Depth Map. Several depth image mapping of chosen frame in underground dataset introduced to reasonably merge the results in order to get a vivid colorization.

The fusion of Multi-sourced Colorization

Fig 2 Multi-view Frames. The color of fire equipment on the wall changes

Due to the divide and conquer method used in the previous process, one single point will receive inferences of RGB property from multi-view images. Because of the field of view bounding, the angle between viewing ray and optical axis and environment (such as light, time) varieties, the color grasped in one frame may definitely differ from another view direction. For example, in Fig 2, due to the strong light from the spotlight attached to the device, the forward wall is white but the fringe is gray. Such that, a functional approach need to be introduced to reasonably merge the results in order to get a vivid colorization.

Hardware

Fig 3 Weight Field. The inner sector is given the larger weigh.

Fig 4 Hardware. The sensor pod consists of two fish eye cameras and one LiDAR sensor, collect 3D points and RGB images simultaneously.

Our data are collected by a sensor pod (as shown in Fig 4) which has two fisheye cameras and one LiDAR sensor. The calibration work has been done so proper intrinsic and extrinsic are provided.

Result

The result is shown in Fig 5 It can be seen from the figures that the colorization is good enough to easily recognize the objects and doing plane detection, while the slight difference is shown in details such as edge clearness and plane integrity among the

three fusion strategies.

Fig 5 Final Results. Colorization results by different method

System Evaluation

Statistically, we introduce two evaluation systems:

1.The re-project results in a 2D image and aligns with the original one to check correlations.

2.Select a purely colorized range of objects and check for wrongly colored points to compute the accuracy.

The accuracy can reach above 95% based on method 2.

Conclusion

Point cloud colorization makes use of knowledge in many different areas such as Computer Vision and Robotics. Get inspired by the colorization works based on multi-view static photos, we reasonably split the whole point cloud into mini space of the current camera FOV using Octree search and Voxel container. Using PCL and Eigen, we learn how to do calculations based on octree to search for node neighbors and set the focus domain, after which 3D to 2D projection and colorization can be done by applying the fisheye projection model to OpenGL and OpenCV. The result point cloud can be obtained with clear, well arranged, and vivid rendering colors after projection, alignment, and fusion. One step forward, maybe motion detection needs to be appended to deblur the indistinct area.

Video

e the accuracy.

The accuracy can reach above 95% based on method 2.

Conclusion

Point cloud colorization makes use of knowledge in many different areas such as Computer Vision and Robotics. Get inspired by the colorization works based on multi-view static photos, we reasonably split the whole point cloud into mini space of the current camera FOV using Octree search and Voxel container. Using PCL and Eigen, we learn how to do calculations based on octree to search for node neighbors and set the focus domain, after which 3D to 2D projection and colorization can be done by applying the fisheye projection model to OpenGL and OpenCV. The result point cloud can be obtained with clear, well arranged, and vivid rendering colors after projection, alignment, and fusion. One step forward, maybe motion detection needs to be appended to deblur the indistinct area.

Video