A project of the Robotics 2020 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Wang Youcheng, Zou Lu

Introduction

Mobile phones have become an indispensable personal item for everyone and bring us a great deal of convenience. Augmented reality (AR) is a relatively new technology that integrates real-world information with virtual-world content. Combining AR with mobile phones can offer new solutions for specific scenarios and improve the user experience. For example, we can obtain real-time model rendering information for a real scene by photographing it with a mobile phone.

Point cloud registration finds the transformation between the coordinate systems of two point sets. It uses the correspondences between points in one set (the target) and points in the other (the original point set) to compute that transformation, and then aligns the two sets. Unity 3D is software for creating and rendering 3D models.

System Description

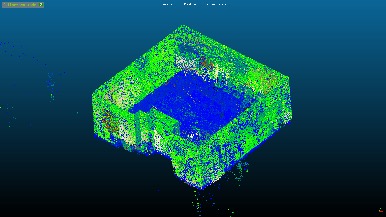

First, we use a 3D laser scanner to collect point cloud data of one room. Then we use the iPad to scan a random scene in that room, register that scan against the room's point cloud, and render the 3D model in Unity. The iPad scan of the room and the pcd file opened in CloudCompare are shown in the figure. Although there are many approaches to point cloud registration, our task is to find one that is both fast and robust. At the same time, we aim to render a good 3D model in Unity so that this technology can be applied more widely.

Feature extraction

3D point cloud feature description and extraction are the most basic and critical parts of point cloud processing and recognition. Most algorithms, such as segmentation, resampling, registration, and surface reconstruction, depend heavily on the results of feature description and extraction. By scale, features are generally divided into local features and global features. For example, geometric shape features such as local normals are local descriptions, while topological features are global descriptions; both belong to 3D point cloud feature description and extraction.
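As an illustration of a local feature, the sketch below estimates a surface normal from a point's nearest neighbours using principal component analysis. It is a minimal example under assumptions, not the method used in this project: the function name `estimate_normal`, the neighbourhood size `k`, and the synthetic planar test data are all hypothetical.

```python
import numpy as np

def estimate_normal(points, query, k=10):
    """Estimate the surface normal at `query` from its k nearest neighbours.

    The normal is taken as the eigenvector of the neighbourhood covariance
    matrix with the smallest eigenvalue (direction of least variance).
    """
    points = np.asarray(points, dtype=float)
    dists = np.linalg.norm(points - query, axis=1)
    neighbours = points[np.argsort(dists)[:k]]
    centred = neighbours - neighbours.mean(axis=0)
    cov = centred.T @ centred / len(neighbours)
    # eigh returns eigenvalues in ascending order, so column 0 is the normal.
    _, eigvecs = np.linalg.eigh(cov)
    return eigvecs[:, 0]

# Synthetic data (assumption): 200 points lying on the plane z = 0.
rng = np.random.default_rng(0)
xy = rng.uniform(-1.0, 1.0, size=(200, 2))
plane = np.column_stack([xy, np.zeros(200)])

normal = estimate_normal(plane, np.array([0.0, 0.0, 0.0]))
print(normal)  # parallel to the z axis; the sign is arbitrary
```

The sign of the estimated normal is ambiguous, so in practice normals are usually flipped to point towards the sensor position.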

Point cloud registration

Point cloud registration can be understood as the process of computing a coordinate transformation and using it to bring point cloud data captured from different perspectives into a single specified coordinate system. Figure 3 shows two point cloud sets. Generally speaking, the two point clouds to be registered can be made to overlap through position transformations such as rotation and translation. The two point clouds are therefore related by a rigid transformation: their shape and size are the same, and only their coordinate positions differ. Point cloud registration finds this coordinate transformation between the two point clouds. We then briefly introduce three point cloud registration algorithms, namely ICP, NDT and TEASER++.
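The rigid transformation described above can be sketched in a few lines. The example below is a minimal illustration, not the implementation used in this project: it solves for the rotation and translation from known correspondences with the SVD-based least-squares (Kabsch) solution, and wraps that step in a basic point-to-point ICP loop with brute-force nearest-neighbour matching. All function names and the synthetic data are assumptions; libraries such as PCL or Open3D provide tuned implementations for real scans.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares R, t such that R @ src[i] + t is close to dst[i]."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))       # guard against reflections
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def nearest_neighbours(src, dst):
    """Brute-force match of each src point to its closest dst point."""
    d2 = ((src[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
    rms = float(np.sqrt(d2.min(axis=1).mean()))
    return dst[d2.argmin(axis=1)], rms

def icp(src, dst, iterations=30):
    """Basic point-to-point ICP; returns the accumulated R, t."""
    R_total, t_total = np.eye(3), np.zeros(3)
    current = src.copy()
    for _ in range(iterations):
        matched, _ = nearest_neighbours(current, dst)
        R, t = best_rigid_transform(current, matched)
        current = current @ R.T + t
        R_total, t_total = R @ R_total, R @ t_total + t
    return R_total, t_total

# Synthetic data (assumption): a target cloud and a slightly moved copy.
rng = np.random.default_rng(0)
target = rng.uniform(-1.0, 1.0, size=(100, 3))
a = np.radians(3.0)
R_true = np.array([[np.cos(a), -np.sin(a), 0.0],
                   [np.sin(a),  np.cos(a), 0.0],
                   [0.0,        0.0,       1.0]])
t_true = np.array([0.02, -0.01, 0.03])
source = (target - t_true) @ R_true   # so R_true @ source[i] + t_true == target[i]

# With known correspondences the transform is recovered directly.
R_k, t_k = best_rigid_transform(source, target)

# Without correspondences, ICP alternates matching and alignment.
_, err_before = nearest_neighbours(source, target)
R_icp, t_icp = icp(source, target)
_, err_after = nearest_neighbours(source @ R_icp.T + t_icp, target)
print(err_before, err_after)
```

ICP only converges to the correct alignment when the initial misalignment is small, which is one reason feature-based or globally robust methods are used to obtain a starting pose.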

System Evaluation

To test the system, we use point cloud registration to evaluate the accuracy of the estimated pose. We use the iPad to obtain a new point cloud and register it with the point cloud of the room obtained from the GeoSLAM scanner, in order to verify whether we can render a model at the same coordinates as before.

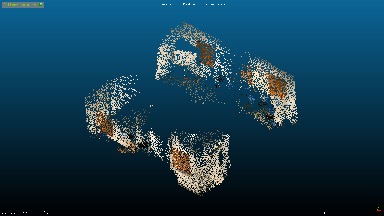

In our experiment, we successfully aligned the point cloud data obtained from the iPad scanner with the point cloud data of the whole room. Figure 9 shows the point cloud data of the corners of a room, and Figure 10 shows the registration result of the local point cloud data and the global point cloud data.
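One way such an alignment could be quantified is sketched below. This is an illustrative example, not the evaluation procedure used in this project: the function names, the inlier threshold of 0.05 (assumed to be in metres), and the synthetic clouds are all assumptions. It reports the fraction of local-scan points that land close to the room cloud (fitness), the RMS distance of those inliers, and the rotation and translation difference between an estimated pose and a reference pose.

```python
import numpy as np

def registration_metrics(source, target, threshold=0.05):
    """Fitness (inlier fraction) and inlier RMSE of an aligned source cloud."""
    d2 = ((source[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
    nearest = np.sqrt(d2.min(axis=1))
    inliers = nearest < threshold
    fitness = float(inliers.mean())
    if not inliers.any():
        return fitness, float("inf")
    rmse = float(np.sqrt((nearest[inliers] ** 2).mean()))
    return fitness, rmse

def pose_error(R_est, t_est, R_ref, t_ref):
    """Rotation error in degrees and translation error between two poses."""
    R_delta = R_est @ R_ref.T
    cos_angle = np.clip((np.trace(R_delta) - 1.0) / 2.0, -1.0, 1.0)
    angle_deg = float(np.degrees(np.arccos(cos_angle)))
    return angle_deg, float(np.linalg.norm(t_est - t_ref))

# Synthetic data (assumption): a "room" cloud and a local scan that is
# already aligned except for a 1 cm residual offset along x.
rng = np.random.default_rng(1)
room = rng.uniform(0.0, 5.0, size=(500, 3))
local_scan = room[:100] + np.array([0.01, 0.0, 0.0])

fitness, rmse = registration_metrics(local_scan, room)

# Compare an estimated pose with a reference pose (90 degrees about z).
R_ref = np.eye(3)
R_est = np.array([[0.0, -1.0, 0.0],
                  [1.0,  0.0, 0.0],
                  [0.0,  0.0, 1.0]])
angle_deg, trans_err = pose_error(R_est, np.zeros(3), R_ref, np.zeros(3))
print(fitness, rmse, angle_deg, trans_err)
```

The brute-force distance matrix is only practical for small clouds; a k-d tree would be the usual choice for a full room scan.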

Conclusions

In the early stage of our work, we carried out the project preparation and read papers related to phone AR. In the middle stage, we studied point cloud registration algorithms and point cloud feature methods, together with the theory behind OpenGL and Unity 3D. In the final stage, we applied this knowledge to the project and implemented the phone AR system. Our experimental results show that the system works, but many aspects can still be improved. For future work, we have built a point cloud of a building. By matching a scan taken with the iPad against this point cloud, we can determine which part of the building the current scene is in, and then show other information about that location, such as pipes and wires.