A project of the Robotics 2019 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Xiting Zhao, Zhijie Yang and Haochuan Wan

Abstract

In this project, we designed a new mapping robot with more sensors and a more powerful computer. Designing, examining, and simulating an architecture that combines different types of sensors, and tightly synchronizing those sensors, can be challenging. The datasets generated by this new mapping robot are intended for evaluating SLAM algorithms and for building high-accuracy maps.

Introduction

For autonomous robots, the ability to get from one place to another is the most important capability. To realize this capability, a Simultaneous Localization and Mapping (SLAM) system is often used, and the quality of the SLAM result depends on the quality of the input data. To get better data, more sensors are used to collect information from the environment. In our project, we installed a large number of sensors: 10 RGB monocular cameras, 4 Lidars, 1 omnidirectional camera, 2 event cameras, 1 IMU and even IR cameras. However, as the number of sensors increases, synchronization between sensors becomes a problem, because in an unsynchronized system the sensors collect data at different times, which results in errors in the generated map. In our project, we will migrate the synchronization scheme of the existing mapping robot to the new one.
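To give a rough sense of why unsynchronized capture matters, the following minimal sketch estimates how far the robot moves or turns between two sensor captures that are offset in time. The function names and the example speed and offset values are hypothetical and are not measurements from our robot. For instance, at an assumed 1 m/s, a 10 ms offset already corresponds to 1 cm of displacement between the two measurements.

```python
def displacement_error(speed_mps: float, time_offset_s: float) -> float:
    """Distance (m) the robot travels between two captures offset in time."""
    return abs(speed_mps * time_offset_s)


def bearing_error_deg(yaw_rate_dps: float, time_offset_s: float) -> float:
    """Angle (deg) the robot turns between two captures offset in time."""
    return abs(yaw_rate_dps * time_offset_s)


if __name__ == "__main__":
    # Hypothetical values chosen only for illustration.
    speed = 1.0        # m/s
    yaw_rate = 30.0    # deg/s
    offset = 0.010     # s, i.e. 10 ms between two sensors' captures
    print("displacement error [m]:", displacement_error(speed, offset))
    print("bearing error [deg]:", bearing_error_deg(yaw_rate, offset))
```

This only models constant velocity over the offset interval; it says nothing about how a particular SLAM algorithm propagates such errors into the map.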

Examination and simulation are also important for a newly designed robot. To avoid repeatedly changing the architecture after construction is done, we first visualize our design using computer-aided design (CAD) software. Using the geometry information obtained from the CAD models, we can generate precise URDF files and modify them to verify our robot's fields of view (FOVs) in Gazebo.
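As an illustration of turning a CAD mounting pose into URDF, the sketch below builds a link and a fixed joint for one sensor using Python's standard `xml.etree.ElementTree`. It is not our actual generation tool: the helper `add_fixed_sensor`, the robot and link names, and the pose values are all hypothetical, and a real URDF would also carry visual, collision and inertial elements plus Gazebo sensor plugins.

```python
import xml.etree.ElementTree as ET


def add_fixed_sensor(robot, parent, name, xyz, rpy):
    """Attach a sensor link to `parent` through a fixed joint.

    xyz is the position in meters and rpy the roll/pitch/yaw in radians,
    e.g. as read from the CAD model (values used below are made up).
    """
    ET.SubElement(robot, "link", name=name)
    joint = ET.SubElement(robot, "joint", name=f"{name}_joint", type="fixed")
    ET.SubElement(joint, "parent", link=parent)
    ET.SubElement(joint, "child", link=name)
    ET.SubElement(
        joint,
        "origin",
        xyz=" ".join(f"{v:g}" for v in xyz),
        rpy=" ".join(f"{v:g}" for v in rpy),
    )
    return joint


# Hypothetical robot with one sensor; names and pose are placeholders.
robot = ET.Element("robot", name="mapping_robot")
ET.SubElement(robot, "link", name="base_link")
add_fixed_sensor(robot, "base_link", "front_lidar", (0.4, 0.0, 0.6), (0.0, 0.0, 0.0))

urdf_text = ET.tostring(robot, encoding="unicode")

if __name__ == "__main__":
    print(urdf_text)
```

Generating the joints from one table of CAD poses keeps the simulated sensor placement consistent with the mechanical design when the design changes.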

System Description

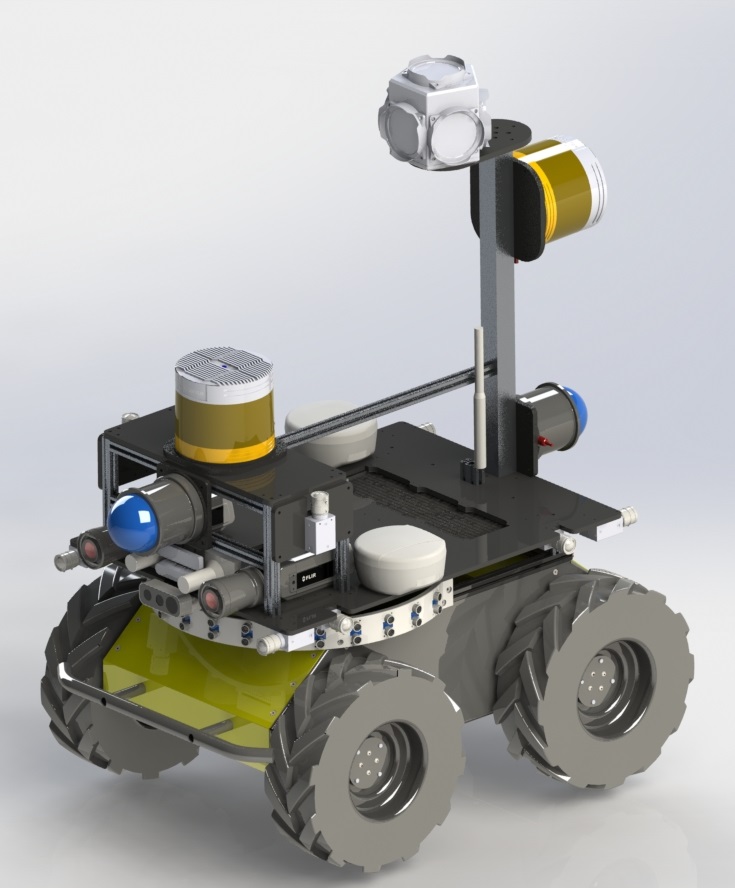

On the robot platform, we mount a large number of sensors. We have 10 PointGrey GS3 51S5C cameras, arranged as stereo pairs looking to the front, rear, left, right and up. We have two Robosense Bpearl Lidars, one at the front and one at the rear. Each Bpearl Lidar has a 360°×90° super wide FOV and a small blind spot of 10 cm, so together the two Lidars cover the whole scene. We have two Robosense Ruby 128-beam Lidars, one mounted vertically at the front and one mounted horizontally at the rear. We have a PointGrey Ladybug5+ panoramic camera on the rear top of the robot, which covers 90% of the full sphere. We also have 17 ultrasonic sensors arranged in a circle, covering the front 270 degrees. We also have a stereo IR thermal camera pair and a stereo event-based camera pair at the front. To obtain accurate ground truth, we also mount two differential GPS receivers.
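The claim that two 360°×90° Lidars can cover the whole scene can be checked with a simple geometric sketch. The code below treats each Bpearl as covering the hemisphere around its axis and samples directions on a sphere. It assumes the two sensor axes point straight forward and straight backward, which is an illustrative assumption rather than our exact mounting, and it ignores the blind spot, the sensor offsets and occlusion by the robot body. All function and constant names are hypothetical.

```python
import math


def covered(direction, axis, half_angle_deg=90.0):
    """True if a unit direction lies within half_angle_deg of the sensor axis."""
    dot = sum(d * a for d, a in zip(direction, axis))
    # Small tolerance so directions exactly on the hemisphere boundary count.
    return dot >= math.cos(math.radians(half_angle_deg)) - 1e-9


def sphere_directions(step_deg=10):
    """Yield unit vectors on an elevation/azimuth grid (not area-weighted)."""
    for el in range(-90, 91, step_deg):
        for az in range(0, 360, step_deg):
            e, a = math.radians(el), math.radians(az)
            yield (math.cos(e) * math.cos(a), math.cos(e) * math.sin(a), math.sin(e))


def coverage_fraction(axes, step_deg=10):
    """Fraction of sampled grid directions seen by at least one sensor."""
    dirs = list(sphere_directions(step_deg))
    hit = sum(any(covered(d, ax) for ax in axes) for d in dirs)
    return hit / len(dirs)


# Assumed axes: front sensor looks along +x, rear sensor along -x.
FRONT = (1.0, 0.0, 0.0)
REAR = (-1.0, 0.0, 0.0)

if __name__ == "__main__":
    print("front only:", coverage_fraction([FRONT]))
    print("front + rear:", coverage_fraction([FRONT, REAR]))
```

Under these assumptions every sampled direction is seen by at least one of the two sensors; checking the real design against occlusion still requires the simulation in Gazebo.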

Model of the Mapping Robot II

Work in Progress