A project of the Robotics 2019 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Ling Gao and Kun Huang

Abstract

We explore the feasibility of estimating the motion of a differential wheeled vehicle with a downward-facing event-based camera alongside a conventional camera that exhibits fronto-parallel motion with respect to the ground plane. A collection of multi-channel datasets has been recorded and can be used for further research.

Introduction

Our work is inspired by optical mouse sensors. The idea is that for any device moving over a flat, planar surface, the in-plane velocity can be measured by an optical sensor that directly faces the plane. As the device moves, its displacement is deduced from the apparent motion of brightness patterns in the perceived image. This turns motion estimation into a simple image registration problem in which we only have to identify a planar homography. Another important motivation for a downward-facing sensor is that the depth and structure of the scene are known in advance and that, as a result, the motion of patterns in the image under planar displacement can be described by a simple Euclidean transformation. However, even though this estimation problem appears simple, it is difficult to find point correspondences between subsequent images: the perceived floor texture often does not yield distinctive, easily matchable keypoints, and conventional images additionally suffer from low-light environments and motion blur. An event camera can compensate for these weaknesses thanks to its high dynamic range and low latency.
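To make the Euclidean transformation mentioned above concrete, the following minimal sketch fits a 2D rotation and translation to matched image points in the least-squares sense. It is only an illustration under the assumption that point correspondences are already available; it is not the registration approach used in this project, and the function name `fit_rigid_2d` is hypothetical.

```python
import math

def fit_rigid_2d(src, dst):
    """Least-squares rotation angle and translation mapping src points onto dst.

    src, dst: equally long sequences of (x, y) pairs, assumed to be matched.
    Returns (theta, tx, ty) such that dst ~ R(theta) * src + (tx, ty).
    """
    n = len(src)
    # Centroids of both point sets.
    csx = sum(p[0] for p in src) / n
    csy = sum(p[1] for p in src) / n
    cdx = sum(p[0] for p in dst) / n
    cdy = sum(p[1] for p in dst) / n
    # Accumulate dot and cross products of the centred correspondences.
    dot = 0.0
    cross = 0.0
    for (sx, sy), (dx, dy) in zip(src, dst):
        ax, ay = sx - csx, sy - csy
        bx, by = dx - cdx, dy - cdy
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    # The optimal rotation angle follows in closed form in 2D.
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation aligns the rotated source centroid with the target centroid.
    tx = cdx - (c * csx - s * csy)
    ty = cdy - (s * csx + c * csy)
    return theta, tx, ty
```

With noise-free correspondences the function recovers the exact planar motion. With real floor texture, the hard part remains obtaining reliable correspondences in the first place.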

System Description

Data acquisition is an essential step in any scientific experiment. Following the guidance we received, and to suit different scenarios, we mount several sensors on the differential wheeled robot: a Velodyne Lidar, a conventional high-resolution RGB camera, and a dynamic vision sensor that integrates an event camera, an IMU (Inertial Measurement Unit) and a low-resolution monochromatic camera. An OptiTrack system additionally provides high-precision ground truth. A photograph of the robot is shown in Figure 1.

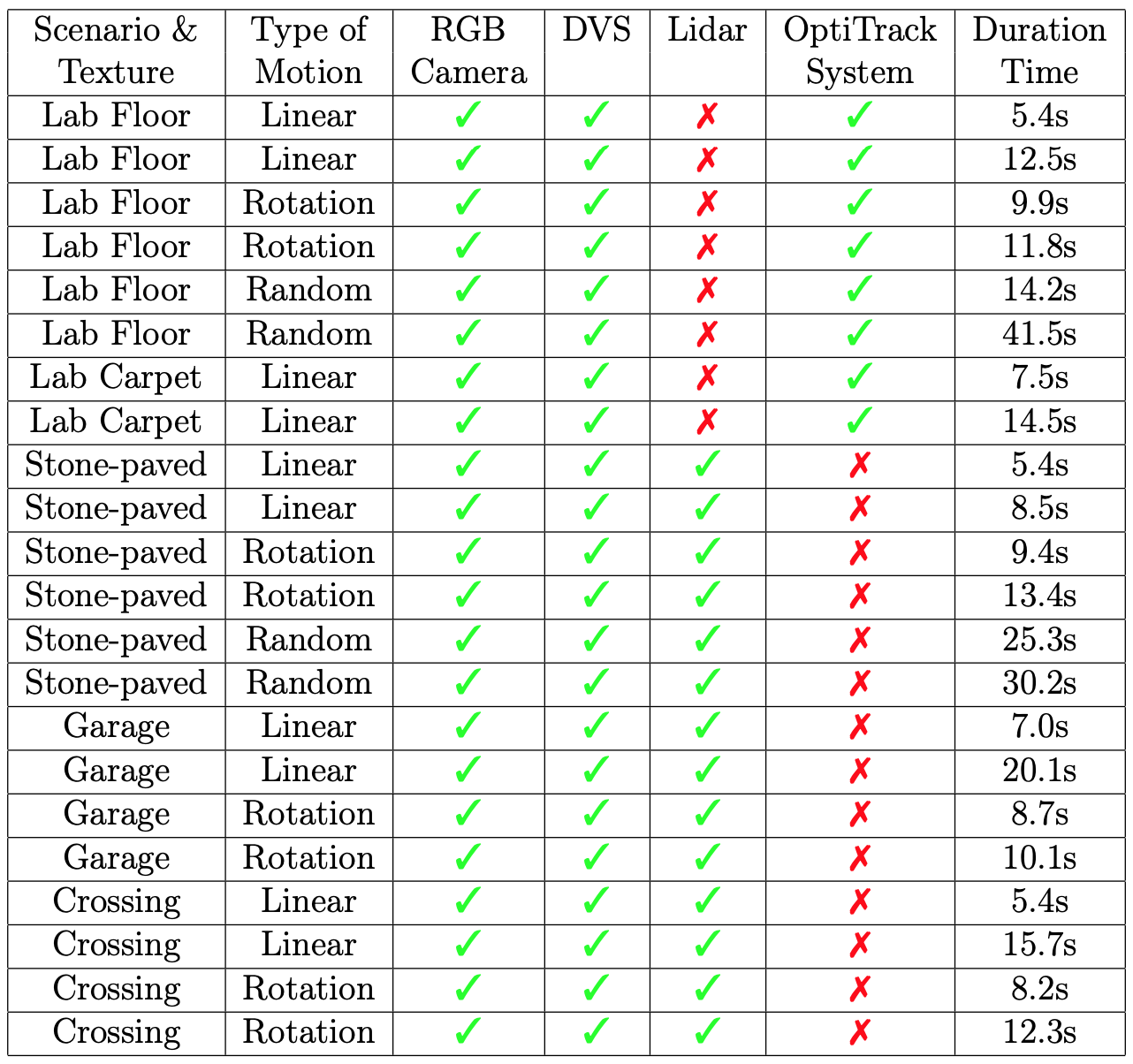

The datasets recorded under different scenarios and with different combinations of sensors are listed in Table, and their floor textures are displayed in Figure 2.

Each data stream is provided both as text/png files and as binary files (rosbag). While their content is identical, each format is better suited to particular applications. For prototyping, inspection, and testing, we recommend the text files, since they can be loaded easily using Python or Matlab. The binary rosbag files are intended for applications that are executed on a real system.

The files in text/png format are as follows.

- calib.txt Intrinsic parameters (fx fy cx cy k1 k2 p1 p2 k3).

- pose.txt One measurement per line (timestamp px py pz qx qy qz qw).

- dvs/events.txt One event per line (timestamp x y polarity).

- dvs/imu.txt One measurement per line (timestamp ax ay az gx gy gz).

- dvs/image_raw/*.png Grey images with a resolution of 346×260.

- camera/image_raw/*.png Grey images with a resolution of 2448×2048.
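As a rough illustration of how the text files listed above might be loaded in Python, the sketch below parses event and pose lines. It assumes whitespace-separated fields in the order given above, floating-point timestamps and integer polarities; none of these details are confirmed here, and the names `Event`, `Pose`, `parse_events` and `parse_poses` are hypothetical.

```python
from typing import Iterable, List, NamedTuple, Tuple

class Event(NamedTuple):
    t: float        # timestamp
    x: int          # pixel column
    y: int          # pixel row
    polarity: int   # sign of the brightness change (encoding assumed)

class Pose(NamedTuple):
    t: float                                        # timestamp
    position: Tuple[float, float, float]            # (px, py, pz)
    quaternion: Tuple[float, float, float, float]   # (qx, qy, qz, qw)

def _fields(lines: Iterable[str]):
    """Yield the whitespace-separated fields of each non-empty, non-comment line."""
    for line in lines:
        parts = line.split()
        if not parts or parts[0].startswith("#"):
            continue
        yield parts

def parse_events(lines: Iterable[str]) -> List[Event]:
    """Parse lines of the form 'timestamp x y polarity'."""
    return [Event(float(t), int(x), int(y), int(p)) for t, x, y, p in _fields(lines)]

def parse_poses(lines: Iterable[str]) -> List[Pose]:
    """Parse lines of the form 'timestamp px py pz qx qy qz qw'."""
    poses = []
    for parts in _fields(lines):
        t, px, py, pz, qx, qy, qz, qw = (float(v) for v in parts)
        poses.append(Pose(t, (px, py, pz), (qx, qy, qz, qw)))
    return poses
```

Both functions accept any iterable of strings, so an open file handle can be passed directly, for example `parse_events(open("dvs/events.txt"))`.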

The contents of the binary format are as follows.

- camera–calibration contains intrinsic parameters.

- vrpn contains pose information of the vehicle as ground truth.

- velodyne contains the Lidar scans.

- rpgdvsros contains event streams, IMU streams, and grey images.

- pointgrey contains grey images with high resolution.

Conclusions

Given the small depth of the scene, images must be processed at a very high frame rate if elevated vehicle speeds are to be estimated. Our efforts therefore focus on improving computational efficiency, accuracy and robustness, on employing event-based cameras to obtain very low-latency perception, and on unlocking the unexplored potential for accurate visual odometry in dynamic scenarios in conjunction with our globally optimal registration approach. The dataset we collected provides a solid foundation for further research on visual odometry for differential wheeled vehicles.
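The link between scene depth and frame rate noted above can be made explicit with a small back-of-the-envelope sketch. It assumes a pinhole camera in pure fronto-parallel translation, a focal length expressed in pixels, and a tolerable inter-frame displacement chosen by the user; the function name and all numbers in the usage note are hypothetical and not measurements from this project.

```python
def required_frame_rate(focal_px, speed_mps, height_m, max_shift_px):
    """Frame rate needed to keep the image shift per frame below max_shift_px.

    For a fronto-parallel pinhole camera at height_m above the ground,
    a ground speed of speed_mps produces an image velocity of
    focal_px * speed_mps / height_m pixels per second.
    """
    image_speed_px_per_s = focal_px * speed_mps / height_m
    return image_speed_px_per_s / max_shift_px
```

For example, with an assumed focal length of 300 pixels, a height of 0.1 m, a speed of 2 m/s and a tolerated shift of 10 pixels per frame, `required_frame_rate(300, 2.0, 0.1, 10)` gives roughly 600 frames per second, which illustrates why a small scene depth pushes conventional cameras to their limits.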