A project of the Robotics 2017 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Peihong Yu and Jia Zheng

Introduction

Traditional approaches to stereo visual SLAM rely on point correspondences to estimate the camera trajectory and build a map of the environment. Consequently, their performance deteriorates in low-textured scenes, where it is difficult to find a large or well-distributed set of point features. We propose a stereo visual SLAM system that uses line segments to work robustly in such scenes. We use the LSD line detector to detect line segments, the LBD line descriptor to match them, and the trifocal tensor to compute the relative pose between two adjacent stereo frames.

Theory

Trifocal Tensor

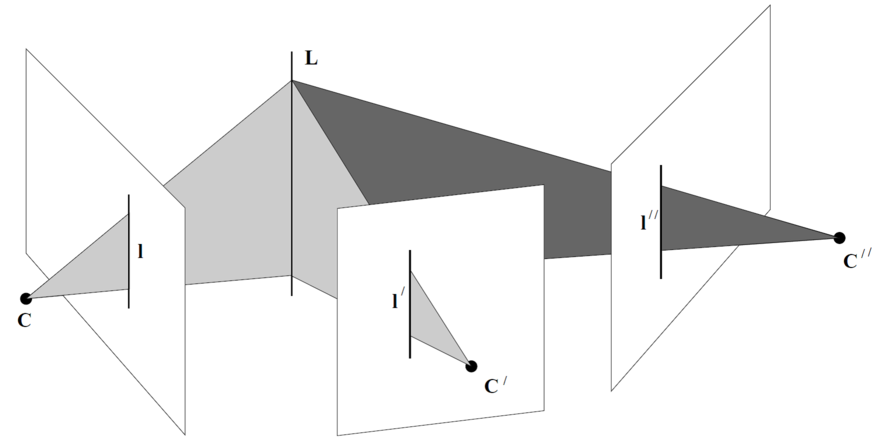

The trifocal tensor describes all the projective geometric relations between three views that are independent of scene structure.

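The line constraint can be written as $l^\top = l'^\top [T_1, T_2, T_3]\, l''$, i.e. $l_i = l'^\top T_i\, l''$ for $i = 1, 2, 3$, where equality holds up to scale. Here $l$, $l'$ and $l''$ are the images of the same 3D line $L$ in the three views (a line triplet), and the three matrices $\{T_1, T_2, T_3\}$ constitute the trifocal tensor in matrix notation; they are functions of the camera matrices.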
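For reference, the standard textbook construction expresses the three slices directly in terms of the camera matrices once the first camera is in canonical form; $\mathbf{a}_i$ and $\mathbf{b}_i$ denote the $i$-th columns of $A$ and $B$. The last line restates the constraint in a form that does not depend on the unknown scale of $l$: each line triplet gives three equations, of which two are independent.

$$
P = [\,I \mid \mathbf{0}\,], \qquad P' = [\,A \mid \mathbf{a}_4\,], \qquad P'' = [\,B \mid \mathbf{b}_4\,]
$$

$$
T_i = \mathbf{a}_i \mathbf{b}_4^\top - \mathbf{a}_4 \mathbf{b}_i^\top, \qquad i = 1, 2, 3
$$

$$
\left( l'^\top [T_1, T_2, T_3]\, l'' \right) [\,l\,]_\times = \mathbf{0}^\top
$$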

Trifocal Tensor in stereo

In our stereo setting, the two stereo frames provide four views in total, so each 3D line has four projected images. The stereo rig is calibrated and the line triplet measurements can be computed from the images, so the only unknowns are the rotation and translation between the two stereo frames, which are exactly what we want to solve for.

We choose the two views of the first stereo frame and one view of the adjacent stereo frame to construct our trifocal tensor constraint. In the minimal case, only 2 lines are needed, from which we obtain 4 trifocal tensor constraints to recover the transformation between the two stereo frames.
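A minimal sketch of how the trifocal constraint turns into a residual for the unknown pose. It rests on assumptions the report does not state: normalized image coordinates (K = I), a rectified rig with baseline `b` along x, cameras P = [I | 0] and P' = [I | -b e_x] for the first stereo frame, and P'' = [R | t] for the left camera of the next frame. All function names are hypothetical; this is not the authors' solver, only the quantity such a solver would drive to zero.

```python
from typing import List

Vec = List[float]
Mat = List[List[float]]


def cross(u: Vec, v: Vec) -> Vec:
    """Cross product; for two homogeneous points it gives the joining line."""
    return [u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0]]


def project(P: Mat, X: Vec) -> Vec:
    """Project an inhomogeneous 3D point with a 3x4 camera matrix."""
    Xh = list(X) + [1.0]
    return [sum(P[r][c] * Xh[c] for c in range(4)) for r in range(3)]


def image_line(P: Mat, X1: Vec, X2: Vec) -> Vec:
    """Homogeneous image line through the projections of two 3D points."""
    return cross(project(P, X1), project(P, X2))


def stereo_cameras(b: float, R: Mat, t: Vec):
    """Hypothetical setup: left/right cameras of frame 1, left camera of frame 2."""
    P1 = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]  # [I | 0]
    P2 = [[1.0, 0.0, 0.0, -b], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]   # [I | -b e_x]
    P3 = [list(R[r]) + [t[r]] for r in range(3)]                              # [R | t]
    return P1, P2, P3


def trifocal_tensor(P2: Mat, P3: Mat) -> List[Mat]:
    """Slices T_i = a_i b4^T - a4 b_i^T; valid only when the first camera is [I | 0]."""
    a4 = [P2[r][3] for r in range(3)]
    b4 = [P3[r][3] for r in range(3)]
    T = []
    for i in range(3):
        a_i = [P2[r][i] for r in range(3)]
        b_i = [P3[r][i] for r in range(3)]
        T.append([[a_i[j] * b4[k] - a4[j] * b_i[k] for k in range(3)] for j in range(3)])
    return T


def transfer_line(T: List[Mat], l2: Vec, l3: Vec) -> Vec:
    """Predict the first-view line: l_i = l2^T T_i l3."""
    return [sum(l2[j] * T[i][j][k] * l3[k] for j in range(3) for k in range(3))
            for i in range(3)]


def line_residual(l1: Vec, l2: Vec, l3: Vec, b: float, R: Mat, t: Vec) -> Vec:
    """l1 x (predicted l1): zero when the line triplet agrees with the pose (R, t)."""
    _, P2, P3 = stereo_cameras(b, R, t)
    return cross(l1, transfer_line(trifocal_tensor(P2, P3), l2, l3))
```

With the true (R, t) the residual vanishes up to rounding. Shifting t moves the back-projected plane of l'' off the 3D line, and the residual becomes non-zero, which is what a pose solver or an inlier test would measure.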

Experiments

Simulation Result

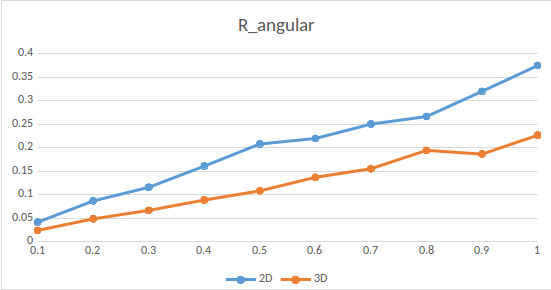

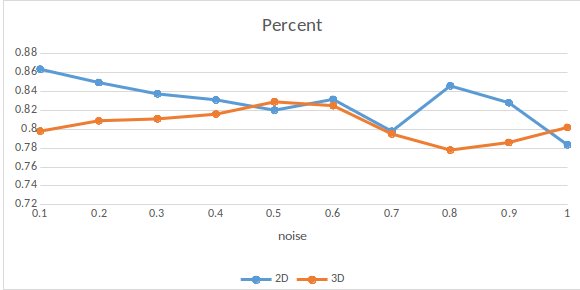

We compare our solver with another solver that takes 3D lines as input.

The x-axis shows the noise, in pixels, added to the endpoints of the 2D line triplets. R_angular denotes the angular error between the rotation matrix we solved for and the ground-truth rotation matrix, and the percentage result is the rate of acceptable solutions, i.e. solutions whose rotation error is below 1 degree.
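The two metrics can be computed as below. This is a sketch assuming the common geodesic rotation distance, θ = arccos((trace(R_estᵀ R_gt) − 1) / 2); the report does not state its exact formula, and the helper names are hypothetical.

```python
import math
from typing import List, Sequence

Mat = List[List[float]]


def rotation_error_deg(R_est: Mat, R_gt: Mat) -> float:
    """Geodesic angle between two rotations, in degrees."""
    # trace(A^T B) equals the sum of element-wise products of A and B
    tr = sum(R_est[i][j] * R_gt[i][j] for i in range(3) for j in range(3))
    # clamp so rounding error cannot push acos outside its domain
    cos_theta = max(-1.0, min(1.0, (tr - 1.0) / 2.0))
    return math.degrees(math.acos(cos_theta))


def acceptable_rate(errors_deg: Sequence[float], threshold_deg: float = 1.0) -> float:
    """Fraction of trials whose rotation error is below the threshold."""
    return sum(e < threshold_deg for e in errors_deg) / len(errors_deg)


def rot_z(angle_rad: float) -> Mat:
    """Rotation about the z-axis, used here only to build test inputs."""
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
```

For example, `rotation_error_deg(rot_z(math.radians(0.5)), rot_z(0.0))` is about 0.5, and `acceptable_rate([0.2, 0.9, 1.5, 3.0])` is 0.5, since two of the four trials fall under 1 degree.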

As the noise increases, the performance of both solvers degrades. Our solver is less accurate than the 3D-3D solver, but more robust.

Real Data Experiments

We implemented a visual odometry pipeline with our trifocal tensor solver based on the ORB-SLAM2 framework. Since ORB-SLAM2 works with point features, we modified all the functions related to our work to handle lines.

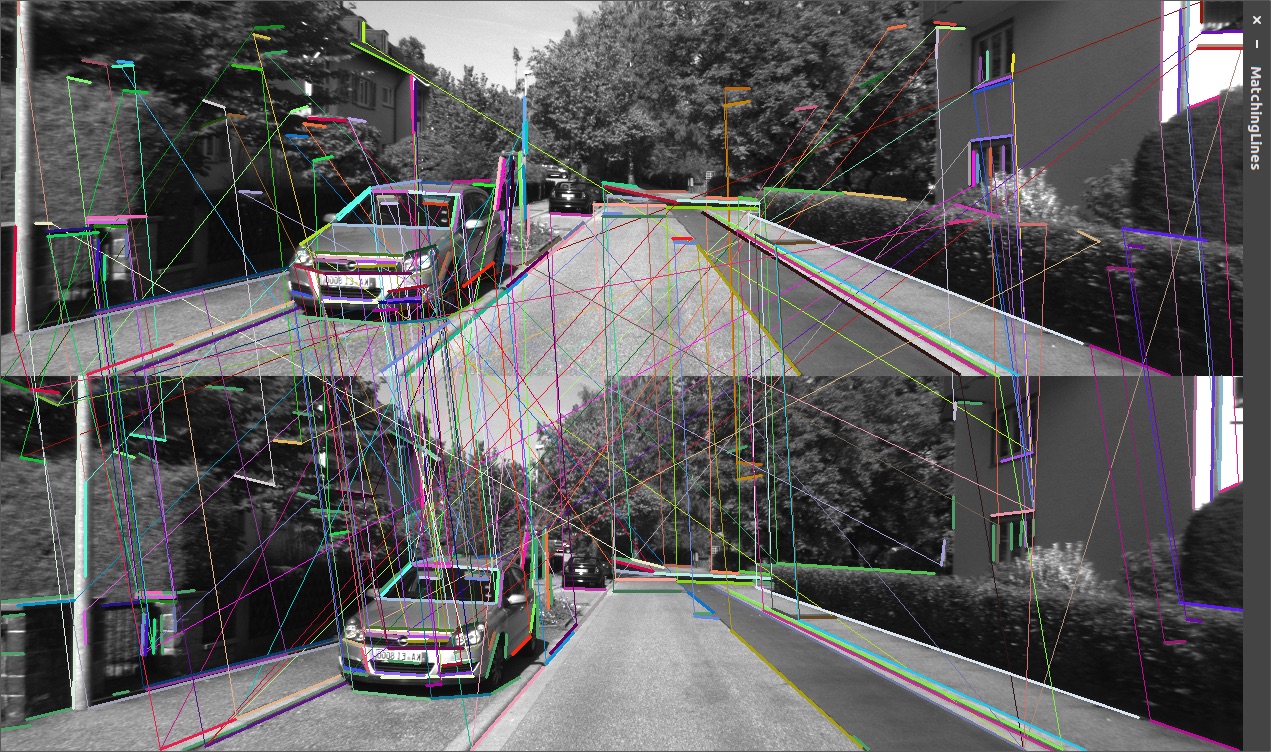

Here are some line matching results with the LBD line descriptor. As the picture shows, the results are not very good because of motion blur and the many repeated features on trees and grass.
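The report does not describe how matches were filtered. One common safeguard against repeated structure is a nearest-neighbour ratio test combined with a mutual consistency check on the Hamming distance between binary descriptors. The sketch below is illustrative only: descriptors are modeled as Python integers (bit strings), and all function names and the `ratio` value are assumptions.

```python
from typing import List, Optional, Sequence, Tuple


def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors stored as ints."""
    return bin(a ^ b).count("1")


def nearest_two(query: int, pool: Sequence[int]) -> Tuple[Tuple[int, int], Optional[Tuple[int, int]]]:
    """(distance, index) of the best and second-best match of `query` in `pool`."""
    ranked = sorted((hamming(query, d), j) for j, d in enumerate(pool))
    return ranked[0], (ranked[1] if len(ranked) > 1 else None)


def match_lines(desc1: Sequence[int], desc2: Sequence[int], ratio: float = 0.8) -> List[Tuple[int, int]]:
    """Ratio test plus mutual nearest-neighbour check on Hamming distance."""
    matches = []
    for i, d in enumerate(desc1):
        (dist, j), second = nearest_two(d, desc2)
        # Reject ambiguous matches, which repeated texture such as foliage produces.
        if second is not None and dist >= ratio * second[0]:
            continue
        # Keep the pair only if i is also the best match for j in the other image.
        (_, i_back), _ = nearest_two(desc2[j], desc1)
        if i_back == i:
            matches.append((i, j))
    return matches
```

Raising `ratio` toward 1 keeps more matches but lets through more of the ambiguous pairs that repeated texture creates; lowering it trades recall for precision.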

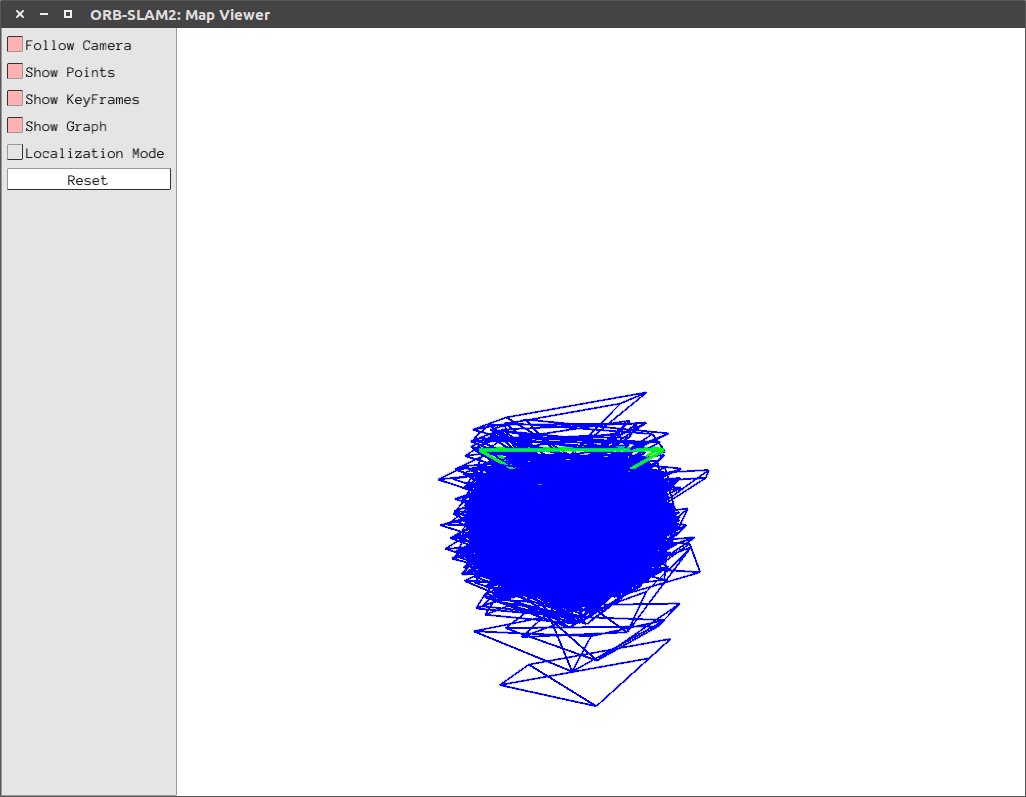

Here are some screenshots from our running code. Honestly, our trajectory is poor; we think the reasons are:

* First, the line triplets detected in the images are poor: many of them lie on trees and grass, where they are very unstable and inaccurate.

* Second, the line matching results are poor, with many mismatches, as shown above.

* Third, our solver is not yet mature: as the simulation test shows, it gives good results at low noise, but its performance degrades as the noise increases.

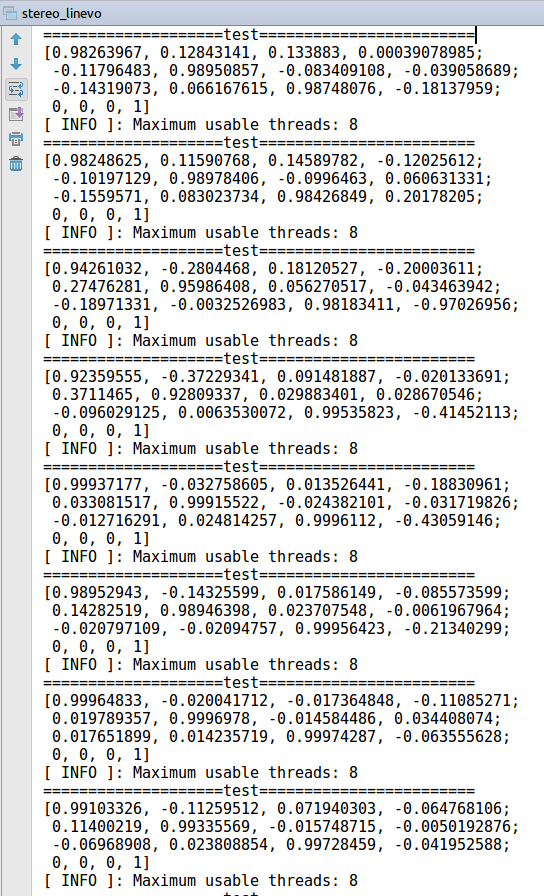

For these reasons, we added RANSAC and pose optimization over all the inliers. From the outputs below, the rotation matrix looks fine, but the translation still jumps back and forth. We think that with a more suitable dataset and an additional bundle adjustment step, the performance can be improved, but this work is not finished yet.
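The RANSAC step can be sketched generically: draw a minimal sample (two line triplets in the report's minimal case), solve for a hypothesis, count the measurements within a residual threshold, keep the largest consensus set, and finally re-estimate from all inliers. Because the trifocal solver itself is not given here, `solve_minimal`, `residual` and `refine` are hypothetical callables; the toy stand-ins below fit a 2D line only to exercise the loop.

```python
import random
from typing import Callable, List, Optional, Sequence, Tuple, TypeVar

D = TypeVar("D")  # one measurement (a line triplet in the report's setting)
M = TypeVar("M")  # one model hypothesis (a relative pose in the report's setting)


def ransac(data: Sequence[D],
           sample_size: int,
           solve_minimal: Callable[[List[D]], Optional[M]],
           residual: Callable[[M, D], float],
           refine: Callable[[List[D]], M],
           threshold: float,
           iterations: int = 200,
           seed: int = 0) -> Tuple[Optional[M], List[D]]:
    """Hypothesize from minimal samples, keep the largest consensus set, refit."""
    rng = random.Random(seed)
    pool = list(data)
    best_model: Optional[M] = None
    best_inliers: List[D] = []
    for _ in range(iterations):
        model = solve_minimal(rng.sample(pool, sample_size))
        if model is None:  # degenerate sample: no unique model
            continue
        inliers = [d for d in pool if residual(model, d) < threshold]
        if len(inliers) > len(best_inliers):
            best_model, best_inliers = model, inliers
    if best_model is None:
        return None, []
    # Final step: re-estimate from every inlier (the "pose optimization" stage).
    return refine(best_inliers), best_inliers


# Toy stand-ins for the hypothetical callables: fit y = a*x + b to 2D points.
def fit_two(pts):
    (x1, y1), (x2, y2) = pts
    if x1 == x2:
        return None
    a = (y2 - y1) / (x2 - x1)
    return a, y1 - a * x1


def vertical_error(model, p):
    a, b = model
    return abs(p[1] - (a * p[0] + b))


def least_squares(pts):
    n = len(pts)
    sx = sum(x for x, _ in pts)
    sy = sum(y for _, y in pts)
    sxx = sum(x * x for x, _ in pts)
    sxy = sum(x * y for x, y in pts)
    a = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    return a, (sy - a * sx) / n
```

Keeping the refit separate from the minimal solver mirrors the two stages named above: the minimal solver only has to be good enough to find the consensus set, while the final optimization uses all inliers.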