A project of the Robotics 2016 class of the School of Information Science and Technology (SIST) of ShanghaiTech University. Course Instructor: Prof. Sören Schwertfeger.

Yu Huangjie, Chen Xin, Liu Xuhui

Introduction

We propose a system made up of a robotic arm and a depth camera, aimed at grasping multiple objects and at human interaction. Grasping objects is a fundamental task for a robotic arm in a wide range of applications, e.g. in artificial intelligence. Furthermore, we use a Leap Motion controller for human interaction, allowing the robotic arm to mimic a human arm. Our system consists of two main parts: vision detection and mechanical control. Vision detection detects the objects and extracts a representative point for each one. Mechanical control is responsible for moving the arm correctly and precisely.
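The text does not say how the representative point is computed. The following is a minimal sketch, assuming the depth camera's point cloud has already had the table plane removed and that the centroid of each cluster is used as the representative point. It groups nearby points by Euclidean (distance-threshold) clustering and then averages each group. The names `euclidean_clusters` and `centroid` and the `tolerance`/`min_size` parameters are hypothetical illustrations, not the project's code.

```python
import math
from collections import deque
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float]


def euclidean_clusters(points: Sequence[Point], tolerance: float,
                       min_size: int = 1) -> List[List[int]]:
    """Group point indices so that each point lies within `tolerance`
    of at least one other point in its cluster (region growing)."""
    visited = [False] * len(points)
    clusters = []
    for seed in range(len(points)):
        if visited[seed]:
            continue
        visited[seed] = True
        queue = deque([seed])
        cluster = []
        while queue:
            i = queue.popleft()
            cluster.append(i)
            # Brute-force neighbour search; a k-d tree would be used on real clouds.
            for j in range(len(points)):
                if not visited[j] and math.dist(points[i], points[j]) <= tolerance:
                    visited[j] = True
                    queue.append(j)
        # Drop tiny clusters, which are usually sensor noise.
        if len(cluster) >= min_size:
            clusters.append(sorted(cluster))
    return clusters


def centroid(points: Sequence[Point], indices: List[int]) -> Point:
    """Mean position of the selected points (assumed representative point)."""
    n = len(indices)
    return tuple(sum(points[i][k] for i in indices) / n for k in range(3))


if __name__ == "__main__":
    # Two small, well-separated "objects" (coordinates in metres).
    cloud = [(0.0, 0.0, 0.0), (0.01, 0.0, 0.0), (0.0, 0.01, 0.0),
             (0.5, 0.5, 0.5), (0.51, 0.5, 0.5)]
    for idx in euclidean_clusters(cloud, tolerance=0.02):
        print(idx, centroid(cloud, idx))
```

With a 2 cm tolerance the sample cloud splits into clusters `[0, 1, 2]` and `[3, 4]`. On full-size depth-camera clouds, the Point Cloud Library's `pcl::EuclideanClusterExtraction` performs the same kind of clustering with a k-d tree for the neighbour search.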

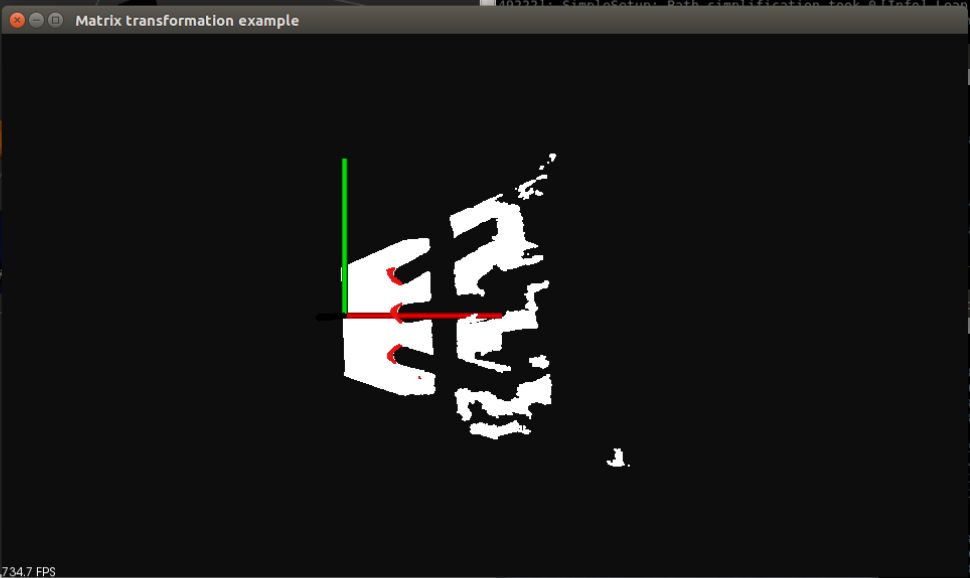

Point cloud cluster

Blob detection result

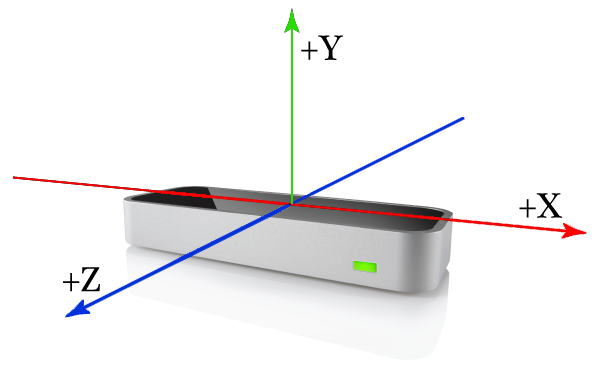

Leap Motion

The Leap Motion system recognizes and tracks hands and fingers. The device operates at close range with high precision and a high tracking frame rate, and it reports discrete positions and motions. The Leap Motion controller uses optical sensors and infrared light. The sensors are directed along the y-axis (upward when the controller is in its standard operating position) and have a field of view of about 150 degrees. The effective range of the Leap Motion Controller extends from approximately 25 to 600 millimeters above the device (about 1 inch to 2 feet). Detection and tracking work best when the controller has a clear, high-contrast view of an object's silhouette. The Leap Motion software combines its sensor data with an internal model of the human hand to help cope with challenging tracking conditions.
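Before a tracked hand position is forwarded to the arm, it can help to reject points outside the region where tracking is reliable. The sketch below uses only the figures quoted above: a height range of 25 to 600 mm along the upward y-axis and a 150-degree field of view. It assumes that the field of view can be modeled as a cone around the y-axis with a 75-degree half-angle. That cone model and the name `in_tracking_volume` are hypothetical simplifications, not part of the Leap Motion SDK or the project's code.

```python
import math

# Figures quoted in the text; the cone-shaped volume is an assumption.
MIN_HEIGHT_MM = 25.0
MAX_HEIGHT_MM = 600.0
FIELD_OF_VIEW_DEG = 150.0


def in_tracking_volume(x: float, y: float, z: float) -> bool:
    """Return True if a point (millimetres, controller frame, +y pointing
    up) lies in the assumed tracking cone and within the height range."""
    if not (MIN_HEIGHT_MM <= y <= MAX_HEIGHT_MM):
        return False
    # Angle between the direction to the point and the +y (sensor) axis.
    off_axis_deg = math.degrees(math.atan2(math.hypot(x, z), y))
    return off_axis_deg <= FIELD_OF_VIEW_DEG / 2


if __name__ == "__main__":
    print(in_tracking_volume(0.0, 200.0, 0.0))    # directly above the device
    print(in_tracking_volume(500.0, 100.0, 0.0))  # far off to the side
```

For example, a point 500 mm to the side and 100 mm up lies about 79 degrees off the vertical axis, so it falls outside the assumed 75-degree half-angle and is rejected even though its height is within range.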

Video Presentation